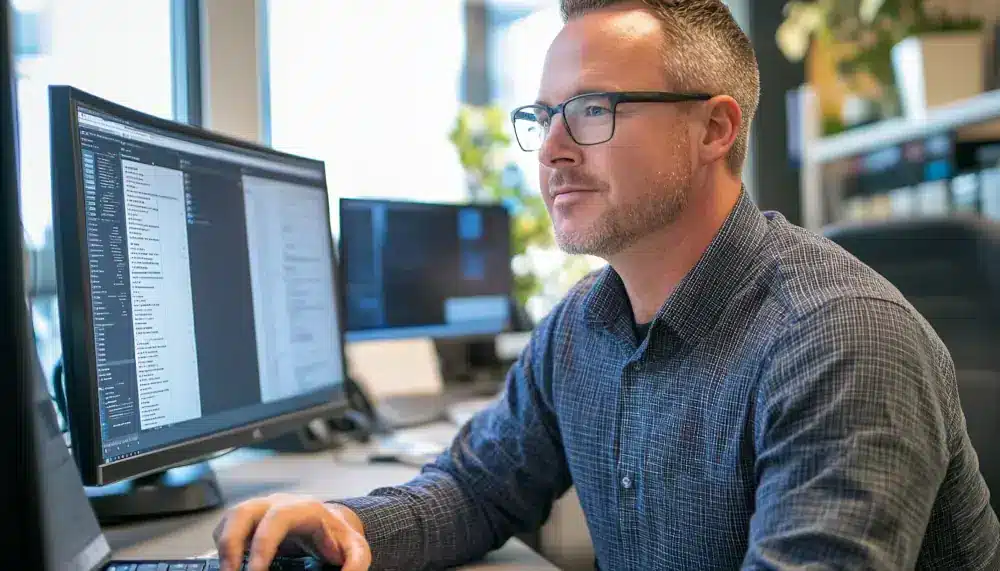

New technologies generate massive amounts of data every day. One of the biggest challenges for companies is managing, monitoring, and delivering this data efficiently, so that strategic decisions rest on a reliable data infrastructure. This is where the Data Ops Engineer comes in: a key role in Data Engineering that keeps data processes automated and running smoothly.

To identify the key skills for a Data Ops Engineer in 2024, we surveyed 25 Data Managers from leading companies. The most frequently requested skills are:

✅ Data integration and workflow automation

✅ SQL, NoSQL, and database management

✅ DevOps and DataOps tools (Kubernetes, Terraform, Airflow, dbt)

✅ Cloud platforms and big data technologies

✅ Soft skills: problem-solving, communication, and systems thinking

The goal of a Data Ops Engineer is to ensure a powerful, scalable, and stable data infrastructure. The best way to achieve this? Practical training that equips you with the most in-demand skills and tools.

🔍 Explore the role of a Data Ops Engineer in detail: tasks, core competencies, career prospects, and salary expectations. Or read our blog article on the topic. 🚀