Huffman coding is a data compression algorithm. The idea is to assign variable-length codes to the input characters, the length of the assigned codes being based on the frequency of occurrence of the characters in the initial sequence.

Huffman coding is a lossless data compression algorithm. The algorithm was invented in 1952 by David Albert Huffman. Huffman coding is generally important for compressing data containing frequently occurring characters.

The frequency factor is taken into account here because of the notion of information entropy, since the more frequent a character is, the higher its entropy.

How does Huffman coding work?

The principle of Huffman coding is based on the construction of a tree structure with nodes.

- This algorithm is based on the idea that the best way to code information is to find the optimal length to represent each character in the initial sequence.

- The optimal length of the code corresponding to a character is given by the number of branches that need to be traversed from the top of the Huffman Tree to arrive at this character, which will always be a leaf of the Huffman Tree.

The idea behind the Huffman Tree is to minimize the length of the symbols used to encode a message, which in a way means minimizing the size of the tree. But how is the Huffman tree constructed?

What is the principle of Huffman coding?

As for the construction of the tree, we associate each time the two nodes with the lowest weights, to give a new “father” node whose weight is equivalent to the sum of the weights of its children.

This process is repeated until only one node remains: the root of the Huffman tree, whose weight is the sum of the weights of all the initial leaf nodes.

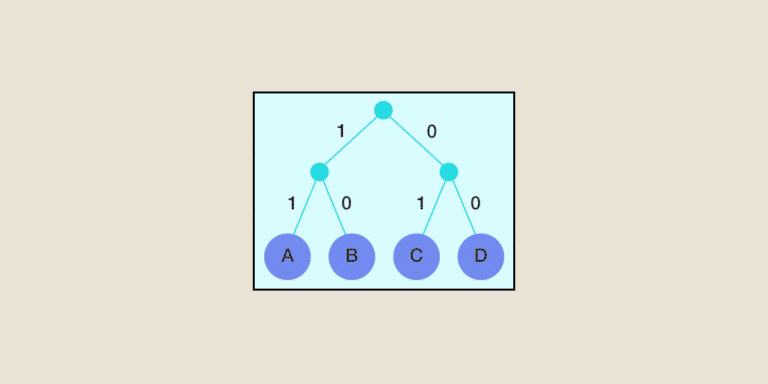

We then associate, for example, code 0 with each left-hand branch and code 1 with each right-hand branch.

To obtain the binary code of each character, we run through the tree from top to bottom, each time taking the 0 or 1 character of the branches we pass through, depending on whether we’re going right or left.

The different stages in the construction of the Huffman Tree

- Construct leaf nodes from each character of the message to be coded, each character being taken into account only once with the different frequencies of appearance of the characters to be arranged in order of priority, the highest priority being those with the lowest frequencies.

- Extract the two lowest-weight nodes in ascending order of frequency, i.e. the node with the lowest frequency should be extracted first.

- Create a new internal node with a frequency equal to the sum of the frequencies of these two nodes. Define the first node extracted as the left-hand child of this node and the second node as the right-hand child. Add the newly created node to the list of remaining nodes.

- Repeat steps 2 and 3 until you have passed through all the nodes obtained in step 1. The remaining node is the root of the tree and construction is complete.

An example to illustrate coding

To illustrate the idea of Huffman coding, we’ll consider the message below, which we’ll optimally represent in binary form:

aabbccddbbeaebdddfffdbffddabbbbbcdefaabbcccccaabbddfffdcecc

For a message to be coded like this one, let’s start by counting the frequency of distinct characters in the message. After counting, we obtain the following result:

a:8, b:15, c:11, d:12, e:4, f:9

We will implement the Huffman coding algorithm on this example.

The first step is to use the least frequent characters in the message to be coded. We’ll start with the letters ‘e’ and ‘a’, which have the lowest frequencies, then sum their weights, i.e. their frequencies of appearance, in order to replace the two characters with a new single character whose frequency will be the sum of the frequencies of these two characters in the list. At the end of this first step, the list becomes :

new node:12, b:15, c:11, d:12, f:9

Then, from the new list obtained, choose the two elements with the lowest weights, and iterate the process until you obtain the node with the highest weight, i.e. with the weight of the sum of the frequencies of all the characters. Note that at the end of the Huffman tree construction, all the distinct characters of the initial message to be coded are leaves of the Huffman tree. At the end of the run, we obtain the following results:

d -> 00

e -> 010

a -> 011

b -> 10

f -> 110

c -> 111

What is Huffman coding used for in working life?

The various applications of the Huffman coding algorithm for data compression include fax and text transmission, conventional compression formats such as GZIP, and multimedia software such as JPEG, PNG and MP3.

In general, data compression is useful for increasing memory storage capacity or data transmission speed.

There are a number of variations on Huffman coding when creating a tree.

- Either the dictionary is static: each character has a predefined code that is known or published in advance (and therefore does not need to be transmitted);

- or the dictionary is semi-adaptive: the content is analyzed to calculate the frequencies of each character, and an optimized tree is used for coding (it must then be transmitted for decoding);

- Or the dictionary is adaptive: starting from a known tree (published beforehand and therefore not transmitted), it is modified during compression and optimized as it goes along. The computation time is much longer, but often offers a better compression ratio.

Ultimately, Huffman coding is a very useful data compression algorithm with many applications today, from increasing storage capacity to increasing data transmission speed. There are several variants of this data compression technique, including the dynamic variant, which is an improvement on the static variant presented in this article.