Unbalanced data is very common in Machine Learning. Unfortunately, they complicate predictive analysis. So to balance these data sets, several methods have been implemented.

How to manage unbalanced data with resampling?

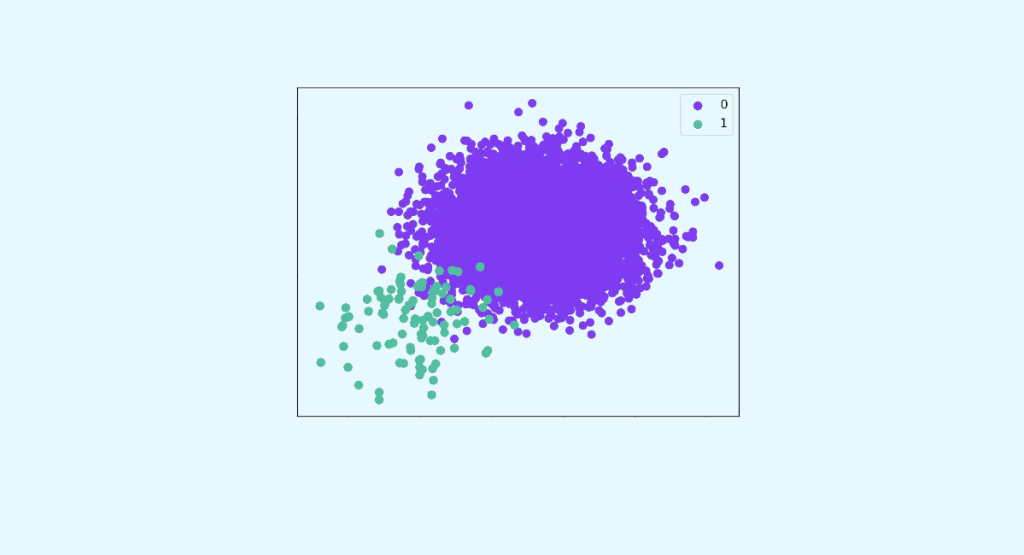

Unbalanced data are characterized by samples where a strong disparity is observed. For example, the ratio between classes is not 50/50, but rather 90/10. It is from this point on that data will pose problems in Machine Learning and Deep Learning.

This often refers to relatively rare events, such as insurance fraud or the detection of disease. For example, in a population, a large majority may be healthy, and only 0.1% may have multiple sclerosis. The healthy people are then the majority class and the sick people are considered the minority class. While these events are quite common, they represent only a tiny fraction of the sample studied. Thai is why it could be difficult to predict them

In Machine Learning, unbalanced data are very common, especially for binary classification. This is why several methods are being developed to better manage unbalanced classification problems. In particular through data resampling.

What are the two resampling methods?

Resampling consists in modifying the data set before training the predictive model. This balances the data to make the prediction easier. For this purpose, there are two resampling methods.

Oversampling

This involves increasing the data belonging to the minority class until reaching a certain balance. Or at least a satisfactory rate to make reliable predictions.

Data Scientists can use two resampling methods. To wit:

- Random oversampling: here, the minority data are cloned several times at random. This technique is especially useful for linear models, such as logistic regression.

- Synthetic oversampling: the idea is, again, to add data from minority classes. But instead of copying them identically, the algorithm creates separate but similar data.

Subsampling

On the contrary, undersampling will decrease the data from the majority class to balance the ratio.

Random undersampling is mainly used. This means that the majority of data are removed randomly. This resampling technique should be preferred when you have large data sets (at least several tens of thousands of cases).

If this method is the most common, you can also use undersampling of border observations or clustering-based undersampling. That is to say, specifically remove certain majority data.

In both cases, it is not necessary to obtain a perfect 50/50 balance. It is possible to remove majority data or add minority data to obtain a ratio of 45/55 or even 40/60.

In addition, do not hesitate to test several class ratios to select the one that will give you the best prediction performance.