GPT-4, PaLM 2, Bard... advances in artificial intelligence keep multiplying. Above all, they are becoming accessible to everyone.

Not just to data experts, but also to business leaders, marketing managers, and even students looking for a little help.

However, getting good results from these generative AI tools is not automatic. The more widely they are used, the more important it becomes to know how to optimize their output.

This is where Prompt Engineering and Fine-Tuning come in. So what are they? What are the differences between these two concepts? That’s what we’re going to find out.

What is Fine-Tuning?

Despite their name, AI models are not “intelligent”. Behind this supposed intelligence lies an ability to find relationships in large, complex data sets. Because large language models (LLMs) are pre-trained on such vast and general data, they often need further optimization to perform well on a particular task. Fine-Tuning is one of these optimization techniques.

Definition of Fine-Tuning

Applied after pre-training, this technique specializes a model that was originally trained on a broad distribution of data. Some models are designed to perform generic tasks, while others are expected to handle specific ones.

It’s precisely in the latter case that Fine-Tuning comes into its own. The idea is to respond to the user’s specific needs. For example, a customer support chatbot for a clothing company will not be trained in the same way as a chatbot tasked with generating medical pre-diagnoses.

The aim of Fine-Tuning is to optimize the performance of an existing model by re-training it on specific data. By adjusting the model’s weights (its internal parameters), this technique enables an AI system to adapt to more specific tasks.
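To illustrate the principle of adjusting existing weights rather than training from scratch, here is a deliberately tiny sketch. It assumes a two-parameter linear model standing in for an LLM, hypothetical "pre-trained" weights, and a hypothetical task-specific dataset. Real LLM fine-tuning updates billions of parameters with dedicated frameworks, but the core idea of gradient updates starting from pre-trained weights is the same.

```python
# Toy illustration of fine-tuning: start from "pre-trained" weights and keep
# training them on a small, task-specific dataset with gradient descent.
# The model and data are hypothetical stand-ins, not a real LLM.

def predict(weights, x):
    """Linear model: y = w0 + w1 * x."""
    return weights[0] + weights[1] * x

def mse(weights, data):
    """Mean squared error of the model on (x, y) pairs."""
    return sum((predict(weights, x) - y) ** 2 for x, y in data) / len(data)

def fine_tune(weights, data, lr=0.05, epochs=1000):
    """Run plain gradient descent on MSE, starting from the given weights."""
    w0, w1 = weights
    n = len(data)
    for _ in range(epochs):
        # Partial derivatives of the mean squared error w.r.t. w0 and w1.
        g0 = sum(2 * (w0 + w1 * x - y) for x, y in data) / n
        g1 = sum(2 * (w0 + w1 * x - y) * x for x, y in data) / n
        w0 -= lr * g0
        w1 -= lr * g1
    return [w0, w1]

# Hypothetical weights obtained from "pre-training" on generic data.
pretrained = [0.0, 1.0]
# Hypothetical task-specific data that follows y = 1 + 2x.
specific_data = [(0, 1), (1, 3), (2, 5), (3, 7)]

tuned = fine_tune(pretrained, specific_data)
print("loss before:", mse(pretrained, specific_data))  # loss before: 7.5
print("loss after: ", mse(tuned, specific_data))
```

The pre-trained weights are the starting point, and only a short extra training run on the specialized data is needed to move them to values that fit the new task.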

Successful Fine-Tuning

To improve AI system performance through Fine-Tuning, special attention needs to be paid to two factors:

- Data quality: to respond to specific tasks, the training data must also be specific to those tasks, as well as clean and representative.

- Training steps: it’s not just a question of training the model to contextualize the data, but of guiding it towards the best results. This often involves setting up a feedback loop based on human evaluations of the model’s answers.
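Both factors can be sketched as a simple data-preparation step that runs before any training. The example below assumes hypothetical support-chatbot examples, hypothetical field names (`prompt`, `response`, `rating`) and a hypothetical human rating scale from 1 to 5. It drops duplicates, empty answers and poorly rated answers, then writes the result as JSONL (one JSON object per line), a format commonly used for fine-tuning data.

```python
import json

# Hypothetical raw examples for a clothing-store support chatbot.
# "rating" stands for a human reviewer's score from 1 (bad) to 5 (good).
raw_examples = [
    {"prompt": "Can I return a shirt after 20 days?",
     "response": "Yes, returns are accepted within 30 days.", "rating": 5},
    {"prompt": "Can I return a shirt after 20 days?",
     "response": "Yes, returns are accepted within 30 days.", "rating": 5},  # duplicate
    {"prompt": "Do you sell winter coats?", "response": "", "rating": 4},  # empty answer
    {"prompt": "What sizes do jeans come in?",
     "response": "I don't know.", "rating": 1},  # poorly rated by a human
    {"prompt": "How long does delivery take?",
     "response": "Standard delivery takes 3 to 5 business days.", "rating": 4},
]

def clean_dataset(examples, min_rating=4):
    """Keep unique, non-empty examples that human reviewers rated highly."""
    seen = set()
    kept = []
    for ex in examples:
        prompt = ex["prompt"].strip()
        response = ex["response"].strip()
        key = (prompt, response)
        # Skip empty or duplicate examples.
        if not prompt or not response or key in seen:
            continue
        # Skip examples that human evaluators judged inadequate.
        if ex["rating"] < min_rating:
            continue
        seen.add(key)
        kept.append({"prompt": prompt, "response": response})
    return kept

dataset = clean_dataset(raw_examples)
jsonl = "\n".join(json.dumps(ex) for ex in dataset)
print(jsonl)
```

Only two of the five raw examples survive, which reflects the point above: for Fine-Tuning, a smaller set of specific, well-reviewed examples is worth more than a large pile of noisy ones.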

What is Prompt Engineering?

Like Fine-Tuning, Prompt Engineering aims to improve the results of a machine learning model. But here, the model itself is not retrained: the focus is on the prompts that produce the results.

As a reminder, prompts are the requests a user sends to a generative artificial intelligence application (such as ChatGPT). And it’s precisely the quality of these requests (the prompt inputs) that largely determines the quality of the result (the output).

In concrete terms, when you enter a query, the model first breaks the prompt down into small units called tokens, then processes them to infer its meaning and intent.
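To make "breaking down the prompt" more tangible, here is a simplified sketch of subword tokenization using greedy longest-match, in the spirit of WordPiece. The vocabulary is hypothetical and tiny; real models such as GPT-4 use learned subword vocabularies (for example byte-pair encoding) with tens of thousands of entries, and they handle spaces and continuation pieces differently.

```python
# Hypothetical miniature vocabulary of subword pieces. Real LLM vocabularies
# are learned from data and are far larger.
VOCAB = {"summar", "ize", "this", "art", "icle", "in", "three", "bullet", "points", "."}

def tokenize(text, vocab=VOCAB):
    """Greedy longest-match subword tokenization, in the spirit of WordPiece."""
    tokens = []
    for word in text.lower().split():
        i = 0
        while i < len(word):
            # Look for the longest vocabulary piece starting at position i.
            for j in range(len(word), i, -1):
                if word[i:j] in vocab:
                    tokens.append(word[i:j])
                    i = j
                    break
            else:
                # No piece matches: fall back to a single-character token.
                tokens.append(word[i])
                i += 1
    return tokens

print(tokenize("Summarize this article in three bullet points."))
# ['summar', 'ize', 'this', 'art', 'icle', 'in', 'three', 'bullet', 'points', '.']
```

Words missing from the vocabulary, such as "summarize" or "article", are split into smaller known pieces, which is how a model can process words it never saw as a whole.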

If you ask a fairly general question, the AI system will answer based on the data it was trained on. But since it may not have fully grasped your intention, its answer may miss the point. Conversely, if you provide a precise prompt explaining your intention and the context, the AI will be better able to respond to your request.

Prompt Engineering therefore involves guiding the responses of ML models by formulating specific queries. For successful prompts, don’t hesitate to ask more and more specific questions, and to test different ways of formulating instructions.
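One practical way to apply these tips is to build prompts from a template that makes the task, the context, the audience and the expected format explicit, and then to generate several variants to compare. The sketch below assumes a hypothetical `build_prompt` helper and example wording of our own; it only assembles the prompt text and does not call any particular AI service.

```python
def build_prompt(task, context="", audience="", output_format=""):
    """Assemble a prompt that states the task plus optional context, audience and format."""
    parts = [f"Task: {task}"]
    if context:
        parts.append(f"Context: {context}")
    if audience:
        parts.append(f"Audience: {audience}")
    if output_format:
        parts.append(f"Answer format: {output_format}")
    return "\n".join(parts)

# A vague prompt: the model has to guess the intention.
vague = build_prompt("Tell me about fine-tuning.")

# A precise prompt: intention, context and expected format are spelled out.
precise = build_prompt(
    "Explain what fine-tuning a language model means.",
    context="I run a clothing e-commerce site and am considering a support chatbot.",
    audience="A marketing manager with no machine learning background.",
    output_format="Three short bullet points, then one sentence of advice.",
)

# Several formulations of the same request, to test which works best.
variants = [
    build_prompt("Explain what fine-tuning a language model means.", output_format=fmt)
    for fmt in ("One paragraph.", "Three bullet points.", "A short analogy.")
]

print(precise)
```

Each variant can then be sent to the same model and the answers compared side by side, which is the trial-and-error loop at the heart of Prompt Engineering.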

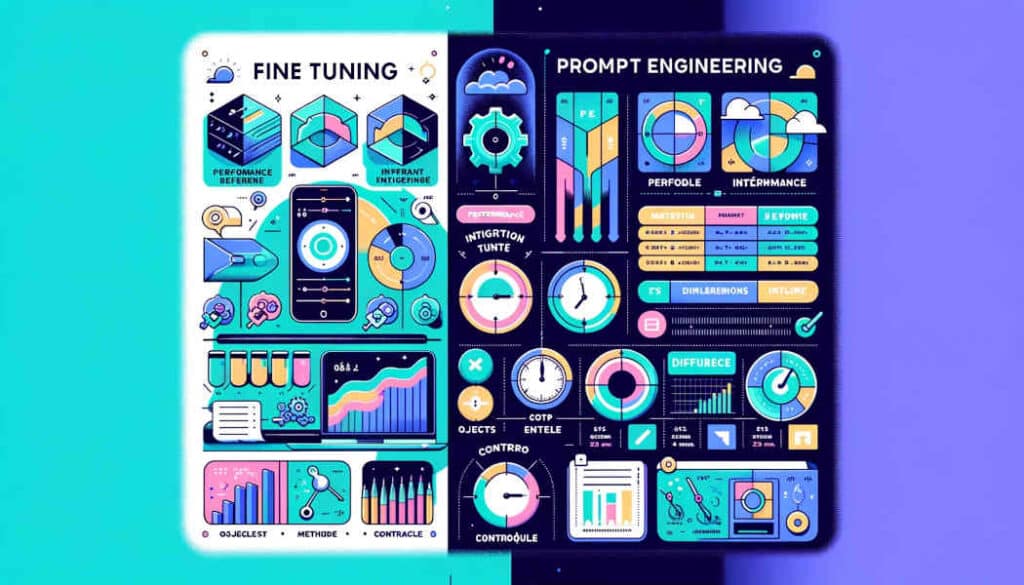

Prompt Engineering vs Fine-Tuning - What are the differences?

Fine-Tuning and Prompt Engineering are two AI optimization techniques. However, they differ in several important ways:

- The objective: Prompt Engineering focuses on getting relevant results from an existing model, whereas Fine-Tuning aims to improve the performance of the model itself on certain tasks.

- The method: Prompt Engineering relies on the instructions given as input, which need to be more detailed to achieve better results. Fine-Tuning, on the other hand, relies on retraining a model with new, task-specific data.

- Control: with Prompt Engineering, the user steers the results directly through each prompt, without changing the model. Fine-Tuning, on the other hand, builds the desired behavior into the model itself, so that it produces the expected results with less guidance.

- Resources: Prompt Engineering requires few resources, especially since many generative AI applications are free, so anyone can refine their prompts to optimize results. Fine-Tuning, on the other hand, requires substantial resources, both the computing power needed to train models and the effort of collecting and preparing large volumes of specific data.

How do you master Fine-Tuning and Prompt Engineering?

Although distinct, Fine-Tuning and Prompt Engineering share a common goal: improving the accuracy and relevance of the answers produced by generative AI models. For data experts, mastering both techniques is essential. That’s where DataScientest comes in.

Through our training program, you’ll discover the best techniques for refining the results of language models. Come and join us!