Drawing on established security best practices and its own expertise in AI, Google has created the Secure AI Framework (SAIF). This framework proposes industry-wide guidelines for building and deploying safer AI.

Safer AI?

To enable the creation of high-quality, secure artificial intelligence, Google has introduced SAIF. Its guidelines are meant to apply from the moment an AI system is designed, so that its risks are managed from the start rather than after deployment.

To design SAIF, Google drew inspiration from the security best practices it already applies to software development. To protect training data, SAIF also calls for reviewing and testing the supply chain, as established cybersecurity practice does for software.

Its aim is to strengthen user confidence in AI: a model built according to SAIF is meant to be secure and reliable.

What are the SAIF principles?

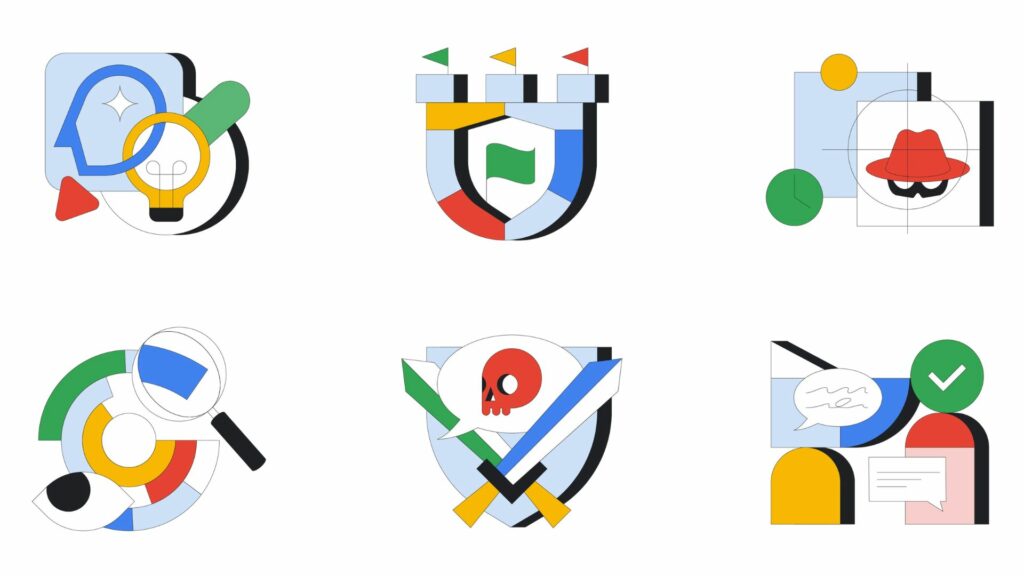

SAIF is based on 6 principles:

- Expand strong security foundations to the AI ecosystem: Google leverages its secure-by-default infrastructure to protect AI systems, applications, and users. Organizations can stay ahead of potential vulnerabilities by continuously adapting infrastructure protections to evolving threat models.

- Extend detection and response to AI-related threats: Timely detection and response to cyber incidents are critical in safeguarding AI systems. Integrating threat intelligence capabilities into an organization’s security framework enhances monitoring, enabling early anomaly detection and proactive defense against AI-related attacks.

- Automate defenses to counter emerging threats: AI innovations can enhance the scale and speed of response efforts against security incidents. Employing AI itself to bolster defense mechanisms allows organizations to efficiently protect against adversaries who may exploit AI for malicious purposes.

- Harmonize platform-level controls for consistent security: Consistency in security controls across different platforms and tools ensures uniform protection against AI risks. Organizations can scale up their AI risk mitigation efforts by leveraging a harmonized approach to security controls.

- Adapt controls to enable faster feedback loops: Constant testing and learning are vital in adapting AI systems to the evolving threat landscape. Organizations should incorporate feedback loops that enable continuous refinement, such as reinforcement learning based on incidents and user feedback, fine-tuning models, and embedding security measures in the software used for model development.

- Contextualize AI system risks in business processes: Conducting comprehensive risk assessments considering the entire AI deployment process is crucial. Organizations should evaluate factors like data lineage, validation processes, operational behavior monitoring, and automated checks to ensure AI performance meets security standards.
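To make the last principle more concrete, here is a minimal Python sketch of what "automated checks" before a deployment might look like. It is not part of SAIF itself: the `ModelRelease` record, the `run_release_checks` function, and the 0.9 accuracy threshold are all hypothetical names and values chosen for illustration. The sketch checks data lineage (by comparing a hash of the training data with a previously approved hash), a validation score, and whether operational monitoring is switched on.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class ModelRelease:
    """Hypothetical record describing a model that is about to be deployed."""
    name: str
    training_data: bytes        # toy stand-in for the real training set
    approved_data_sha256: str   # hash recorded when the data was approved
    validation_accuracy: float  # score from an offline validation run
    monitoring_enabled: bool    # is operational behavior monitoring on?


def run_release_checks(release: ModelRelease, min_accuracy: float = 0.9) -> list[str]:
    """Return the list of failed checks; an empty list means the release passes."""
    failures = []

    # Data lineage: the data used for training must match the approved data.
    actual_hash = hashlib.sha256(release.training_data).hexdigest()
    if actual_hash != release.approved_data_sha256:
        failures.append("data lineage: training data does not match the approved hash")

    # Validation: the model must reach the (assumed) minimum accuracy.
    if release.validation_accuracy < min_accuracy:
        failures.append("validation: accuracy is below the required threshold")

    # Operational behavior monitoring must be active before deployment.
    if not release.monitoring_enabled:
        failures.append("monitoring: operational behavior monitoring is disabled")

    return failures


if __name__ == "__main__":
    data = b"example training data"
    release = ModelRelease(
        name="demo-model",
        training_data=data,
        approved_data_sha256=hashlib.sha256(data).hexdigest(),
        validation_accuracy=0.95,
        monitoring_enabled=True,
    )
    print(run_release_checks(release))
```

In a real pipeline, a gate like this would typically run automatically before each deployment and block the release whenever the returned list is not empty.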

SAIF could help address growing concerns about AI, which many scientists and business leaders, including Sam Altman, CEO of OpenAI, see as a potential threat to the future of humanity. To ensure that AI assists humans rather than replaces them, data teams need continuous training in the discoveries and innovations that emerge every day. That’s why, if you’ve enjoyed this article and are considering a career in Data Science, don’t hesitate to discover our articles or training offers on DataScientest.

Source: blog.google