ChatGPT, now you see why?

GPT stands for ‘Generative Pre-trained Transformer’ (for the French variant), and it’s a very clear name that perfectly summarizes its operation!

How come you’re not convinced?

Imagine GPT as a super-expert in word prediction, its main talent is guessing which word would most likely complete the beginning of a sentence. By repeating this prediction over and over, word by word, it builds entire sentences, paragraphs, and even complete articles!

It helped a bit in writing this one, but there’s still a human behind it…

Let’s detail together the different steps of operation to see how GPT learns to understand how human language works.

Step 1: Transforming Words into Numbers

Computers don’t understand words like we do. For them, “cat” or “house” are just sequences of letters; for a machine to work with words, they need to be transformed into numbers.

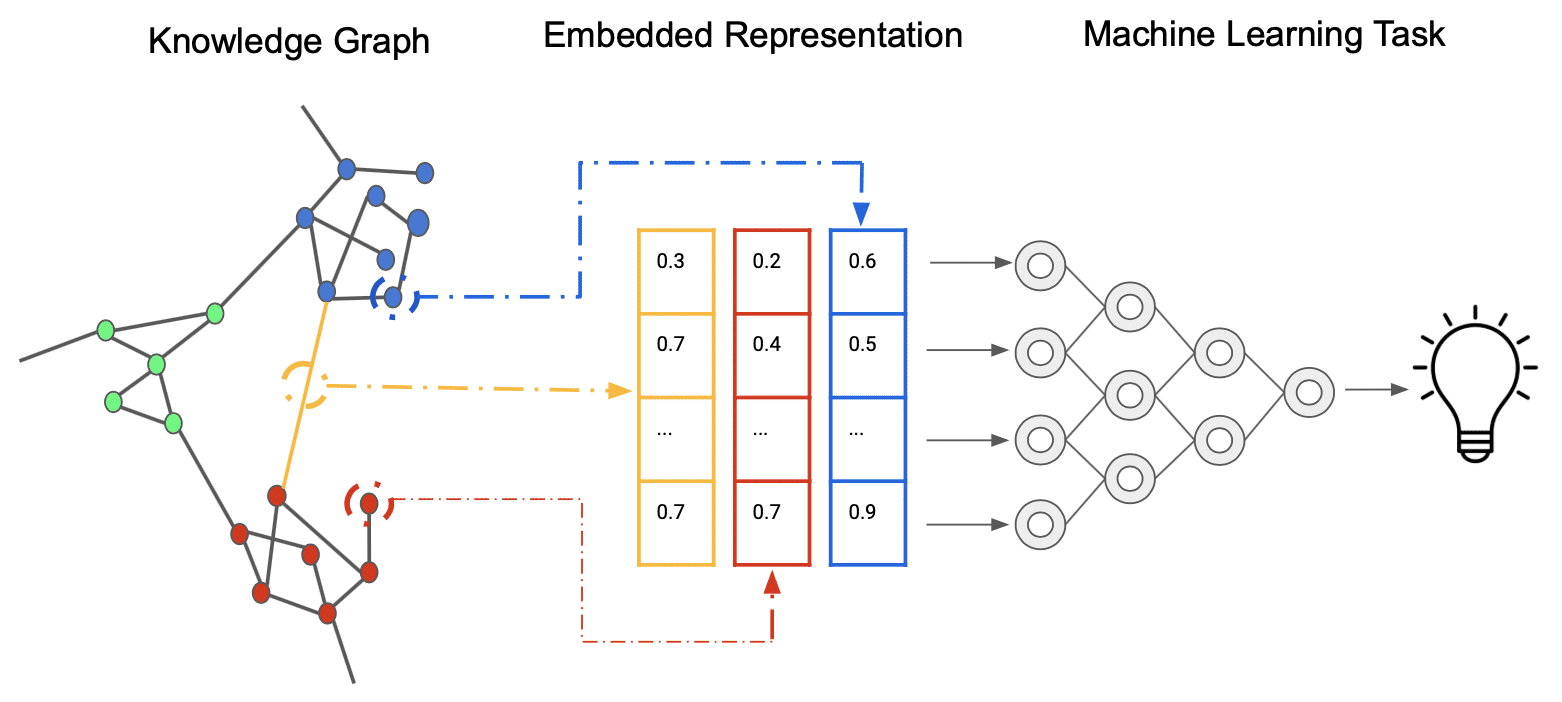

This is the role of Embeddings. Imagine that each word in the language (or almost) receives a unique secret code, which is a list of numbers.

Think of a huge library. Each book (each word for GPT) gets a special label with a unique barcode (the embedding vector). This barcode isn’t just an ID number; it contains hidden information about the book.

For example, the barcodes of science fiction books might look similar, as do those of cookbooks, and if they are science fiction books that also talk about robots, their barcodes would be even closer!

The more two words have a similar meaning, the “closer” their list of numbers (their embedding vector) will be in an imaginary space. For example, the vectors for ‘king’ and ‘queen’ will be very close, and the difference between the ‘king’ vector and ‘man’ will be similar to the difference between ‘queen’ and ‘woman’! Pretty handy, right?

The first thing GPT does when you give it text is to look at each word and find its corresponding list of numbers (embedding vector) in its “secret code table.”

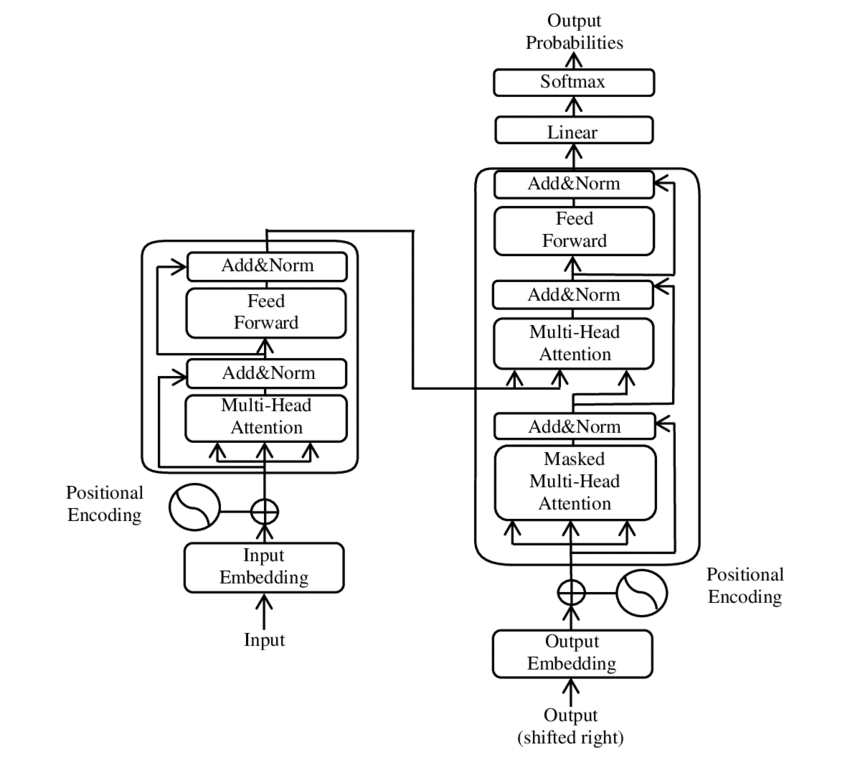

Step 2: The Order of Words (Positional Encoding)

We now have a list of numbers for each word. However, if we mix up the words, the sentence loses its meaning: “The cat sleeps” doesn’t mean the same as “Sleeps the cat.” I tell you nothing new; word order is crucial!

The issue is that our GPT model processes words in parallel (for obvious execution time reasons), thus without considering their order. To solve this, we add extra information to each word’s number list: a position marker.

These position markers (Positional Encoding) are also lists of numbers, calculated with special mathematical formulas.

We won’t go into details here, let’s just note that it works really well!

We simply add them to the word’s Embedding vectors (their own barcode).

Now the model has a list of embedding vectors for each word, which contains information on our whole sentence; each element of this list contains both the information about the word itself and about where it is in the sentence.

Step 3: The Transformer

Nothing to do with Optimus Prime, though…

The smartest part of GPT is the Transformer architecture. Think of it as the brain that analyzes the list of numbers of the sentence to understand the context and predict the next word.

GPT models use a simplified version of the original Transformer, called the Decoder. Why? Because their job is to generate text, and that’s the role of the Decoder!

This Transformer is built by stacking several identical “blocks” on top of each other. The more blocks there are (the more complex it becomes), the more powerful it is.

Each block has several steps to process the embedding vectors of our words:

1. The Principle of Attention

This is the brilliant idea behind the Transformer. When you read a sentence, you don’t give the same importance to all words to understand the meaning. For example, in “The DataScientest student who had studied well passed their exam,” to understand “passed,” you focus on “student” and “exam.”

The Attention mechanism allows our model to do the same: for each word in the sentence, it looks at all previous words and decides which ones are the most important to understand the current word and predict the next!

Often, the Transformer uses several “attention heads” in parallel. It’s like having several people reading the sentence at the same time, each focusing on a different type of relation (one on grammar, one on meaning…), to then combine their analyses.

2. Reflection (Or Feed-Forward)

After the Attention mechanism allows each word to integrate the context of the previous words, each embedding vector independently passes through several layers of mathematical functions that rely on numbers, called weights. This ensemble is called a neural network.

This layer allows the model to perform more complex transformations on the information that Attention has extracted.

Step 4: Rinse and Repeat!

The real power of GPT comes from the fact that it’s not a single Transformer block but several (dozens, even hundreds!) stacked on top of each other.

Imagine a multi-story factory, on each floor, our embedding vectors are processed by the Attention and Reflection mechanisms. The information that comes out of one floor then becomes the input for the floor below.

The first layers learn to handle simple relationships between words.

The intermediate layers combine this information to understand more complex relationships and the structure of sentences. The last layers understand the overall meaning, tone, and style. The information becomes richer as it goes down the floors!

And more and more abstract and incomprehensible to us, poor humans.

Step 5: Training

Imagine we give the machine billions of texts (books, articles, web pages…). We hide the next word in each sentence from it and say: “Guess!”

This is Training.

The model tries to predict the next word based on the previous words.

At first, it makes lots of mistakes, but each time it does, we tell it: “No, the real word was this one,” and the model then adjusts its internal parameters (the weights!) so that the next time it sees a similar situation, it has a better chance of guessing the right word.

This error-based adjustment process is called Gradient Descent.

By playing this prediction game billions of times on billions of texts, the model learns not only which words often go together but also grammar, syntax, and even different writing styles!

Final Step: Text Generation

Once the model is trained, it is ready to generate text, based on an initial prompt to predict associated words, the prompt!

- The model takes your prompt, transforms it into lists of numbers, and sends them through all its Transformer blocks.

- It outputs a list of probabilities for each possible word in the vocabulary. For example, after ‘Once upon a time…,’ the word ‘there was‘ has an 80% chance, ‘once‘ has 10%, ‘the‘ has 5%, ‘in‘ has 3%…

- The model then chooses a word from this list of possibilities. It doesn’t always pick the most probable to ensure the text isn’t too repetitive. This word is added to the sequence: “Once upon a time there was.”

- The model takes this new sequence as input and repeats the process: it predicts the next word (“knight”? “cat”? “day”?), chooses a word, adds it to the sequence…

And it continues, word by word, until it generates a special word that means “end of sentence” or “end of text,” or until it reaches a maximum length!

Conclusion: This Works Really Well!

The power of GPT comes from the combination of several elements:

- The Transformer architecture and the Attention mechanism, which allow it to understand context over very long sentences.

- The layer stacking that allows it to learn increasingly complex representations of language.

- The massive training on gigantic amounts of text, giving it a very broad knowledge of language and the world.

- The word-by-word generation process based on prediction, allowing it to create fluid and varied text.

GPT doesn’t “think”: It is extremely skilled at identifying complex statistical patterns in language and uses them to predict the most likely continuation of a sequence of words.

But the result of this prediction, thanks to the scale of the model and the data used for training, is often text that seems intelligent, relevant, and creative to us!