The ability to understand and explain the decisions made by Artificial Intelligence or Machine Learning models has become essential, as these technologies are increasingly integrated into critical areas such as health, finance and security. Interpretability tools such as SHAP (SHapley Additive exPlanations) are gaining popularity as a result.

SHAP is inspired by the concepts of cooperative game theory, and offers a rigorous and intuitive method for decomposing model predictions into the individual contributions of each feature.

Theoretical foundations of SHAP

To understand what SHAP adds, and why it is effective at interpreting even complex models, it helps to look at its theoretical roots.

Cooperative game theory

This theory, a branch of game theory, focuses on the analysis of strategies for groups of agents who can form coalitions and share rewards. At the heart of this theory is the notion of ‘fair value’, a way of distributing gains (or losses) among participants in a way that reflects their individual contribution. It is in this context that the Shapley Value arises.

Shapley Value

This is a mathematical concept, introduced by Lloyd Shapley in 1953, used to determine the fair share of each player in a cooperative game. It is calculated by considering all possible orderings (permutations) of the players and evaluating the marginal contribution of each player at the moment they join the coalition. In other words, it measures the average contribution of a player to the coalition, taking into account all the possible combinations in which that player could contribute.
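To make the permutation idea concrete, here is a minimal sketch for a three-player game. The players `A`, `B`, `C` and the payoff table `PAYOFFS` are invented for illustration; only the averaging procedure comes from the definition above.

```python
from itertools import permutations

# Hypothetical characteristic function: payoff earned by each coalition.
PAYOFFS = {
    frozenset(): 0,
    frozenset({"A"}): 10,
    frozenset({"B"}): 20,
    frozenset({"C"}): 30,
    frozenset({"A", "B"}): 40,
    frozenset({"A", "C"}): 50,
    frozenset({"B", "C"}): 60,
    frozenset({"A", "B", "C"}): 90,
}


def shapley_values(players, payoff):
    """Average each player's marginal contribution over all join orders."""
    orders = list(permutations(players))
    totals = {p: 0.0 for p in players}
    for order in orders:
        coalition = frozenset()
        for player in order:
            joined = coalition | {player}
            # Marginal contribution of `player` when joining `coalition`
            totals[player] += payoff[joined] - payoff[coalition]
            coalition = joined
    return {p: totals[p] / len(orders) for p in players}


values = shapley_values(["A", "B", "C"], PAYOFFS)
print(values)  # {'A': 20.0, 'B': 30.0, 'C': 40.0}
```

The three shares add up to 90, the payoff of the full coalition, which is the "fair distribution" property in action.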

Link with SHAP

In the context of machine learning, SHAP uses the Shapley Value to assign an “importance value” to each feature of a predictive model. Each feature of a data instance is considered as a “player” in a cooperative game where the “payoff” is the prediction of the model.
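Written out, this correspondence gives the usual Shapley formula applied to features. The notation below is an assumption made for this sketch: $F$ is the set of features, $v(S)$ is the expected model output when only the features in $S$ are known, and $\phi_0 = v(\emptyset)$ is the average prediction.

```latex
\phi_i = \sum_{S \subseteq F \setminus \{i\}}
    \frac{|S|!\,\bigl(|F| - |S| - 1\bigr)!}{|F|!}
    \Bigl[ v\bigl(S \cup \{i\}\bigr) - v(S) \Bigr],
\qquad
f(x) = \phi_0 + \sum_{i \in F} \phi_i
```

The second equality is the additive property: the contributions of all features, added to the average prediction, recover the prediction for the instance $x$.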

How does SHAP work?

At the heart of SHAP is the idea of decomposing a specific prediction into a set of values assigned to each input feature. These values are calculated to reflect the impact of each feature on the difference between the current prediction and the average prediction over the data set. To achieve this, SHAP conceptually considers all possible combinations of features and their contributions to the prediction. Because the number of combinations grows exponentially with the number of features, practical explainers rely on approximations or model-specific algorithms. This process uses the Shapley Value, discussed earlier, to ensure a fair and accurate distribution of impact across features.
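The decomposition can be sketched by brute force on a tiny model. Everything named here is hypothetical: the `model` function, the `background` rows and the `instance`. Filling "unknown" features with values from the background rows is one common convention, assumed for this sketch, and not a description of what the shap library does internally.

```python
from itertools import combinations
from math import factorial


def model(row):
    """Hypothetical scoring model with an interaction term."""
    income, debt, late_payments = row
    return 0.5 * income - 0.3 * debt - 2.0 * late_payments + 0.01 * income * debt


# Hypothetical background data used to estimate the average prediction.
background = [
    (40.0, 10.0, 0.0),
    (60.0, 20.0, 1.0),
    (80.0, 30.0, 2.0),
    (20.0, 40.0, 3.0),
]
instance = (50.0, 35.0, 4.0)


def coalition_value(known, x, data, f):
    """Average prediction when the features in `known` take x's values
    and the remaining features come from each background row in turn."""
    total = 0.0
    for row in data:
        mixed = tuple(x[j] if j in known else row[j] for j in range(len(x)))
        total += f(mixed)
    return total / len(data)


def exact_shap(x, data, f):
    """Exact Shapley values by enumerating every subset of the other features."""
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                known = set(subset)
                gain = (coalition_value(known | {i}, x, data, f)
                        - coalition_value(known, x, data, f))
                phi[i] += weight * gain
    return phi


phi = exact_shap(instance, background, model)
base_value = coalition_value(set(), instance, background, model)  # average prediction
prediction = model(instance)

# The contributions account for the gap between this prediction and the average.
print(abs(base_value + sum(phi) - prediction) < 1e-9)  # True
```

This enumeration is only workable for a handful of features, since the number of subsets doubles with every feature added.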

Let's take an example

Imagine a predictive model used in the financial sector to assess credit risk. SHAP can reveal how features such as credit score, annual income and repayment history contribute to the model’s final decision. If a customer is refused credit, SHAP can indicate which features most influenced this negative decision, making the refusal easier to explain and to review.

Using Python

SHAP is available as a Python library, shap, which gives access to its explainers and visualisations for model interpretability.

Installation

The first step, of course, is to install the library:

pip install shap

Once installed, all you need to do is import it into your environment:

import shap

Data preparation

Suppose you have a Machine Learning model that has already been trained, such as a random forest model for classification (RandomForestClassifier). You will then need a dataset for analysis:

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestClassifier

import pandas as pd

# Load and prepare the data

data = pd.read_csv('my_amazing_dataset.csv')

X = data.drop('target', axis=1)

y = data['target']

# Split into training and test sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Train the model

model = RandomForestClassifier(random_state=42)

model.fit(X_train, y_train)

SHAP analysis

Once the model has been trained, you can use SHAP to analyse the impact of the features. To continue this example, we can use TreeExplainer, the explainer designed for tree-based models.

# Create a TreeExplainer for the trained model

explainer = shap.TreeExplainer(model)

# Compute SHAP Values on the test set

shap_values = explainer.shap_values(X_test)

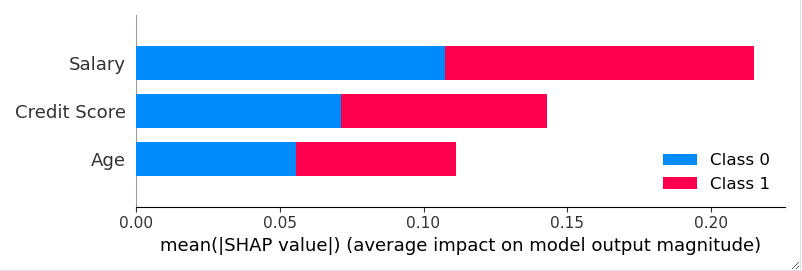

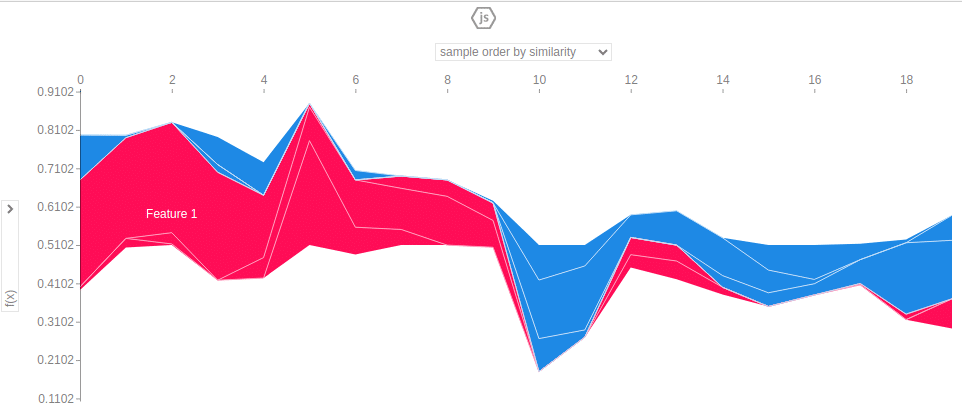

Viewing results

SHAP offers various visualisation options for interpreting the results. For example, for a summary of the importance of each feature:

shap.summary_plot(shap_values, X_test)
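One common way to turn per-instance SHAP values into a global ranking is the mean absolute SHAP value per feature. The sketch below illustrates that idea on a small hand-written matrix. The numbers and feature names are invented, and the helper `mean_abs_importance` is hypothetical, not part of the shap library.

```python
# Hypothetical SHAP matrix: one row per instance, one column per feature.
feature_names = ["credit_score", "annual_income", "repayment_history"]
shap_matrix = [
    [0.20, -0.05, 0.10],
    [-0.30, 0.10, 0.20],
    [0.10, -0.15, -0.20],
    [-0.40, 0.10, 0.10],
]


def mean_abs_importance(matrix, names):
    """Rank features by the mean absolute value of their SHAP column."""
    n_rows = len(matrix)
    scores = {
        name: sum(abs(row[j]) for row in matrix) / n_rows
        for j, name in enumerate(names)
    }
    # Largest average magnitude first
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


ranking = mean_abs_importance(shap_matrix, feature_names)
for name, score in ranking:
    print(f"{name}: {score:.2f}")
```

Taking absolute values matters: a feature that pushes some predictions up and others down would otherwise average out to a misleadingly small score.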

It is also possible to examine how the features push the predictions for a given class away from the average prediction:

# Need to initialize javascript in order to display the plots

shap.initjs()

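# View impact for class 0 (assumes shap_values is a list with one array per class, as returned by older SHAP releases)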

shap.plots.force(explainer.expected_value[0], shap_values[0])

In conclusion

SHAP (SHapley Additive exPlanations) is a powerful and versatile tool for the interpretability of Machine Learning models. Drawing on the principles of cooperative game theory and Shapley Value, it provides a rigorous method for decomposing and understanding the contributions of individual features to a model’s predictions.

The SHAP library in Python is easy to integrate into a data science workflow, as the example above shows. What’s more, with Artificial Intelligence becoming increasingly ubiquitous, the interpretability of models should not be underestimated.