Deep learning is as fascinating as it is intimidating. With its equations, GPUs, and esoteric vocabulary, one might think a doctorate in mathematics is necessary to understand its logic. Yet the principle is simple: learn by example. To see it firsthand — literally — nothing beats the TensorFlow Playground.

This small online tool allows you to manipulate a neural network in real time, observe the reactions, and, most importantly, understand how it learns. Just a few minutes can turn an abstract concept into a concrete experience.

Deep learning in brief

For over a decade, deep learning has dominated image recognition, automatic translation, and text synthesis. Yet the foundational idea dates back to the 1950s: crudely mimicking the functioning of biological neurons. An artificial neuron receives numerical inputs, computes their weighted sum, adds a bias, and applies an activation function. Arranged in successive layers, these neurons gradually transform raw data into representations that make it possible to classify, predict, or generate.
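
To make the description of a neuron concrete, here is a minimal sketch in Python with NumPy. The function name `neuron`, the choice of tanh as activation, and the numeric values are illustrative assumptions, not something taken from a particular library or model.

```python
import numpy as np

def neuron(inputs, weights, bias, activation=np.tanh):
    """Weighted sum of the inputs, plus a bias, passed through an activation."""
    return activation(np.dot(weights, inputs) + bias)

# Illustrative values, not taken from any real model.
x = np.array([1.0, -2.0])   # two numerical inputs
w = np.array([0.5, 0.25])   # one weight per input
b = 0.0                     # bias term

output = neuron(x, w, b)    # tanh(0.5 * 1.0 + 0.25 * (-2.0) + 0.0) = tanh(0.0)
print(output)
```

A layer is simply many such neurons sharing the same inputs, each with its own weights and bias.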

Why “deep”? Because modern networks stack dozens, even hundreds of layers, each capturing a more abstract representation than the previous one: from edges to patterns, from patterns to objects, then from objects to the entire scene. The whole is trained using an optimization method, most often a variant of gradient descent, that adjusts the weights to minimize an error measured on a sample of annotated examples.
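
The training idea can be sketched on the smallest possible model: a single weight fitted by gradient descent. The data, the learning rate, and the mean squared error are assumptions chosen for the illustration; a real network repeats the same loop over thousands or millions of weights.

```python
import numpy as np

# Tiny annotated sample: the targets follow y = 2 * x exactly.
xs = np.array([1.0, 2.0, 3.0])
ys = np.array([2.0, 4.0, 6.0])

w = 0.0               # single weight, arbitrary starting point
learning_rate = 0.05  # illustrative value
losses = []

for step in range(100):
    error = w * xs - ys
    losses.append(np.mean(error ** 2))      # mean squared error on the sample
    gradient = np.mean(2.0 * error * xs)    # derivative of the loss with respect to w
    w -= learning_rate * gradient           # step against the gradient

print(f"learned w = {w:.4f}, first loss = {losses[0]:.3f}, last loss = {losses[-1]:.2e}")
```

The weight moves toward 2, the value that makes the predictions match the annotated targets.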

TensorFlow Playground: a browser lab

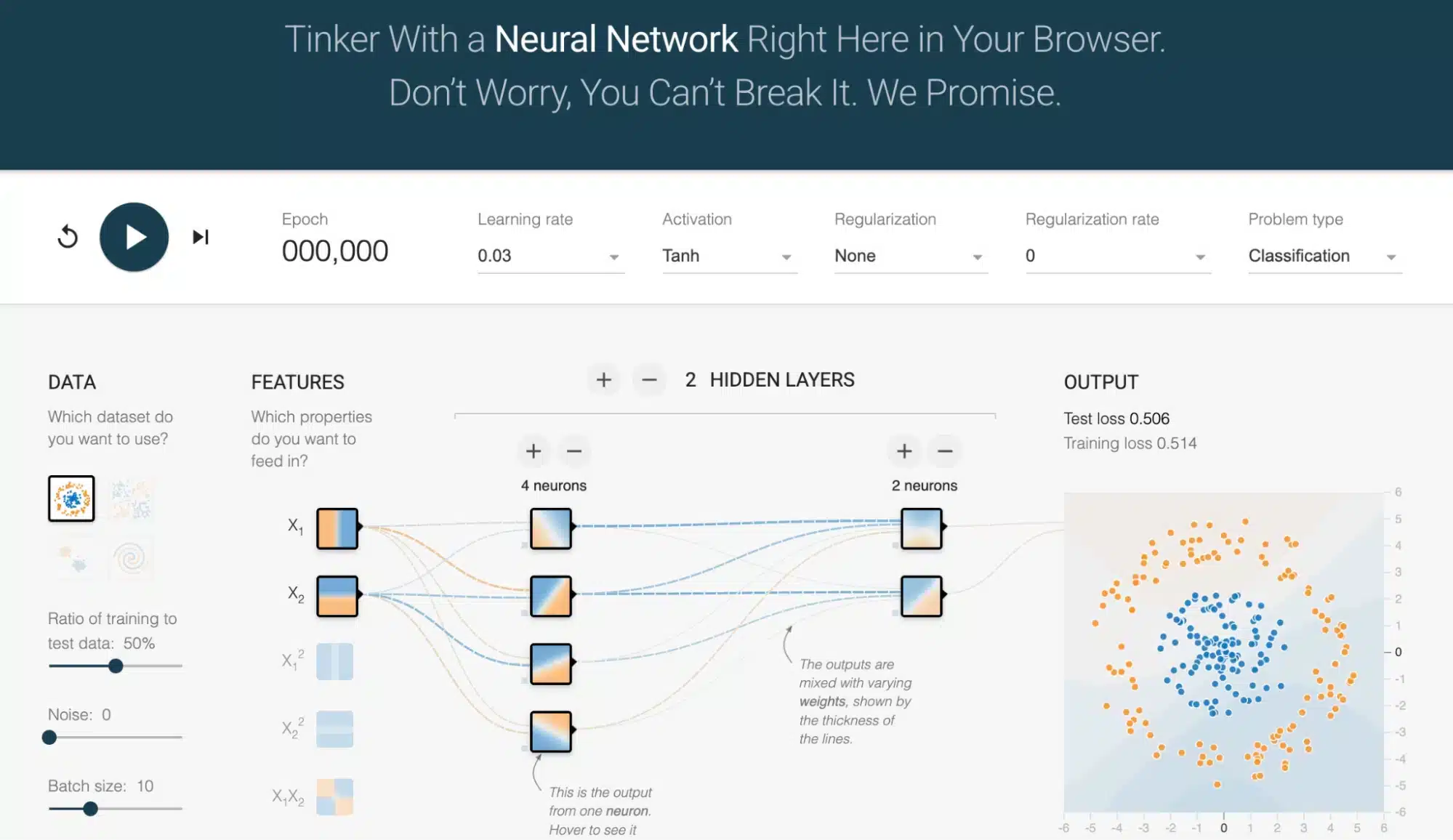

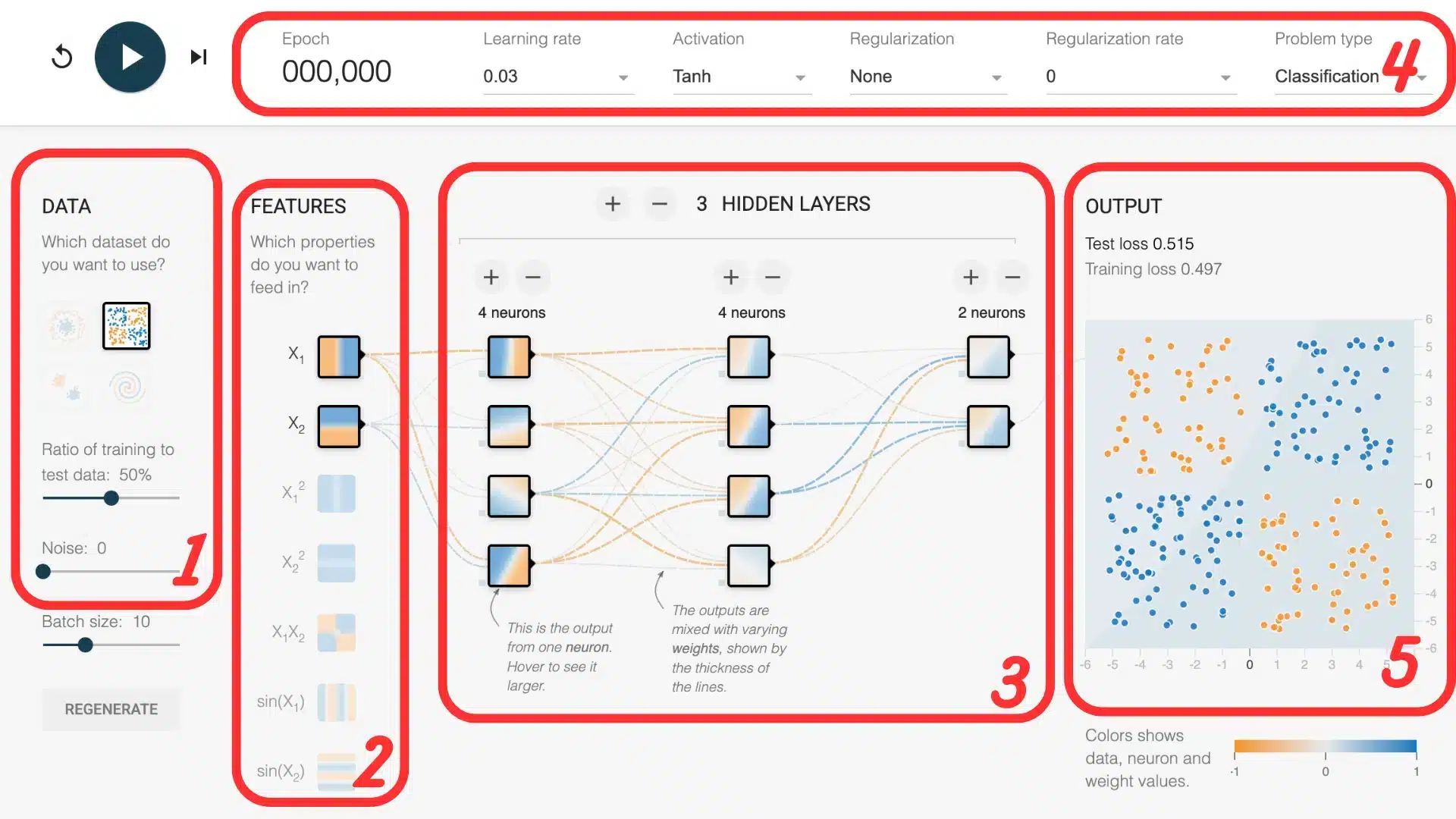

Open TensorFlow Playground and a minimal network appears, with nothing to install. On the left, colored points represent the data; in the center, the neurons are connected by lines (the weights); on the right, the output panel draws the decision boundary. The hyperparameters can be adjusted with a simple click: learning rate, activation function, regularization, batch size, etc. When you press Train, each iteration updates the decision boundary in real time.

Why is this tool so powerful for understanding?

- Instant visualization: the boundary evolves before your eyes, illustrating gradient descent far better than a static graph.

- Safety: no risk of erasing a disk or overheating a GPU.

- Easy sharing: all options are encoded in the URL; just copy it to share an exact configuration.
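
To illustrate the idea of a configuration carried by a link, here is a hedged sketch that reads settings back from a URL. The example link, the assumption that options sit after the `#`, and the parameter names (`activation`, `learningRate`, `networkShape`, `dataset`) are all illustrative; check a link copied from your own session for the actual format.

```python
from urllib.parse import urlsplit, parse_qs

# Hypothetical example link: the parameter names are assumptions for illustration.
url = (
    "https://playground.tensorflow.org/"
    "#activation=tanh&learningRate=0.03&networkShape=4,2&dataset=circle"
)

fragment = urlsplit(url).fragment   # everything after '#'
config = {key: values[0] for key, values in parse_qs(fragment).items()}

# Convert the textual values into usable types.
config["learningRate"] = float(config["learningRate"])
config["networkShape"] = [int(n) for n in config["networkShape"].split(",")]

print(config)
```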

Network anatomy from Playground

1. The datasets

Playground offers four synthetic classification datasets: a linearly separable pair of Gaussian clouds, two non-linear sets (circle and XOR), and the notorious spiral, nicknamed “the snail”. These two-dimensional datasets are simple enough to plot on a plane, yet rich enough to test the power of a deep network.
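
For readers who want similar data outside the browser, the sketch below generates a circle and a two-armed spiral with NumPy. The radii, the number of turns, and the function names are assumptions chosen for the illustration; they are not Playground's own generators.

```python
import numpy as np

rng = np.random.default_rng(0)

def make_circle(n_per_class=100):
    """Inner disk labelled +1, surrounding ring labelled -1."""
    angles = rng.uniform(0.0, 2.0 * np.pi, size=2 * n_per_class)
    radii = np.concatenate([
        rng.uniform(0.0, 2.0, size=n_per_class),   # inner class
        rng.uniform(3.0, 5.0, size=n_per_class),   # outer class
    ])
    points = np.column_stack([radii * np.cos(angles), radii * np.sin(angles)])
    labels = np.concatenate([np.ones(n_per_class), -np.ones(n_per_class)])
    return points, labels

def make_spiral(n_per_class=100, turns=1.75):
    """Two interleaved spiral arms, one per class."""
    t = np.linspace(0.0, 1.0, n_per_class)
    radius = 5.0 * t
    points, labels = [], []
    for label, offset in ((1.0, 0.0), (-1.0, np.pi)):
        angle = turns * 2.0 * np.pi * t + offset   # second arm is rotated by half a turn
        points.append(np.column_stack([radius * np.sin(angle), radius * np.cos(angle)]))
        labels.append(np.full(n_per_class, label))
    return np.vstack(points), np.concatenate(labels)

circle_x, circle_y = make_circle()
spiral_x, spiral_y = make_spiral()
print(circle_x.shape, spiral_x.shape)   # (200, 2) (200, 2)
```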

2. The features

By default, only the coordinates x and y are used as inputs. However, you can enable derived features: x², y², x·y, sin(x), and sin(y). These transformations help the model capture complex patterns. For instance, a circular cloud becomes much easier to separate once x² and y² are available: their sum is the squared distance from the origin, so a boundary that is linear in these features can trace a circle in the plane, even with a very simple network.
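
The effect of squared features can be checked in a few lines. In this sketch the data (an inner disk and an outer ring) and the threshold 6.25 are assumptions picked for the demonstration; the point is only that a rule that is linear in x² and y² separates the two classes perfectly.

```python
import numpy as np

rng = np.random.default_rng(1)

# Synthetic circular data (an assumption for the demo): inner disk vs. outer ring.
n = 200
angles = rng.uniform(0.0, 2.0 * np.pi, size=2 * n)
radii = np.concatenate([rng.uniform(0.0, 2.0, n), rng.uniform(3.0, 5.0, n)])
x, y = radii * np.cos(angles), radii * np.sin(angles)
labels = np.concatenate([np.ones(n), -np.ones(n)])

# Derived features, as when the squared inputs are switched on.
x_sq, y_sq = x ** 2, y ** 2

# Linear rule on the new features: score = 6.25 - 1 * x² - 1 * y².
# 6.25 = 2.5², a radius chosen between the two classes.
scores = 6.25 - (x_sq + y_sq)
predictions = np.where(scores > 0, 1.0, -1.0)

accuracy = np.mean(predictions == labels)
print(accuracy)   # 1.0
```

No straight line in the original (x, y) plane could achieve this, since the inner class is surrounded on every side.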

3. The architecture

The plus and minus buttons above the network let you add layers and adjust the number of neurons in each. A network without a hidden layer is a linear classifier: it can only draw a straight boundary. With one layer of three neurons, the model already captures curves. Three layers of eight neurons can tackle the spiral dataset, but increasing depth further risks overfitting, hence the importance of regularization.
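
The architectures mentioned here can be written down as lists of layer sizes. The sketch below is an assumption-laden illustration (random initial weights, zero biases, tanh on hidden layers, hypothetical function names), meant only to show how the forward pass and the number of parameters grow with depth.

```python
import numpy as np

def init_network(layer_sizes, seed=0):
    """One (weights, biases) pair per connection between consecutive layers."""
    rng = np.random.default_rng(seed)
    return [
        (rng.normal(0.0, 0.5, size=(n_in, n_out)), np.zeros(n_out))
        for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])
    ]

def forward(network, inputs):
    """tanh on hidden layers, raw score on the output layer."""
    activations = inputs
    for index, (weights, biases) in enumerate(network):
        activations = activations @ weights + biases
        if index < len(network) - 1:          # every layer except the last one
            activations = np.tanh(activations)
    return activations

def count_parameters(network):
    return sum(w.size + b.size for w, b in network)

linear = init_network([2, 1])            # no hidden layer
small = init_network([2, 3, 1])          # one hidden layer of three neurons
deep = init_network([2, 8, 8, 8, 1])     # three hidden layers of eight neurons

points = np.array([[0.5, -1.0], [2.0, 3.0]])
print(forward(deep, points).shape)       # (2, 1): one score per input point
for name, net in (("linear", linear), ("small", small), ("deep", deep)):
    print(name, count_parameters(net))   # 3, 13 and 177 parameters
```

The jump from 3 to 177 adjustable numbers is what gives the deeper network room to follow a spiral, and also room to memorize noise.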

4. The hyperparameters

The learning rate controls the magnitude of each update: too large, and the loss oscillates or even diverges; too small, and training crawls. The activation functions (ReLU, tanh, sigmoid) inject the necessary non-linearity; ReLU often converges faster, while tanh sometimes appears more stable. L2 regularization adds a penalty on the size of the weights, which discourages the network from memorizing noise.
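
These effects can be reproduced on a toy problem. The sketch below minimizes a one-dimensional quadratic, used here as a stand-in for a network's loss; the function, the learning rates, and the L2 coefficient are assumptions chosen so that each regime is easy to see.

```python
def gradient_descent(learning_rate, l2=0.0, start=1.0, steps=20):
    """Minimize loss(w) = (w - 3)^2 + l2 * w^2 and return every visited w."""
    w = start
    path = [w]
    for _ in range(steps):
        gradient = 2.0 * (w - 3.0) + 2.0 * l2 * w   # data term + L2 penalty term
        w = w - learning_rate * gradient
        path.append(w)
    return path

too_small = gradient_descent(learning_rate=0.001)   # barely moves from the start
reasonable = gradient_descent(learning_rate=0.1)    # settles near the minimum at w = 3
too_large = gradient_descent(learning_rate=1.1)     # overshoots further at each step
regularized = gradient_descent(learning_rate=0.1, l2=0.5, steps=200)  # pulled toward 0

for name, path in [
    ("too small", too_small),
    ("reasonable", reasonable),
    ("too large", too_large),
    ("with L2", regularized),
]:
    print(f"{name:12s} final w = {path[-1]:.4f}")
```

With the L2 term, the minimum shifts from w = 3 to w = 2: the penalty trades a little accuracy for smaller weights.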

5. Visualizing results

Once training is under way, two elements should be monitored: the decision boundary, which evolves visually in the plane, and the loss curve in the output panel. The boundary shows how the network learns to separate the classes; the more closely it follows the shape of the data, the better the model fits. The loss curve indicates whether the error is decreasing, a good sign that learning is progressing. Playground reports both training loss and test loss; a test loss that rises while the training loss keeps falling signals overfitting.
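
The same monitoring can be done by hand. As a rough sketch, the code below trains a logistic regression (a stand-in for the network, chosen because it fits in a few lines) on two illustrative Gaussian blobs and records the loss on a training half and a held-out half at every epoch. The data, the split, and the learning rate are assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# Two Gaussian blobs (illustrative data), labels +1 and -1.
n = 200
points = np.vstack([rng.normal(2.0, 1.0, (n, 2)), rng.normal(-2.0, 1.0, (n, 2))])
labels = np.concatenate([np.ones(n), -np.ones(n)])

# Shuffle, then hold out half of the points as a test set.
order = rng.permutation(2 * n)
train, test = order[:n], order[n:]

def logistic_loss(weights, bias, x, y):
    margins = y * (x @ weights + bias)
    return np.mean(np.logaddexp(0.0, -margins))   # log(1 + exp(-margin)), computed stably

weights, bias = np.zeros(2), 0.0
learning_rate = 0.1
train_losses, test_losses = [], []

for epoch in range(200):
    train_losses.append(logistic_loss(weights, bias, points[train], labels[train]))
    test_losses.append(logistic_loss(weights, bias, points[test], labels[test]))

    margins = labels[train] * (points[train] @ weights + bias)
    # d(loss)/d(margin) = -sigmoid(-margin), written with tanh for numerical stability
    slope = -0.5 * (1.0 - np.tanh(margins / 2.0))
    weights -= learning_rate * np.mean((slope * labels[train])[:, None] * points[train], axis=0)
    bias -= learning_rate * np.mean(slope * labels[train])

print(f"train loss: {train_losses[0]:.3f} -> {train_losses[-1]:.3f}")
print(f"test loss:  {test_losses[0]:.3f} -> {test_losses[-1]:.3f}")
```

Plotting `train_losses` and `test_losses` against the epoch number gives the hand-made equivalent of the curve you watch during training.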

Two challenges to replicate

All exercise parameters below are already encoded in the links; just click to land on the described configuration.

Challenge 1: First Steps

Link: Challenge – First Steps

Start the training: in a few seconds, the boundary begins to draw a separation into two distinct areas. Then try to reduce the learning rate and observe how the model learns more slowly. Also change the activation function, for example, switching from tanh to ReLU: the speed and shape of convergence may vary, even if the task remains simple. It’s a good first exercise to become accustomed to the parameters without getting lost in complexity.

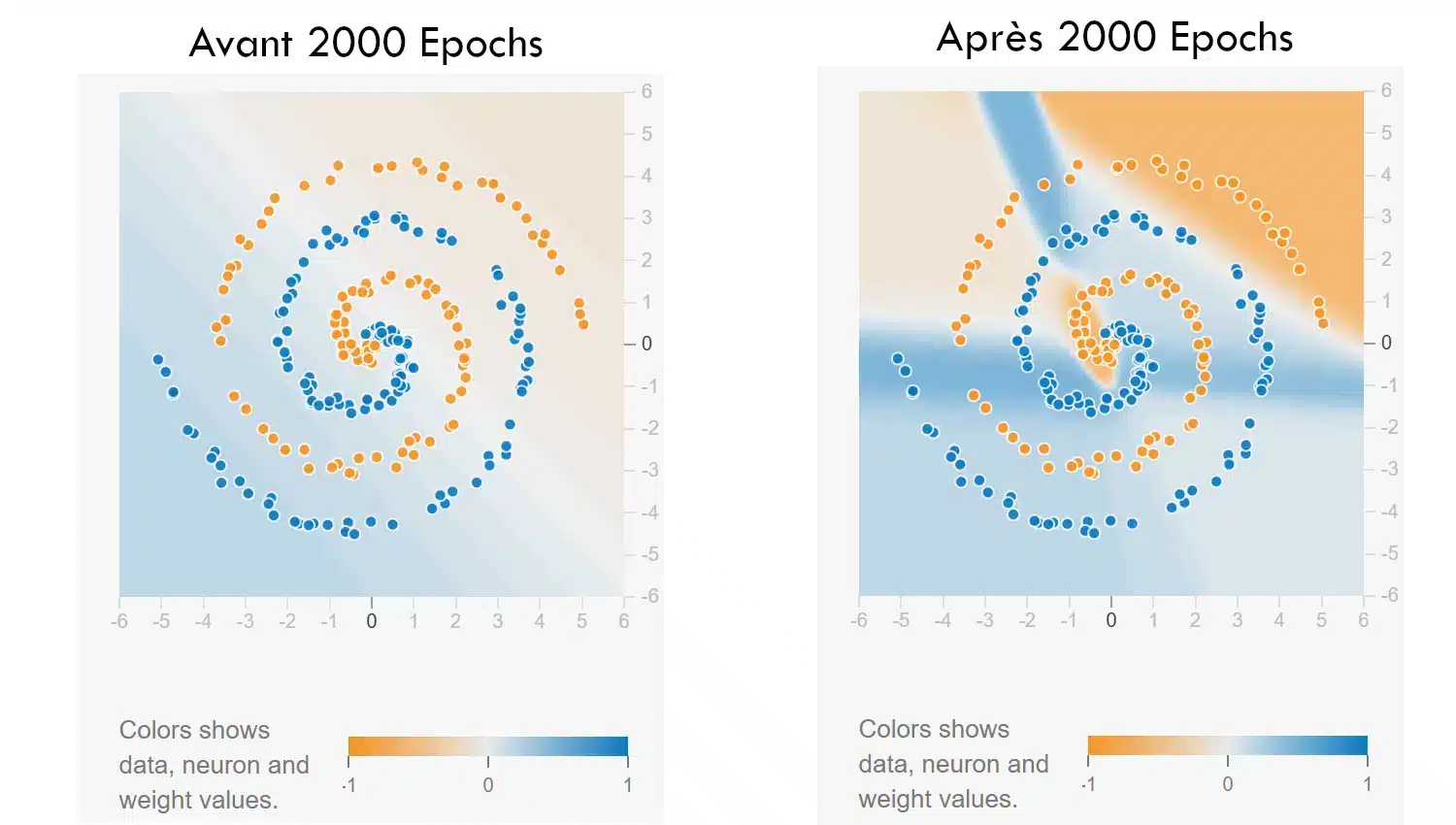

Challenge 2: Spiral

Link: Spiral

In this second exercise, the network must learn to classify a spiral-shaped dataset — a pattern known for its difficulty. The intentionally limited starting configuration (only the features x and y) forces you to experiment with the architecture and hyperparameters to succeed.

Start the training: the boundary is chaotic at first. It’s up to you to find a combination of layers, neurons, activation function, or even regularization that allows the network to follow the curves of the spiral. It’s a good way to see how depth or a small parameter change can make a significant difference.

Bonus difficulty: do not add any derived features. Everything must rely on the model’s structure.

Insights from the Playground

Spending roughly ten minutes in Playground teaches three fundamental lessons:

- The network learns by adjusting its weights to reduce the error; gradient descent is simply an automated loop that measures the error and nudges each weight in the direction that lowers it.

- Non-linearity — whether through features or activations — is crucial as soon as a straight line isn’t enough.

- Hyperparameters matter: a poor learning rate or an oversized architecture can ruin training as surely as a bug in the code.

These lessons are observed rather than inferred: a moving picture fixes in the mind what three pages of algebra convey less clearly.

TensorFlow Playground is not intended to produce industrial models, but to visualize the essence of deep learning: the progressive transformation of a data space under the influence of iterative learning. By reducing the subject to colored points and a few buttons, the tool makes the mechanics accessible to anyone with a browser. From there, making the leap to Keras or PyTorch is largely a change of interface: the concepts stay the same. So, open the page, play for a few minutes, adjust a parameter, observe the result, and watch the theory come to life. Machine learning, however complex, always starts with a first click on Train.