Images contain a wealth of important information. While they are easily discernible to our accustomed eyes, they pose a real challenge in data analysis. The collection of techniques used for this purpose is known as “image processing.” In this article, you will explore the classic algorithms, techniques, and tools for processing data in the form of images.

What is Image Processing ?

As the name suggests, image processing involves working with images, which can encompass various techniques. The final outcome may take the form of another image, a variation of the original, or simply a parameter of the image. This result can then be utilized for in-depth analysis or decision-making.

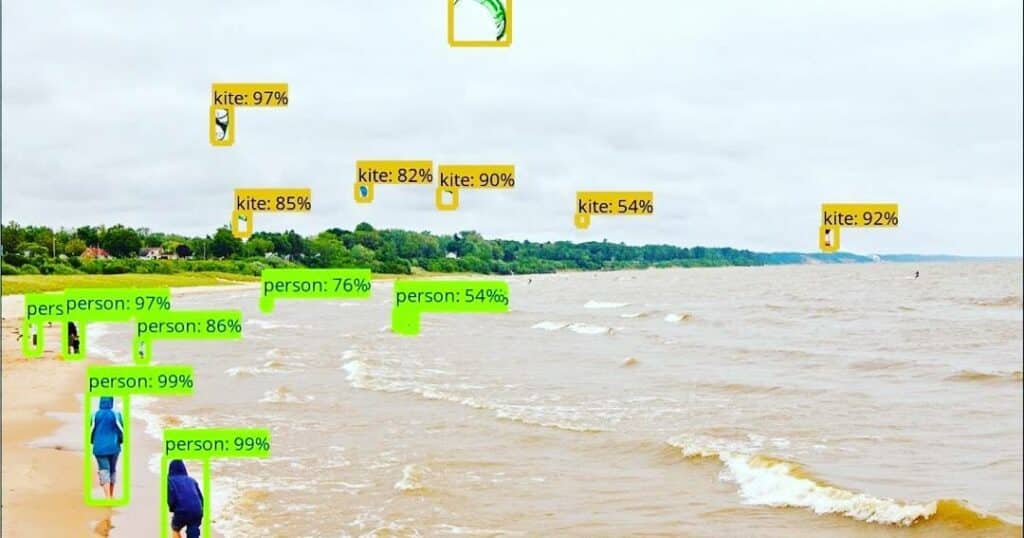

It constitutes the central part of computer vision, playing a crucial role in numerous real-world applications such as robotics, autonomous vehicles, and object detection. Image processing enables us to transform and manipulate thousands of images simultaneously, extracting valuable information from them.

But what is an image?

An image can be represented as a 2D function, F(x, y), where x and y are spatial coordinates. Essentially, it’s a grid of pixels arranged in rows and columns. The value of F at a point x, y is known as the intensity of the image at that point. When x, y, and the amplitude value are finite, it’s referred to as a digital image.

An image can also be represented in 3D, with coordinates x, y, and z. Pixels are then arranged in the form of a matrix. This is what we call an RGB image, where R stands for red, G for green, and B for blue. In the case of grayscale images, there’s only one channel, and z equals 1.

Classical image processing techniques

Historically, images were processed using mathematical analysis methods, and here are a few of them:

- Gaussian Blur: Gaussian blur, or Gaussian smoothing, is the result of applying a Gaussian function to an image, which is essentially a matrix as defined earlier. It is used to reduce image noise and soften details. The visual effect of this blurring technique is akin to looking at an image through a translucent screen. It is sometimes used as a data augmentation technique for deep learning, which we will discuss in more detail below.

- Fourier Transform: The Fourier transform decomposes an image into sinusoidal components. It has multiple applications, including image reconstruction, image compression, and image filtering. Since we’re discussing images, we’ll consider the discrete Fourier transform.

Consider a sine wave; it comprises three elements:

- Amplitude – related to contrast

- Spatial frequency – related to brightness

- Phase – related to color information

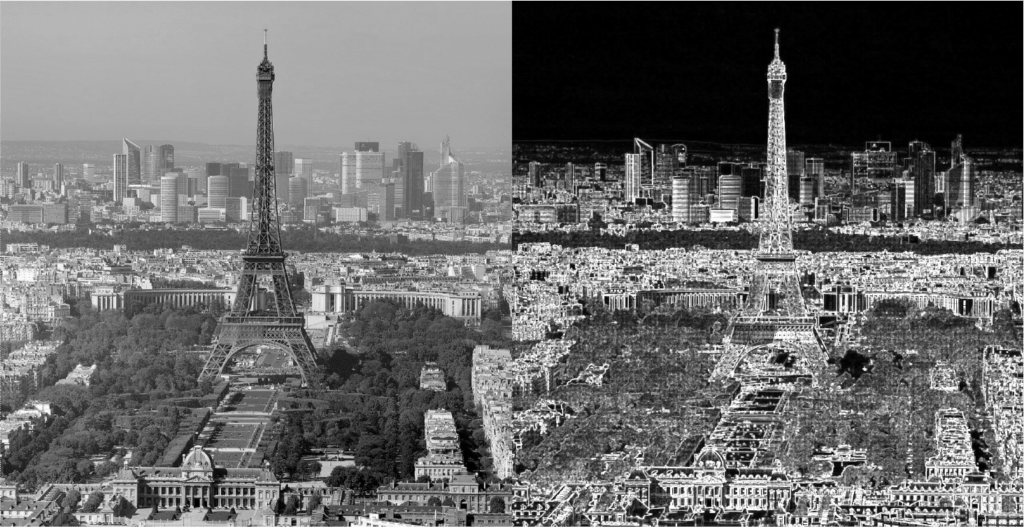

- Edge Detection: Edge detection is an image processing technique used to find the boundaries of objects in images. It works by detecting discontinuities in brightness. More precisely, edges are defined as local maxima of the image gradient, meaning areas where there is a significant change in pixel values. The most common edge detection algorithm is the Sobel operator.

- These historical mathematical analysis methods have laid the foundation for modern image processing and computer vision techniques, enabling a wide range of applications in fields like image recognition, medical imaging, and more.

Image processing with neural networks

The emergence of neural networks and increased computational power has revolutionized image processing. In particular, convolutional neural networks (CNNs), inspired by the previously mentioned techniques, excel in object and person detection and recognition. How do they work?

A Convolutional Neural Network, or CNN for short, consists of three main types of layers:

1. Convolutional Layer (CONV): This layer is responsible for performing the convolution operation. The element involved in the convolution operation is called the kernel/filter (matrix). The kernel performs horizontal and vertical shifts until the entire image is traversed. Its operation is similar to edge detection techniques.

2. Pooling Layer (POOL): This layer is responsible for reducing dimensionality. It helps decrease the computational power required for data processing. There are two types of pooling: Max pooling and Average pooling. Max pooling returns the maximum value from the area covered by the kernel in the image, while average pooling returns the average of all values in the part of the image covered by the kernel.

3. Fully Connected Layer (FC): The fully connected layer is present at the end of CNN architectures. It’s similar to a traditional layer in neural networks and, after applying an activation function, produces the network’s expected output, such as classification.

The addition of non-linear functions (e.g., ReLU – Rectified Linear Unit) within networks or specific architectures allows CNNs to address more complex problems. There are many examples of CNN architectures, including DenseNet, U-Net, and VGG, each tailored to specific tasks and challenges in image processing. These architectures, along with techniques like transfer learning, have significantly improved the accuracy and efficiency of image recognition and analysis.

Generative Adversarial Networks

Labelled data is sometimes too scarce for training complex networks. Generative Adversarial Networks, known as GANs, are particularly popular for this purpose today.

GANs, or Generative Adversarial Networks, consist of two models: the Generator and the Discriminator. The Generator learns to create fake images that appear realistic to deceive the Discriminator, while the Discriminator learns to distinguish fake images from real ones.

In the initial phase, the Generator is not allowed to see real images, which can lead to subpar results. Meanwhile, the Discriminator is exposed to real images mixed with fake ones produced by the Generator and must classify them as real or fake.

To introduce diversity, some noise is added to the Generator, ensuring it generates different examples each time rather than the same type of image repeatedly. Based on the scores predicted by the Discriminator, the Generator strives to improve its results. Over time, the Generator becomes capable of producing images that are increasingly difficult to distinguish, and the Discriminator also improves as it receives more realistic images from the Generator with each iteration.

Popular types of GANs include Deep Convolutional GANs (DCGAN), Conditional GANs (cGAN), StyleGAN, CycleGAN, and more.

GANs are exceptionally well-suited for image generation and manipulation tasks such as aging faces, blending photos, super-resolution, photo painting, and clothing translation.

Conclusion

There are numerous image processing techniques available today, and neural networks play a pivotal role in extracting detailed information and achieving precise conclusions. Python is the preferred language for such tasks, and it offers a range of tools such as OpenCV, Scikit-Image, TensorFlow, and PyTorch.

The training programs offered by DataScientest provide a wealth of tools and resources to learn how to process image data and harness the power of even the most complex neural networks.