For several years, AI has been attracting growing interest thanks to the performance achieved by machine learning and, more particularly, deep learning.

These technologies have played a decisive role in the evolution of natural language processing (NLP). They have helped automate the most common NLP tasks, such as word and sentence classification, text generation and answer extraction, and the same techniques now extend to speech recognition and computer vision.

Today, several open-source libraries provide pre-trained models, enabling data scientists and companies to reuse existing models and save computational cost and time. Hugging Face is one of them.

About Hugging Face Transformers

Hugging Face is a platform for advancing AI through shared collections of models and datasets, enabling users to manage, share and develop their AI models.

Transformers is an open-source, Python-based deep learning library that provides a wide variety of pre-trained models for different types of NLP tasks. Its API gives access to many pre-trained transformer architectures, such as BERT, GPT-2, DistilBERT or BART, which achieve strong results on tasks such as text classification, named entity recognition and text generation.

Hugging Face's pre-trained transformers can be grouped into three categories:

- Autoregressive transformers (GPT-type architecture): focused on predicting the next word in the sentence, they are suitable for generative tasks such as text generation.

- Auto-encoding transformers (BERT-type architecture): better suited to tasks that require an understanding of the full input, such as sentence classification, named entity recognition and, more generally, word classification.

- Sequence-to-sequence transformers (BART/T5 architecture): best suited to tasks that generate new sentences from a given input, such as text summarization, translation or generative question answering.
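To make the three families concrete, here is a minimal sketch that loads one small checkpoint per family through the library's Auto classes. The checkpoint names (gpt2, distilbert-base-uncased, t5-small) are our own illustrative choices, not ones named above, and the first run downloads their weights from the Hub.

```python
from transformers import (
    AutoModelForCausalLM,   # autoregressive (GPT-type): predicts the next token
    AutoModelForMaskedLM,   # auto-encoding (BERT-type): predicts masked tokens from the full context
    AutoModelForSeq2SeqLM,  # sequence-to-sequence (BART/T5-type): encoder + decoder
)

# One illustrative checkpoint per family (our choice, not the article's).
families = {
    "autoregressive": AutoModelForCausalLM.from_pretrained("gpt2"),
    "auto-encoding": AutoModelForMaskedLM.from_pretrained("distilbert-base-uncased"),
    "sequence-to-sequence": AutoModelForSeq2SeqLM.from_pretrained("t5-small"),
}

for family, model in families.items():
    # is_encoder_decoder is only True for the sequence-to-sequence family.
    print(f"{family:22} {type(model).__name__:28} encoder-decoder={model.config.is_encoder_decoder}")
```

Only the sequence-to-sequence model reports an encoder-decoder structure; the other two are single stacks that differ in what they are trained to predict.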

In addition to the official pre-trained models, there are hundreds of sentence-transformer models on the Hugging Face Hub.

What is the point of Hugging Face Transformers?

The aim of Transformers is to facilitate machine learning projects by providing APIs and tools for downloading and training pre-trained models. Using these models reduces computational costs and saves the time and resources needed to train a model from scratch.

Transformer models have rapidly become the dominant architecture for obtaining reliable results on a wide range of NLP tasks.

These models support common tasks in different modalities:

- Text: For everything from classification, information extraction and question answering to the generation and translation of text in over 100 languages.

- Speech: For tasks such as audio classification and automatic speech recognition.

- Vision: For object detection, image classification and segmentation.

- Tabular data: For regression and classification problems.

Transformers also support framework interoperability between PyTorch, TensorFlow and JAX.

This makes it possible to use a different framework at each stage of a model's life: train a model in three lines of code in one framework, and load it for inference in another.

Setting up Transformers

In this section, we’ll put Transformers into practice by learning about the pipeline tool, and then show how this tool facilitates the implementation of NLP and computer vision tasks.

Pipelines

Pipelines are a simple and effective way to abstract away most of the library's complex code, providing an API for performing inference on a variety of tasks, including named entity recognition, sentiment analysis and question answering.

They encapsulate the whole processing chain of each task, such as preprocessing the input (e.g. tokenization), running it through the model and post-processing the model's output.

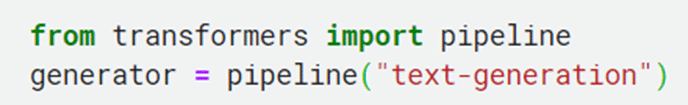
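For a sentiment-analysis pipeline, that chain can be reproduced by hand roughly as follows. This is a sketch, not the pipeline's actual implementation: the checkpoint distilbert-base-uncased-finetuned-sst-2-english is our assumption (as far as we know it is the task's default), the example sentences are ours, and the real pipeline adds extra handling such as batching and device placement.

```python
import torch
from transformers import AutoTokenizer, AutoModelForSequenceClassification

# Assumed checkpoint: a DistilBERT model fine-tuned for binary sentiment (SST-2).
checkpoint = "distilbert-base-uncased-finetuned-sst-2-english"
tokenizer = AutoTokenizer.from_pretrained(checkpoint)
model = AutoModelForSequenceClassification.from_pretrained(checkpoint)

texts = ["I love this library!", "This error message is useless."]

# 1. Preprocessing: text -> token IDs (padding/truncation make the batch rectangular).
inputs = tokenizer(texts, padding=True, truncation=True, return_tensors="pt")

# 2. Model: token IDs -> raw scores (logits), one row per text.
with torch.no_grad():
    logits = model(**inputs).logits

# 3. Post-processing: logits -> probabilities -> human-readable labels.
probs = torch.softmax(logits, dim=-1)
predictions = [
    {"label": model.config.id2label[int(i)], "score": float(p[i])}
    for p, i in zip(probs, probs.argmax(dim=-1))
]
print(predictions)
```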

Using a pipeline is simple: it is instantiated with the pipeline() function, and all you need to specify is the task to run.
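A minimal sketch of that usage, assuming the sentiment-analysis task and an example sentence of our own. Since no model is named, the pipeline falls back to the task's default model.

```python
from transformers import pipeline

# Only the task name is given; the pipeline picks the task's default model.
classifier = pipeline("sentiment-analysis")

result = classifier("Hugging Face pipelines make inference remarkably simple.")
print(result)  # a list with one {"label": ..., "score": ...} dict per input text
```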

The following is a non-exhaustive list of the pipelines available in the Hugging Face Transformers library:

- feature-extraction (to obtain a vector representation of text)

- fill-mask (to predict masked words)

- ner (named entity recognition)

- question-answering (to extract the answer to a question from a given context)

- sentiment-analysis (to classify the sentiment expressed in a text)

- summarization (to summarize text)

- text-generation (to produce new text)

- translation (to translate a text)

- zero-shot-classification (to classify text into classes not seen during training)
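Zero-shot classification is the least self-explanatory entry in this list, so here is a hedged sketch. The model name facebook/bart-large-mnli, the sentence and the candidate labels are illustrative assumptions; the key point is that the labels are supplied at call time through candidate_labels rather than fixed when the model was trained.

```python
from transformers import pipeline

# An NLI-based model scores the text against whatever labels we pass in.
classifier = pipeline("zero-shot-classification", model="facebook/bart-large-mnli")

result = classifier(
    "The new graphics card doubles the frame rate of its predecessor.",
    candidate_labels=["technology", "cooking", "politics"],
)

# Labels come back sorted from most to least likely, with matching scores.
for label, score in zip(result["labels"], result["scores"]):
    print(f"{label:12} {score:.3f}")
```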

Text generation

Nowadays, text generation has become a hot topic with the release and evolution of ChatGPT. In this context, the pipeline lets us use advanced text generation models in a single line of code.

When creating the pipeline, we specify the use case we want (text generation), and the pipeline automatically downloads the default model for that task (in this case GPT-2). We can use other models by specifying the model parameter.

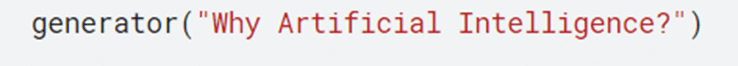
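As a text sketch of this setup, the snippet below creates one pipeline with the task's default model and another pinned to a specific checkpoint through the model parameter. distilgpt2, a smaller distilled version of GPT-2, is our own example of another model, not one the article names.

```python
from transformers import pipeline

# Task only: the pipeline downloads the task's default model (GPT-2).
default_generator = pipeline("text-generation")

# Task + model: use a specific Hub checkpoint instead of the default.
small_generator = pipeline("text-generation", model="distilgpt2")

for name, gen in [("default", default_generator), ("distilgpt2", small_generator)]:
    cfg = gen.model.config
    print(f"{name}: model_type={cfg.model_type}, layers={cfg.num_hidden_layers}")
```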

Now let's generate text by passing a prompt to the pipeline.

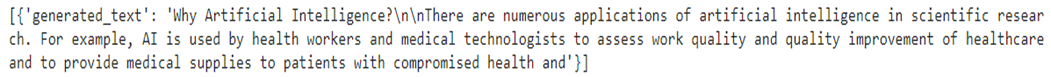

In this example, we asked the model “Why artificial intelligence?”.

The model returns its response under the 'generated_text' key, and executing the code again generates another response. This example shows how quickly Transformers lets us prototype the text-generation part of a chatbot.
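Rather than re-running the code to get another response, the pipeline can return several in one call. In this sketch, the generation settings (max_new_tokens, do_sample, num_return_sequences) and the seed value are our own illustrative choices; set_seed makes the sampled answers reproducible from one run to the next.

```python
from transformers import pipeline, set_seed

generator = pipeline("text-generation", model="gpt2")
set_seed(42)  # fix the random state so sampling gives the same answers each run

prompt = "Why artificial intelligence?"
outputs = generator(
    prompt,
    max_new_tokens=30,       # length of each continuation, in tokens
    do_sample=True,          # sample instead of greedy decoding, so answers differ
    num_return_sequences=3,  # several candidate answers in a single call
)

for i, out in enumerate(outputs, start=1):
    print(f"[{i}] {out['generated_text']}")
```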

Image classification

Image classification is a classic computer vision problem. With the Transformers API, it's easy to load a model for it.

A single line of code is enough to load an image classification model. We can then pass an image from any source (a local file, a URL, etc.) for the model to identify.

For example, we pass an image link and let the model classify it.

The pipeline does this in a single line of code. The model returns five labels, each associated with a probability score. The first has the highest score, so we take “sports car” as our main prediction, and it is indeed correct.
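The original image link is not reproduced here, so this sketch feeds the pipeline a synthetic PIL image instead. The model name google/vit-base-patch16-224 is our assumption, pinned explicitly rather than relying on the task default. With a plain red square the labels themselves are meaningless, but the output has the shape described above: five labels with scores, best first.

```python
from PIL import Image
from transformers import pipeline

# Assumed checkpoint: a ViT model trained on ImageNet-1k classes.
classifier = pipeline("image-classification", model="google/vit-base-patch16-224")

# The pipeline also accepts a local path or a URL; a generated image avoids
# depending on an external image link.
image = Image.new("RGB", (224, 224), color=(200, 30, 30))

predictions = classifier(image)  # top 5 labels by default
for p in predictions:
    print(f"{p['label']:40} {p['score']:.3f}")
```

Passing top_k=3 to the call would return three labels instead of five.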

This example shows that we can implement a sophisticated model quickly and without extensive AI knowledge.

Model specification in a pipeline

In the previous examples, we used the default model for each task, but it is also possible to choose a particular model from the Hub and use it in a pipeline for a given task.
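A minimal sketch of choosing a specific Hub model: the checkpoint nlptown/bert-base-multilingual-uncased-sentiment and the French example review are our own illustrative choices. This model rates text on a one-to-five-star scale, so its labels differ from those of the default sentiment model.

```python
from transformers import pipeline

# Same task as before, but the model argument points at a specific Hub checkpoint.
# This multilingual model predicts a rating from "1 star" to "5 stars".
reviewer = pipeline(
    "sentiment-analysis",
    model="nlptown/bert-base-multilingual-uncased-sentiment",
)

result = reviewer("Ce produit est excellent, je le recommande !")
print(result)
```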

Conclusion

Today, Hugging Face Transformers is one of the most widely adopted libraries, among beginners and professionals alike, for working with machine learning models on NLP tasks. It gives users access to pre-trained models that were previously out of reach because of their technical requirements.