As AI projects grow more complex, the ability to move models between environments and run them there has become crucial. Today's landscape includes many development frameworks, each with its own characteristics and proprietary formats. In this setting, ONNX (Open Neural Network Exchange) has emerged as a standardized, open-source solution for interoperability between these environments.

What is ONNX?

ONNX is an open format for representing machine learning and deep learning models independently of the framework used to build them. A developer can export a model trained in one environment (e.g., PyTorch or TensorFlow) and run it in another with a compatible inference engine such as ONNX Runtime, TensorRT, or OpenVINO.

Originally developed by Facebook and Microsoft in 2017, ONNX is now backed by a broad community of industrial partners (IBM, Intel, AMD, Qualcomm, etc.) and academic contributors. This open-source standard promotes model reuse, speeds up deployment to production, and makes AI systems more portable and agile.

Architecture and Technical Components

The ONNX standard rests on three core components:

- Extensible computation graph

Each model is represented as a directed acyclic graph (DAG): nodes correspond to operations and edges to the data flowing between them, together defining the mathematical transformations applied to the inputs.

- Standard operators

ONNX defines a set of standard operators (convolution, normalization, activation, etc.) whose semantics are the same across frameworks. This gives transferred models predictable behavior without any need to retrain them.

- Normalized data types

The format supports standard data types (floating-point and integer types, multi-dimensional tensors, etc.), so that every execution engine interprets a model's inputs and outputs the same way.
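To make these three components concrete, here is a toy interpreter in plain Python. It is not the ONNX API: `Node`, `OPERATORS`, `check_float_tensor`, and `run_graph` are hypothetical names chosen for illustration. Each node names the tensors it reads and writes (the graph's edges), a small registry plays the role of the standard operator set, and a float-only check stands in for the normalized data types. The nodes are deliberately listed out of order and sorted topologically before execution; note that the ONNX specification itself requires the node list stored in a model file to already be topologically sorted.

```python
from collections import deque
from dataclasses import dataclass

# Hypothetical, simplified stand-in for an ONNX node: an operator type plus
# the names of the tensors it consumes and produces (the graph's edges).
@dataclass
class Node:
    op_type: str
    inputs: list
    outputs: list

# Toy "standard operator set": elementwise ops on flat lists of floats.
OPERATORS = {
    "Add": lambda a, b: [x + y for x, y in zip(a, b)],
    "Mul": lambda a, b: [x * y for x, y in zip(a, b)],
    "Relu": lambda a: [max(0.0, x) for x in a],
}

def check_float_tensor(name, values):
    """Toy data-type check: every input tensor in this sketch must hold only floats."""
    if not all(isinstance(v, float) for v in values):
        raise TypeError(f"tensor {name!r} must contain only floats")

def topological_order(nodes):
    """Kahn's algorithm: order nodes so each producer runs before its consumers."""
    producer = {out: i for i, node in enumerate(nodes) for out in node.outputs}
    indegree = [0] * len(nodes)
    consumers = [[] for _ in nodes]
    for i, node in enumerate(nodes):
        for name in node.inputs:
            if name in producer:  # graph inputs have no producer node
                indegree[i] += 1
                consumers[producer[name]].append(i)
    ready = deque(i for i, d in enumerate(indegree) if d == 0)
    order = []
    while ready:
        i = ready.popleft()
        order.append(nodes[i])
        for j in consumers[i]:
            indegree[j] -= 1
            if indegree[j] == 0:
                ready.append(j)
    if len(order) != len(nodes):
        raise ValueError("graph contains a cycle, so it is not a DAG")
    return order

def run_graph(nodes, feeds):
    """Evaluate the DAG on named input tensors and return every tensor computed."""
    values = {}
    for name, tensor in feeds.items():
        check_float_tensor(name, tensor)
        values[name] = tensor
    for node in topological_order(nodes):
        op = OPERATORS[node.op_type]  # KeyError means an unsupported operator
        values[node.outputs[0]] = op(*(values[n] for n in node.inputs))
    return values

# y = Relu(x * w + b), with the nodes listed in arbitrary order
graph = [
    Node("Relu", ["z"], ["y"]),
    Node("Add", ["xw", "b"], ["z"]),
    Node("Mul", ["x", "w"], ["xw"]),
]
result = run_graph(graph, {"x": [1.0, -2.0], "w": [3.0, 3.0], "b": [0.5, 0.5]})
print(result["y"])  # [3.5, 0.0]
```

A real engine works the same way at a high level, but on typed multi-dimensional tensors and with hundreds of operators, and it can rewrite the graph (for example by fusing nodes) before running it.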

ONNX focuses mainly on the inference phase (running already trained models): it leaves the choice of training framework open and lets inference engines optimize execution for their target hardware.

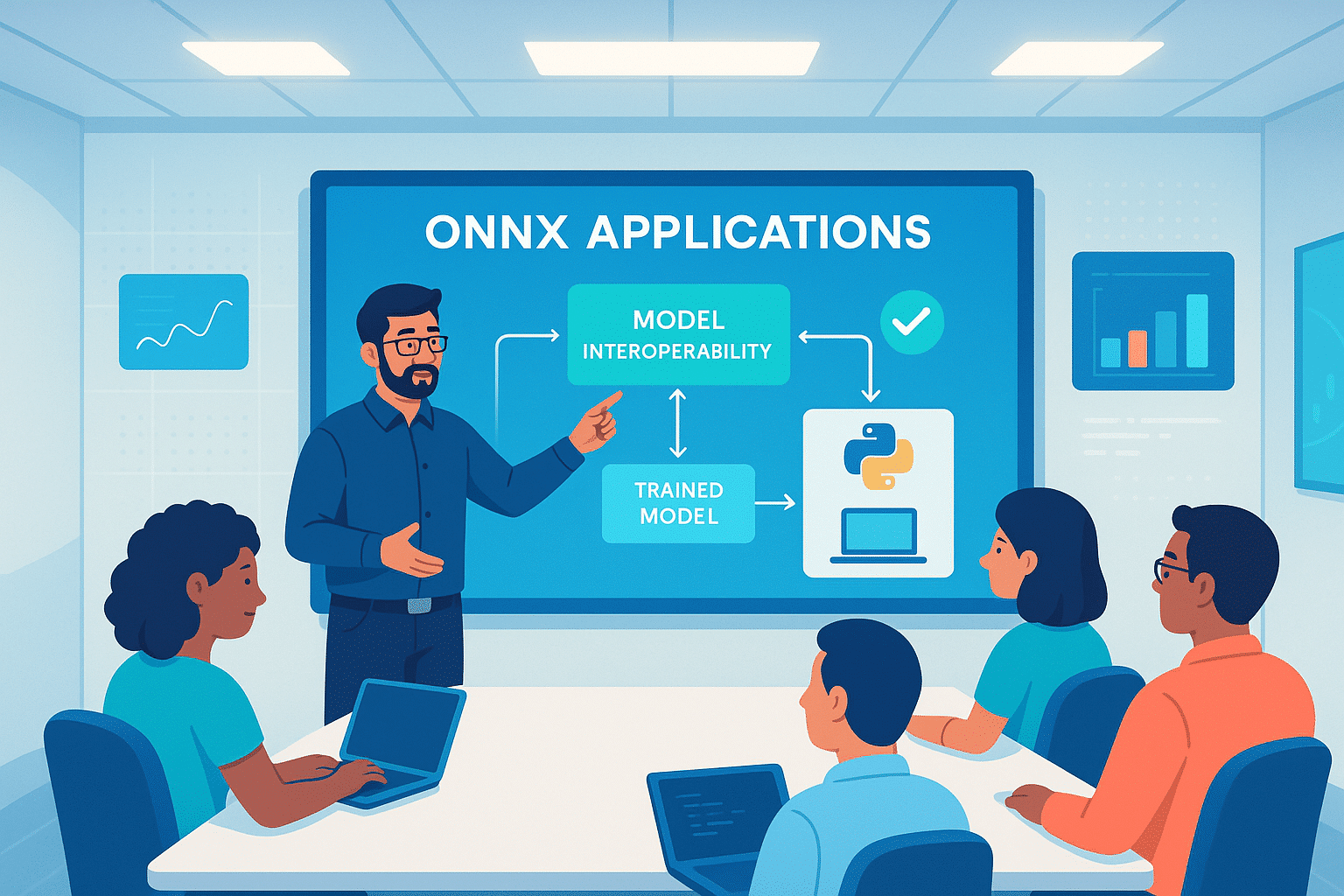

This diagram summarizes the central role of ONNX as a portability layer between model training and deployment on a variety of execution environments.

1. Left Section – Training

The model is first designed and trained in one of the major machine learning or deep learning frameworks. ONNX lets these models be exported to a unified format, which makes them easier to reuse and deploy on other platforms:

- PyTorch: widely used in research and academia, PyTorch is valued for its flexibility, dynamic execution (eager mode), and readable API, which make it a popular choice for rapid prototyping and experimentation.

- TensorFlow: widely used in industry, TensorFlow provides robust infrastructure for large-scale deployment, distributed computing, and optimization on various hardware, notably GPUs and TPUs.

- scikit-learn: a staple for classical machine learning models (regression, decision trees, SVM…), scikit-learn is frequently used in preprocessing or in pipelines combining statistics and supervised learning.

This combination of PyTorch / TensorFlow / scikit-learn covers a vast majority of modern AI use cases, from exploratory prototyping to industrial-scale production deployment. ONNX serves here as a bridge connecting these ecosystems.
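At the heart of any exporter is the translation of framework-specific layers into standard ONNX operators. Real converters (for example `torch.onnx.export` for PyTorch, `tf2onnx` for TensorFlow, and `skl2onnx` for scikit-learn) do this by tracing or inspecting the model, and they also carry over weights, shapes, and types. The sketch below shows only the name-mapping idea, using a hypothetical and heavily simplified table (`LAYER_TO_ONNX_OP` and `export_layers` are illustrative names): models from different frameworks converge on the same operator sequence, and an unsupported layer makes the export fail.

```python
# Hypothetical, heavily simplified mapping from (framework, layer) to ONNX operator.
# Real exporters inspect the whole model and may emit several ops per layer
# (e.g. MatMul + Add instead of a single Gemm for a fully connected layer).
LAYER_TO_ONNX_OP = {
    ("pytorch", "Linear"): "Gemm",
    ("pytorch", "ReLU"): "Relu",
    ("tensorflow", "Dense"): "Gemm",
    ("tensorflow", "ReLU"): "Relu",
    ("scikit-learn", "StandardScaler"): "Scaler",              # ai.onnx.ml domain
    ("scikit-learn", "LinearRegression"): "LinearRegressor",   # ai.onnx.ml domain
}

def export_layers(framework, layers):
    """Translate a framework-specific layer list into framework-neutral op names."""
    ops = []
    for layer in layers:
        op = LAYER_TO_ONNX_OP.get((framework, layer))
        if op is None:
            raise NotImplementedError(f"no ONNX mapping for {framework} layer {layer!r}")
        ops.append(op)
    return ops

torch_ops = export_layers("pytorch", ["Linear", "ReLU", "Linear"])
keras_ops = export_layers("tensorflow", ["Dense", "ReLU", "Dense"])
print(torch_ops == keras_ops)  # True: both become ['Gemm', 'Relu', 'Gemm']
print(export_layers("scikit-learn", ["StandardScaler", "LinearRegression"]))
```

The failure path matters in practice: a model that uses a custom layer with no ONNX equivalent cannot be exported until a mapping (or a custom operator) is provided.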

2. Center – ONNX Format

The central ONNX block in the diagram is the point where these ecosystems converge. It acts as a universal abstraction layer, encapsulating the model in a format that does not depend on any specific framework. This portability rests on three elements: a computation graph structured as a DAG, which engines can analyze and optimize; a set of standardized operators with well-defined semantics; and formally specified data types that execution engines can map onto their hardware. The result is an interoperable, framework-agnostic representation that is ready to deploy on a wide range of platforms.
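The third element, formally specified data types, means in practice that a model declares the element type and shape of each input, and a shape dimension may be symbolic (ONNX allows a named dimension such as a batch size instead of a fixed number). A consumer can therefore check a request against the declared signature before running the model. In this sketch the signature is written as plain Python tuples; `SIGNATURE` and `validate_inputs` are hypothetical names, and in a real ONNX file this information lives in the graph's typed input declarations.

```python
# Hypothetical declared input signature: name -> (element type, shape).
# An int is a fixed dimension; a string is a symbolic one (like ONNX's dim_param),
# which may take any size but must be consistent wherever the same symbol appears.
SIGNATURE = {
    "images": ("float32", ("batch", 3, 224, 224)),
    "scales": ("float32", ("batch",)),
}

def validate_inputs(signature, feeds):
    """Check each feed's (dtype, shape) against the declared signature.

    feeds maps input name -> (dtype, shape). Returns the resolved symbolic
    dimensions, or raises ValueError describing the first mismatch.
    """
    bindings = {}
    missing = set(signature) - set(feeds)
    if missing:
        raise ValueError(f"missing inputs: {sorted(missing)}")
    for name, (dtype, shape) in feeds.items():
        if name not in signature:
            raise ValueError(f"unexpected input {name!r}")
        want_dtype, want_shape = signature[name]
        if dtype != want_dtype:
            raise ValueError(f"{name}: expected {want_dtype}, got {dtype}")
        if len(shape) != len(want_shape):
            raise ValueError(f"{name}: expected rank {len(want_shape)}, got {len(shape)}")
        for want, got in zip(want_shape, shape):
            if isinstance(want, str):  # symbolic dimension: bind it, or compare
                if bindings.setdefault(want, got) != got:
                    raise ValueError(f"{name}: '{want}' is {bindings[want]} elsewhere, got {got}")
            elif want != got:
                raise ValueError(f"{name}: expected dimension {want}, got {got}")
    return bindings

print(validate_inputs(SIGNATURE, {
    "images": ("float32", (8, 3, 224, 224)),
    "scales": ("float32", (8,)),
}))  # {'batch': 8}
```

Resolving symbolic dimensions up front is what lets one exported model serve different batch sizes while still rejecting inconsistent requests.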

3. Right Section – Multi-platform Execution

Once exported, the ONNX model can be deployed in the cloud, on premises, at the edge, or on mobile devices. It runs on optimized inference engines such as ONNX Runtime, TensorRT, or OpenVINO, and can be integrated into applications written in languages such as Python, C++, Java, or JavaScript. Decoupling training from execution gives teams flexibility over where models run, while each backend applies its own hardware-specific optimizations to keep performance high.
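The decoupling between training and execution comes down to one contract: the training side writes a self-describing model file, and the execution side needs nothing except that file and implementations of the standard operators. The toy version below illustrates that contract in plain Python. JSON stands in for the Protocol Buffers encoding that real `.onnx` files use, `load_and_run` is a hypothetical name rather than part of any runtime's API, and trained weights travel inside the file as initializers, mirroring how ONNX stores them.

```python
import json

# --- Training side (hypothetical exporter output) ---
# JSON stands in for the real ONNX file, which is a Protocol Buffers message.
# Node order is already topological, as the ONNX spec requires for model files.
model_file = json.dumps({
    "inputs": ["x"],
    "outputs": ["y"],
    "initializers": {"w": [2.0, 2.0], "b": [1.0, -5.0]},  # trained weights
    "nodes": [
        {"op_type": "Mul", "inputs": ["x", "w"], "outputs": ["xw"]},
        {"op_type": "Add", "inputs": ["xw", "b"], "outputs": ["z"]},
        {"op_type": "Relu", "inputs": ["z"], "outputs": ["y"]},
    ],
})

# --- Execution side: knows only the standard operators, not the framework ---
OPERATORS = {
    "Add": lambda a, b: [p + q for p, q in zip(a, b)],
    "Mul": lambda a, b: [p * q for p, q in zip(a, b)],
    "Relu": lambda a: [max(0.0, p) for p in a],
}

def load_and_run(serialized, feeds):
    """Deserialize the model and run its nodes in the stored (topological) order."""
    model = json.loads(serialized)
    values = {**model["initializers"], **feeds}
    for node in model["nodes"]:
        op = OPERATORS[node["op_type"]]
        values[node["outputs"][0]] = op(*(values[n] for n in node["inputs"]))
    return {name: values[name] for name in model["outputs"]}

print(load_and_run(model_file, {"x": [3.0, 1.0]}))  # {'y': [7.0, 0.0]}
```

Because the file, not the training code, is the contract, a runtime written in C++, Java, or JavaScript could consume the same file just as well.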

ONNX Runtime: Optimized Execution Engine

ONNX Runtime is a widely used, open-source execution engine for ONNX models, developed by Microsoft. It is designed for performance, with optimizations tailored to hardware architectures (CPU, GPU, NPU); it is cross-platform (Windows, Linux, macOS, Android, iOS, web); and it offers APIs for several languages, including Python, C++, C#, and Java. It is built for fast inference with a low memory footprint, which makes it well suited to production environments and embedded devices.
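In ONNX Runtime, hardware-specific acceleration comes from execution providers: a session is created with a priority-ordered list of providers, and parts of the graph that a provider cannot handle fall back to the next one, ultimately the default CPU provider. The helper below builds such a list from what the installed build reports. `choose_providers` and the `PREFERRED` ordering are assumptions for illustration; `onnxruntime.get_available_providers()` and the `providers` argument of `onnxruntime.InferenceSession` are the real entry points, shown only in comments (with a hypothetical `model.onnx` path) so the sketch runs without the package installed.

```python
# Preference order is an assumption for a GPU-equipped server; adjust per target.
PREFERRED = [
    "TensorrtExecutionProvider",
    "CUDAExecutionProvider",
    "OpenVINOExecutionProvider",
    "CPUExecutionProvider",
]

def choose_providers(preferred, available):
    """Keep the preferred providers this build offers, in priority order,
    and always end with the CPU provider as a universal fallback."""
    chosen = [p for p in preferred if p in available and p != "CPUExecutionProvider"]
    chosen.append("CPUExecutionProvider")
    return chosen

# With the real package this would typically be used as:
#   import onnxruntime as ort
#   providers = choose_providers(PREFERRED, ort.get_available_providers())
#   session = ort.InferenceSession("model.onnx", providers=providers)
print(choose_providers(PREFERRED, ["CUDAExecutionProvider", "CPUExecutionProvider"]))
# ['CUDAExecutionProvider', 'CPUExecutionProvider']
```

Keeping the CPU provider last means the same deployment code works unchanged on a machine without any accelerator.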

Industrial Use Cases

ONNX offers several practical advantages, starting with interoperability between data science and engineering teams: researchers can develop models in PyTorch, for example, while the product team integrates them into optimized backends.

It also facilitates cross-platform deployment, allowing the same model to run on diverse platforms like Azure, AWS, Android, or even in connected vehicles.

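Finally, because the same model file can run on different inference engines, ONNX makes it possible to evaluate a model independently of the framework it was trained in and to check that its outputs stay consistent across engines, which helps verify its robustness, stability, and accuracy.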
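One way to run such a cross-engine check is to feed identical inputs to two engines (for example the original framework and ONNX Runtime, or ONNX Runtime and TensorRT) and compare the outputs within a tolerance, since different kernels rarely produce bit-identical floating-point results. The helper below applies the same form of check as `numpy.allclose`; the function name, the default tolerances, and the sample values are assumptions for illustration, not measured results.

```python
def compare_outputs(reference, candidate, rtol=1e-5, atol=1e-6):
    """Elementwise check of the form used by numpy.allclose:
    |candidate - reference| <= atol + rtol * |reference|.
    Returns (all_close, max_abs_diff)."""
    if len(reference) != len(candidate):
        raise ValueError("outputs have different lengths")
    max_diff = 0.0
    all_close = True
    for ref, cand in zip(reference, candidate):
        diff = abs(cand - ref)
        max_diff = max(max_diff, diff)
        if diff > atol + rtol * abs(ref):
            all_close = False
    return all_close, max_diff

# Hypothetical outputs of the same model on two engines, for the same input.
engine_a = [0.125, 0.5, 0.375]
engine_b = [0.1250001, 0.5, 0.3749999]
ok, worst = compare_outputs(engine_a, engine_b)
print(ok)  # True
```

Reporting the largest absolute difference alongside the pass/fail flag makes it easier to tell a harmless rounding gap from a genuine conversion error.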

Conclusion

ONNX has become a technical cornerstone of AI interoperability. Thanks to its standardized format, it simplifies the transition from research to production, reduces dependence on proprietary tools, and encourages large-scale model reuse.

At a time when AI architectures are evolving rapidly, ONNX is a strategic technology choice for any organization that wants to industrialize its AI solutions efficiently.