What is a pipeline?

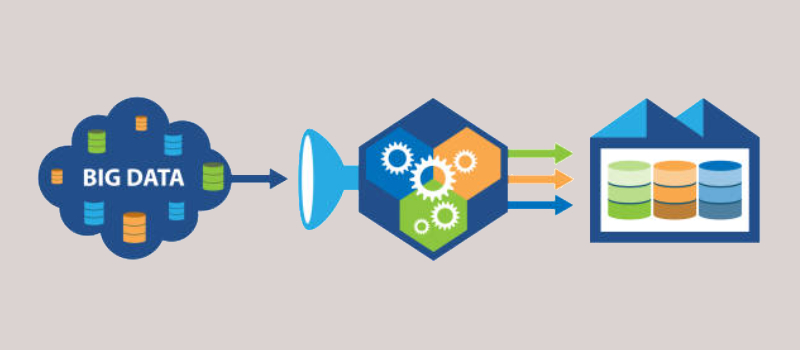

A pipeline is a set of processes and tools used to collect raw data from multiple sources, analyze it and present the results in an understandable format. Companies use data pipelines to answer specific business questions and make strategic decisions based on real data. All available data sets (internal or external) are analyzed to obtain this information.

For example, your sales team wants to set realistic targets for the next quarter. The pipeline enables them to gather data from customer surveys or feedback, order history, industry trends, etc. Robust analysis tools will then enable an in-depth study of the data, identifying key trends and patterns. Teams can then create specific, data-driven objectives that will increase sales.

Data Science pipeline vs. ETL pipeline

Although the terms “Data Science pipelines” and “ETL pipelines” both refer to the process of transferring data from one system to another, there are key differences between the two:

The ETL pipeline stops when the data is loaded into a data warehouse or database. The Data Science pipeline does not stop at this stage and includes additional steps such as Feature Engineering or Machine Learning.

ETL pipelines always involve a data transformation step (ETL stands for Extract, Transform, Load), whereas many steps of a Data Science pipeline are carried out on raw data.

Data Science pipelines generally run in real time, whereas ETL pipelines transfer data in batches or at regular intervals.
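The first difference above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the order records, the `orders` table, and the function names are hypothetical, and an in-memory SQLite database stands in for a real data warehouse. The ETL part ends at `load`, while the Data Science part keeps going with a feature engineering step.

```python
import sqlite3

# Hypothetical raw order records, as they might arrive from a source system.
RAW_ORDERS = [
    {"customer": " Alice ", "amount": "120.50"},
    {"customer": "bob", "amount": "80"},
    {"customer": "Alice", "amount": "40.00"},
]


def extract():
    """Extract: return the raw records unchanged."""
    return list(RAW_ORDERS)


def transform(rows):
    """Transform: normalize customer names and convert amounts to floats."""
    return [(r["customer"].strip().title(), float(r["amount"])) for r in rows]


def load(rows, conn):
    """Load: write the transformed rows into a table (our stand-in warehouse)."""
    conn.execute("CREATE TABLE IF NOT EXISTS orders (customer TEXT, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)
    conn.commit()


def run_etl(conn):
    """An ETL pipeline ends once the data is loaded."""
    load(transform(extract()), conn)


def build_features(conn):
    """Feature engineering: total spend and order count per customer."""
    cursor = conn.execute(
        "SELECT customer, SUM(amount), COUNT(*) FROM orders "
        "GROUP BY customer ORDER BY customer"
    )
    return {name: {"total_spend": total, "n_orders": n} for name, total, n in cursor}


conn = sqlite3.connect(":memory:")
run_etl(conn)                    # the ETL pipeline stops here
features = build_features(conn)  # the Data Science pipeline continues
print(features)
```

In a real project the features would then feed a Machine Learning model; here they are simply printed.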

Why is the Data Science pipeline important?

Companies create billions of pieces of data every day, and much of it contains valuable information. The Data Science pipeline makes the most of this information by bringing together data from all teams, cleansing it, and presenting it in an easily digestible way. This enables you to make rapid, data-driven decisions.

Data Science pipelines enable you to avoid the tedious and error-prone process of manual data collection. By using intelligent data ingestion tools (such as Talend or Fivetran), you’ll have constant access to clean, reliable, and up-to-date data, essential for staying ahead of the competition.

Benefits of Data Science Pipelines

- Increase agility to meet changing business needs and customer preferences.

- Simplify access to company and customer information.

- Accelerate decision-making.

- Eliminate data silos and bottlenecks that delay action and waste resources.

- Simplify and accelerate the data analysis process.

How does a Data Science pipeline work?

Before moving raw data into the pipeline, it’s essential to identify the specific questions you want the data to answer. This helps users to focus on the data of interest in order to obtain the appropriate information.

The Data Science pipeline is made up of several stages, including:

Obtaining the data

This is where data from internal, external, and third-party sources is collected and transformed into a usable format (XML, JSON, CSV, etc.).
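As a rough illustration of this stage, the sketch below reads orders from a CSV source and a JSON source and maps both onto one common record layout. The payloads, field names (`order_id`, `client`, `total`, and so on), and helper functions are all hypothetical; real sources would be files, APIs, or databases rather than inline strings.

```python
import csv
import io
import json

# Hypothetical payloads standing in for two different sources.
CSV_SOURCE = "order_id,customer,amount\n1,Alice,120.50\n2,Bob,80\n"
JSON_SOURCE = '[{"id": 3, "client": "Carol", "total": 42.0}]'


def read_csv_orders(text):
    """Parse CSV text into records with a common layout."""
    reader = csv.DictReader(io.StringIO(text))
    return [
        {
            "order_id": int(row["order_id"]),
            "customer": row["customer"],
            "amount": float(row["amount"]),
        }
        for row in reader
    ]


def read_json_orders(text):
    """Parse JSON text, renaming its fields to the same common layout."""
    return [
        {
            "order_id": int(item["id"]),
            "customer": item["client"],
            "amount": float(item["total"]),
        }
        for item in json.loads(text)
    ]


# Both sources now share one schema and can be processed together.
orders = read_csv_orders(CSV_SOURCE) + read_json_orders(JSON_SOURCE)
for order in orders:
    print(order)
```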

Data cleansing

This is the most time-consuming stage of the process. Data may contain anomalies such as duplicate records, missing values, or irrelevant information, which need to be cleaned up before the data can be analyzed or visualized.

This step can be divided into two parts:

- Examination of the data to identify errors, missing values, or corrupted records.

- Data cleansing, which involves filling in gaps, correcting errors, removing duplicates, and deleting irrelevant records or information.
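The two parts above might look like the following minimal sketch. The records and the cleansing rules are assumptions made for the example: a repeated `order_id` counts as a duplicate, a record without a customer is treated as unusable, and a missing amount is filled with the mean of the known amounts. Other rules may suit your data better.

```python
from statistics import mean

# Hypothetical records containing the typical anomalies.
RECORDS = [
    {"order_id": 1, "customer": "Alice", "amount": 120.5},
    {"order_id": 2, "customer": "Bob", "amount": None},     # missing value
    {"order_id": 1, "customer": "Alice", "amount": 120.5},  # duplicate
    {"order_id": 3, "customer": "", "amount": 60.0},        # no customer
    {"order_id": 4, "customer": "Dan", "amount": 90.0},
]


def examine(records):
    """Part 1: count the problems without modifying anything."""
    seen, duplicates = set(), 0
    for r in records:
        if r["order_id"] in seen:
            duplicates += 1
        seen.add(r["order_id"])
    return {
        "duplicates": duplicates,
        "missing_amount": sum(r["amount"] is None for r in records),
        "missing_customer": sum(not r["customer"] for r in records),
    }


def cleanse(records):
    """Part 2: drop duplicates and unusable rows, then fill missing amounts."""
    kept, seen = [], set()
    for r in records:
        if r["order_id"] in seen or not r["customer"]:
            continue
        seen.add(r["order_id"])
        kept.append(dict(r))  # copy so the input stays untouched
    # Fill gaps with the mean of the amounts that are known.
    fill = mean(r["amount"] for r in kept if r["amount"] is not None)
    for r in kept:
        if r["amount"] is None:
            r["amount"] = fill
    return kept


report = examine(RECORDS)
clean = cleanse(RECORDS)
print(report)
print(clean)
```

Keeping the examination separate from the cleansing makes it possible to log what was wrong with the data before anything is changed.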

Data exploration and modeling

Once data has been thoroughly cleansed, it can then be used to identify patterns. This is where Machine Learning tools come in. These tools will help you find patterns and apply specific rules to data or data models. These rules can then be tested on sample data to determine how performance, revenue, or growth would be affected.
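To make this concrete, here is a small sketch that fits a rule to training data and then tests it on held-out sample data. A simple least-squares line stands in for a Machine Learning tool, and the advertising spend and revenue figures are invented for the example.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def mean_absolute_error(slope, intercept, xs, ys):
    """Average distance between predictions and observed values."""
    return sum(abs(slope * x + intercept - y) for x, y in zip(xs, ys)) / len(xs)


# Hypothetical data: advertising spend (x) and revenue (y), arbitrary units.
train_x, train_y = [1, 2, 3, 4, 5], [2.1, 3.9, 6.2, 7.8, 10.0]
test_x, test_y = [6, 7], [12.1, 13.8]

# Learn the rule on the training data, then check it on the sample data.
slope, intercept = fit_line(train_x, train_y)
error = mean_absolute_error(slope, intercept, test_x, test_y)

print(f"revenue = {slope:.2f} * spend + {intercept:.2f}")
print(f"mean absolute error on sample data: {error:.3f}")
```

A low error on the held-out sample suggests the rule generalizes; a high one suggests the pattern found in the training data does not hold more broadly.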

Data interpretation

The aim of this step is first to identify the insights in your results and relate them back to the underlying data. You can then communicate your results to business leaders or colleagues using graphs, dashboards, or reports.
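Dashboards and charting libraries are the usual tools here, but the idea can be sketched with nothing more than a plain-text bar chart. The `text_bar_chart` helper and the regional sales figures are hypothetical, and the sketch assumes all values are positive.

```python
def text_bar_chart(totals, width=20):
    """Render totals as a plain-text bar chart, largest value first."""
    peak = max(totals.values())
    lines = []
    for label, value in sorted(totals.items(), key=lambda kv: kv[1], reverse=True):
        # Scale each bar relative to the largest value.
        bar = "#" * round(width * value / peak)
        lines.append(f"{label:<8}{bar} {value:.0f}")
    return "\n".join(lines)


# Hypothetical result of an analysis: sales per region.
SALES = {"West": 100.0, "North": 400.0, "South": 200.0}

chart = text_bar_chart(SALES)
print(chart)
```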

Data review

As business requirements change, or as more data becomes available, it’s important to review your model periodically and make revisions as necessary.
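One possible way to automate part of this review is to compare the model's error on recent data with its error at the time it was built. The sketch below assumes a hypothetical model, invented data points, and an arbitrary tolerance of 1.5 times the baseline error; the right threshold depends on your use case.

```python
def mean_absolute_error(model, data):
    """Average absolute difference between predictions and observations."""
    return sum(abs(model(x) - y) for x, y in data) / len(data)


def needs_revision(model, baseline_error, new_data, tolerance=1.5):
    """Flag the model when its error on new data exceeds tolerance * baseline."""
    return mean_absolute_error(model, new_data) > tolerance * baseline_error


def model(x):
    """Hypothetical model produced earlier in the pipeline."""
    return 2.0 * x + 1.0


# Error measured on the data available when the model was built.
baseline_error = mean_absolute_error(model, [(1, 3.1), (2, 4.9), (3, 7.2)])

recent_stable = [(4, 9.1), (5, 10.9)]    # still follows the old pattern
recent_drifted = [(4, 11.0), (5, 13.5)]  # the relationship has changed

print(needs_revision(model, baseline_error, recent_stable))
print(needs_revision(model, baseline_error, recent_drifted))
```

Running such a check on a schedule turns the periodic review into a routine part of the pipeline rather than an occasional manual task.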

Conclusion

In this article, we have described the use of pipelines in the Data industry. As a Data Engineer or Analytics Engineer, you need to build and maintain data pipelines to guarantee the quality and availability of data, whether for creating Machine Learning models or for a Business Intelligence approach.