Apache Oozie is an open-source workflow scheduling tool originally developed by Yahoo! in 2007 to facilitate the coordination of Hadoop jobs. Oozie was accepted as an Apache Incubator project in 2011, and promoted to an Apache Top-Level project in 2012.

Since its inception, the project has continued to evolve and gain new features. Version 5.0.0, released in 2018, was a major release that brought many improvements, including support for long-term planning and for SSL certificates.

Apache Oozie is now widely used in many companies for coordinating Hadoop jobs and workflows.

How does Apache Oozie work?

Apache Oozie is a scheduling system designed to manage and execute Hadoop jobs in a distributed environment. You can create pipelines by combining different jobs, such as Hive, MapReduce or Pig.

As an open-source Java web application, Oozie is responsible for triggering your various workflows. It detects task completion through a combination of callbacks and polling. When Oozie starts a task, it gives the task a unique HTTP callback URL, and the task invokes this URL as soon as it completes. If the task fails to invoke the callback URL, Oozie can poll the task to check whether it has completed.
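The callback-then-poll principle described above can be sketched in a few lines of Python. This is a toy simulation, not Oozie's implementation: the `Scheduler` and `Task` classes, the `calls_back` flag and the callback URL format are all hypothetical, and time is modelled as simple ticks rather than real HTTP requests.

```python
import uuid
from dataclasses import dataclass


@dataclass
class Task:
    """Stand-in for a remote job (hypothetical, not an Oozie class)."""
    name: str
    ticks_to_finish: int
    calls_back: bool = True  # False models a task that never invokes its callback
    elapsed: int = 0

    def is_done(self) -> bool:
        return self.elapsed >= self.ticks_to_finish


class Scheduler:
    """Toy scheduler: detects completion by callback, with polling as a fallback."""

    def __init__(self):
        self.running = {}    # callback URL -> Task
        self.completed = {}  # task name -> how completion was detected

    def start(self, task):
        # Hypothetical URL format, for illustration only.
        url = f"http://scheduler.example/callback?id={uuid.uuid4()}"
        self.running[url] = task
        return url

    def on_callback(self, url):
        # Called by the task itself when it finishes.
        task = self.running.pop(url, None)
        if task is not None:
            self.completed[task.name] = "callback"

    def poll(self):
        # Fallback: ask every task still marked as running whether it is done.
        for url, task in list(self.running.items()):
            if task.is_done():
                del self.running[url]
                self.completed[task.name] = "poll"


def run(scheduler, tasks, max_ticks=10):
    """Advance all tasks tick by tick until the scheduler sees them all complete."""
    urls = {scheduler.start(task): task for task in tasks}
    for _ in range(max_ticks):
        for url, task in urls.items():
            task.elapsed += 1
            if task.is_done() and task.calls_back:
                scheduler.on_callback(url)
        scheduler.poll()
        if not scheduler.running:
            break
    return scheduler.completed
```

A task that calls back is recorded as soon as it notifies the scheduler, while a task that stays silent is only discovered on the next polling pass.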

Apache Oozie has three job types:

Oozie Workflow

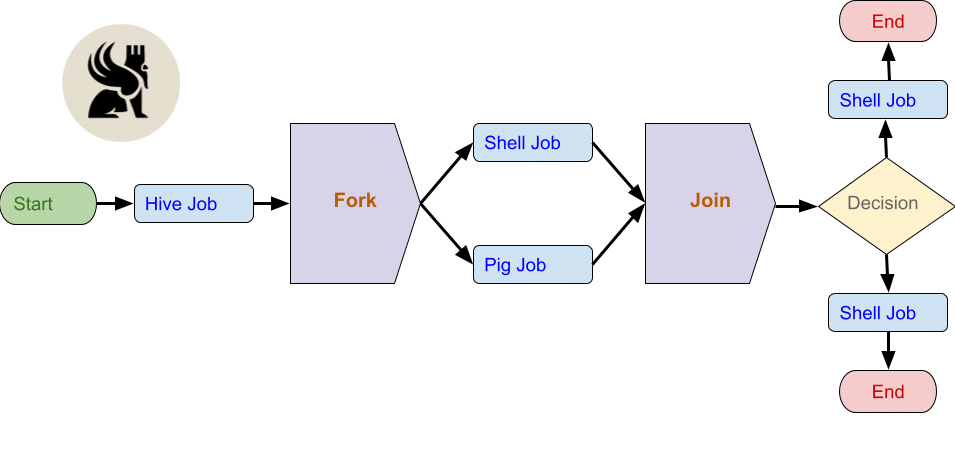

An Apache Oozie workflow is a set of actions organized as a directed acyclic graph (DAG). The actions depend on each other: an action can only be executed once the actions it depends on have completed.
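To illustrate how a DAG constrains execution order, here is a minimal Python sketch using the standard library's `graphlib` module (Python 3.9+). It is not how Oozie itself schedules actions, and the action names are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter

# Each action maps to the set of actions that must complete before it can run.
# Action names are hypothetical.
depends_on = {
    "import-data": set(),
    "clean-data": {"import-data"},
    "aggregate": {"clean-data"},
    "export-report": {"aggregate"},
}


def execution_order(dependencies):
    """Return one valid execution order for the given dependency graph.

    Raises graphlib.CycleError if the graph contains a cycle,
    i.e. if it is not a valid DAG.
    """
    return list(TopologicalSorter(dependencies).static_order())


if __name__ == "__main__":
    print(execution_order(depends_on))
```

A graph in which two actions depend on each other has no valid order, which is why a workflow must be acyclic.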

Different types of actions can be created as required. The workflow definition and any scripts or .jar files it uses must be uploaded to HDFS before the workflow is executed.

To run several jobs in parallel, we can use a fork. Every fork must be closed by a matching join, which waits for all the parallel branches to finish. The join assumes that all the nodes running in parallel are children of a single fork, as shown in the following diagram.
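Oozie workflows are defined in XML, so the fork/join pairing can be sketched by generating a workflow skeleton in Python. The element names (`workflow-app`, `fork`, `path`, `join`, `action`, `ok`, `error`, `kill`) follow the Oozie workflow schema; the node names `forking`, `joining` and `fail`, the action names and the schema version are illustrative assumptions. The action bodies are omitted, so the output is a structural sketch rather than a deployable workflow.

```python
import xml.etree.ElementTree as ET


def build_fork_join_workflow(name, parallel_actions):
    """Build a workflow-app element whose actions run between one fork and one join."""
    # Schema version chosen for illustration; use the one your Oozie server supports.
    app = ET.Element("workflow-app", {"name": name, "xmlns": "uri:oozie:workflow:0.5"})
    ET.SubElement(app, "start", {"to": "forking"})

    # The fork lists one <path> per branch to start in parallel.
    fork = ET.SubElement(app, "fork", {"name": "forking"})
    for action_name in parallel_actions:
        ET.SubElement(fork, "path", {"start": action_name})

    # Every branch transitions to the same join when it succeeds.
    for action_name in parallel_actions:
        action = ET.SubElement(app, "action", {"name": action_name})
        # The action body (e.g. <hive>, <pig>, <map-reduce>) is omitted in this sketch.
        ET.SubElement(action, "ok", {"to": "joining"})
        ET.SubElement(action, "error", {"to": "fail"})

    # The join waits for all branches of the fork before moving on.
    ET.SubElement(app, "join", {"name": "joining", "to": "end"})
    kill = ET.SubElement(app, "kill", {"name": "fail"})
    ET.SubElement(kill, "message").text = "A parallel action failed"
    ET.SubElement(app, "end", {"name": "end"})
    return app


if __name__ == "__main__":
    workflow = build_fork_join_workflow("demo-wf", ["hive-step", "pig-step"])
    ET.indent(workflow)  # pretty-print; requires Python 3.9+
    print(ET.tostring(workflow, encoding="unicode"))
```

Routing every branch's `ok` transition to the same join is what guarantees that the workflow continues only once all parallel actions have finished.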

Oozie Coordinator

The Apache Oozie coordinator lets you schedule complex workflows. It triggers these workflows according to time, data or event predicates. In this way, workflows start as soon as the given condition is met.

A coordinator job requires the following definitions:

- start: date and time at which the job starts

- end: date and time at which the job ends

- timezone: time zone of the coordinator application

- frequency: how often, in minutes, the job is to be run

Some additional properties are also available to control execution:

- timeout: the maximum time, in minutes, that an action will wait for its conditions to be satisfied before being discarded

- concurrency: the maximum number of actions that can run in parallel

- execution: the order in which waiting actions are executed, one of FIFO, LIFO or LAST_ONLY
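To make these properties concrete, the following Python sketch computes the times at which a coordinator would create actions, then picks which waiting actions to run next. It is a simplified model, not Oozie's code: the function names are hypothetical, the end time is assumed to be exclusive, no actions are assumed to be running already, and LAST_ONLY is read as "run only the most recent action".

```python
from datetime import datetime, timedelta, timezone


def nominal_times(start, end, frequency_minutes):
    """Yield each scheduled time from start (inclusive) to end (assumed exclusive)."""
    if frequency_minutes <= 0:
        raise ValueError("frequency must be a positive number of minutes")
    step = timedelta(minutes=frequency_minutes)
    current = start
    while current < end:
        yield current
        current += step


def select_ready(ready, execution="FIFO", concurrency=1):
    """Pick which waiting actions to run next (simplified model)."""
    ordered = sorted(ready)
    if execution == "FIFO":
        return ordered[:concurrency]        # oldest first
    if execution == "LIFO":
        return ordered[::-1][:concurrency]  # newest first
    if execution == "LAST_ONLY":
        return ordered[-1:]                 # only the most recent action
    raise ValueError(f"unknown execution order: {execution}")


if __name__ == "__main__":
    start = datetime(2024, 1, 1, 0, 0, tzinfo=timezone.utc)
    end = datetime(2024, 1, 1, 1, 0, tzinfo=timezone.utc)
    waiting = list(nominal_times(start, end, frequency_minutes=15))
    for order in ("FIFO", "LIFO", "LAST_ONLY"):
        picked = select_ready(waiting, order, concurrency=2)
        print(order, [t.strftime("%H:%M") for t in picked])
```

With a one-hour window and a 15-minute frequency, this model produces four scheduled times, and the execution setting decides which of them run first when several are waiting.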

Oozie Bundle

Oozie bundles are not, strictly speaking, a type of job. A bundle groups several coordinator applications so that they can be managed together. Bundles therefore have their own life cycle.

Conclusion

Apache Oozie is a powerful and flexible tool for the efficient, coordinated management of Hadoop jobs and workflows. Thanks to the workflow, coordinator and bundle features discussed in this article, Oozie has become a common choice for orchestrating distributed data analysis on Hadoop.

If you or your customer are looking for an efficient way to manage your Hadoop workflows, Apache Oozie is a tool to seriously consider.