Lately, the web has been inundated with photos and videos associated with the word DeepFake, but what is it really?

The term DeepFake combines “Deep Learning” and “Fake.” It refers to synthesizing images or videos from unrelated source images and videos to create an entirely new, fabricated scene that is hard to recognize as fake.

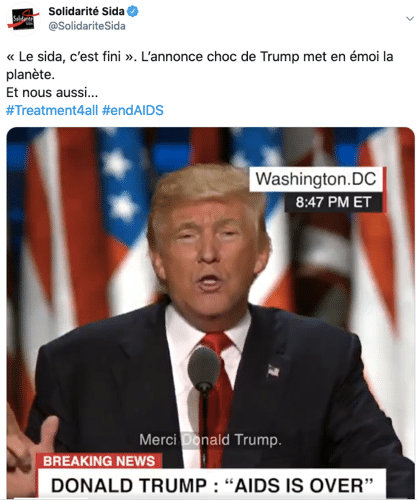

It quickly becomes evident that this tool can be dangerous, especially in the era of social media, where disinformation spreads on a massive scale. One notable example is the campaign against AIDS run a few months ago by Solidarité Sida, which included a DeepFake video of former U.S. President Donald Trump “announcing” the end of AIDS.

How does it work?

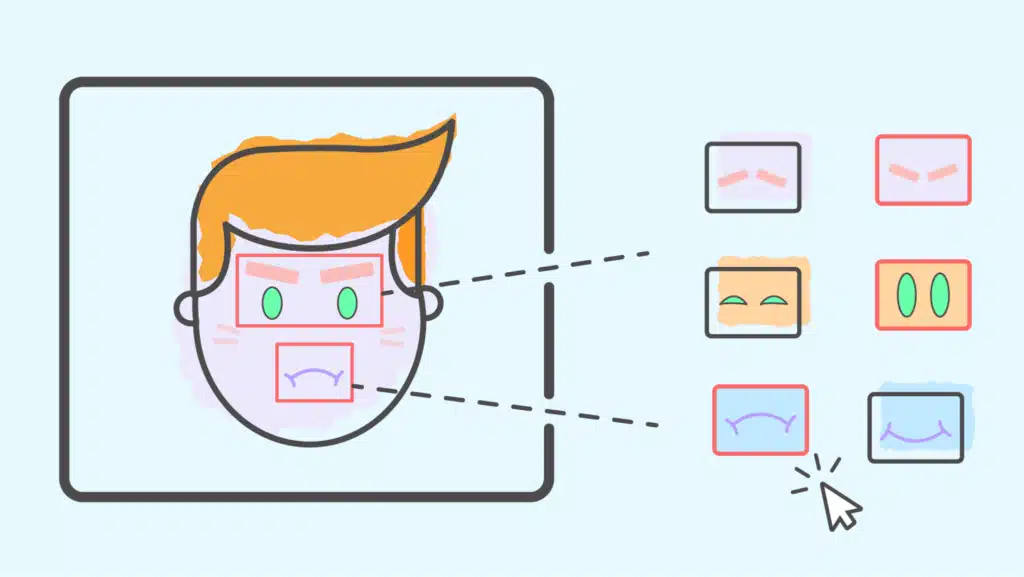

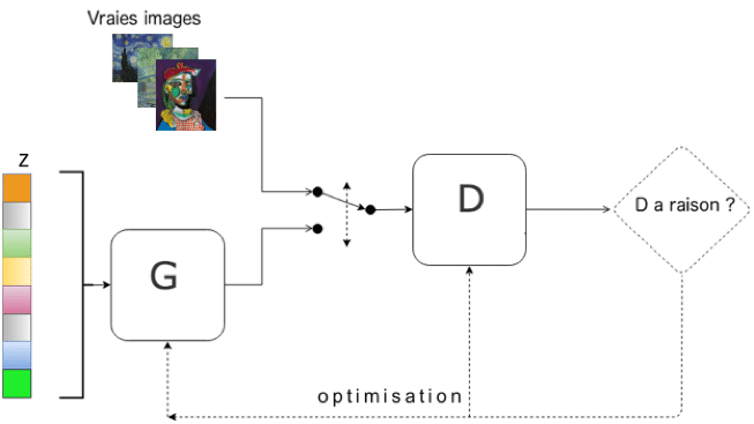

DeepFake algorithms are based on Generative Adversarial Networks (GANs).

In broad terms, this is a learning model with two components: a first neural network generates an object (an image, a video, a sound…), and a second network is responsible for determining whether a given object was produced by the first network or comes from real data. A database of real images similar to the desired output serves as ‘fuel’ for the algorithm.

A GAN is thus composed of two neural networks: a generator (G in the diagram) and a discriminator (D in the diagram). Their adversarial interaction trains both at once: the generator learns to fool the discriminator, while the discriminator learns to tell real samples from generated ones. For more details on the overall functioning of neural networks, please refer to our articles on Deep Learning and Style Transfer.

On the road to democratization / How to do it?

These days, you no longer need a master’s degree in Data Science from Stanford to produce your own DeepFakes, which may or may not be easy to spot with the naked eye.

One example is the Chinese application Zao, which lets users superimpose a face onto a video sequence.

It’s fun to use, but you have to give up the rights to the photos you take…

Another tool, and the most popular one on social networks, is the First-Order-Motion-Model, a package developed by Aliaksandr Siarohin, Stéphane Lathuilière, Sergey Tulyakov, Elisa Ricci and Nicu Sebe. It makes it possible to create disturbingly realistic DeepFake photos and videos with little effort: the creators have made a collaborative environment available on Google Colab, so the program can be launched without any technical prerequisites!
