Have you ever wished you could paint like Van Gogh? Reproduce Monet's aesthetic in modern landscapes?

In this article, we'll introduce you to a technique using Deep Learning to apply the style of another to an original image. This optimization technique is known as neural style transfer, first described in Leon A. Gatys, A Neural Algorithm of Artistic Style.

What is style transfer?

Style transfer is one of the most creative applications of Convolutional Neural Networks (to find out more about CNNs, we recently published an article on the subject).

It allows you to recover the style of an image and restore it to any other image. It’s an interesting technique that highlights the capabilities and internal representations of neural networks. It may also prove useful in certain scientific fields for the augmentation or simulation of image data.

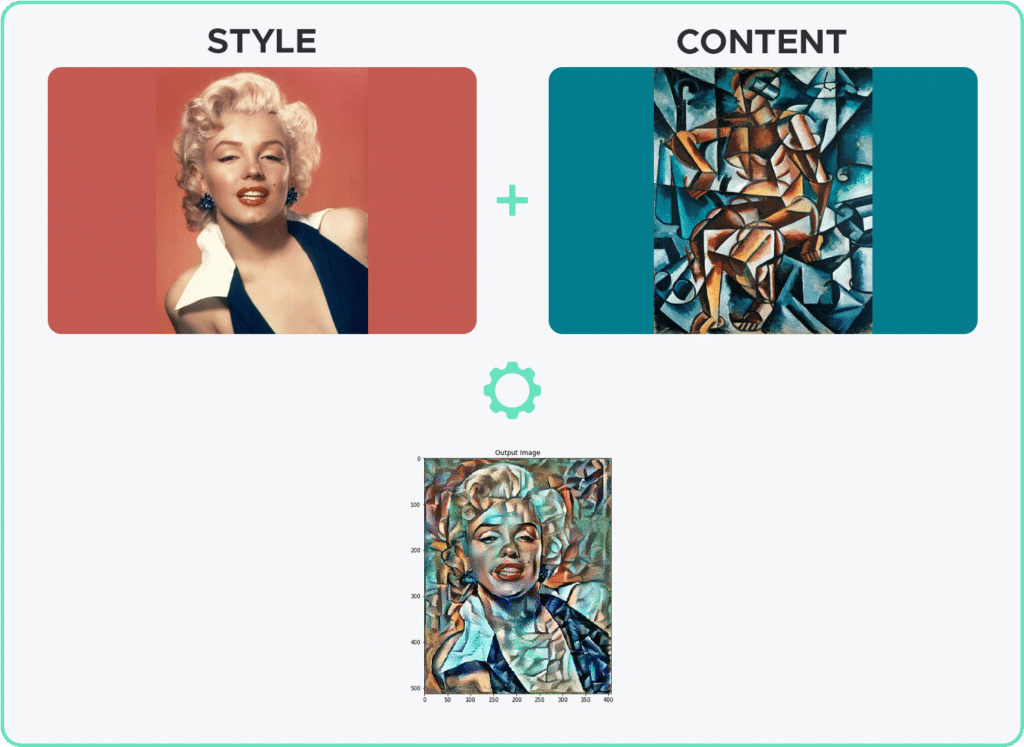

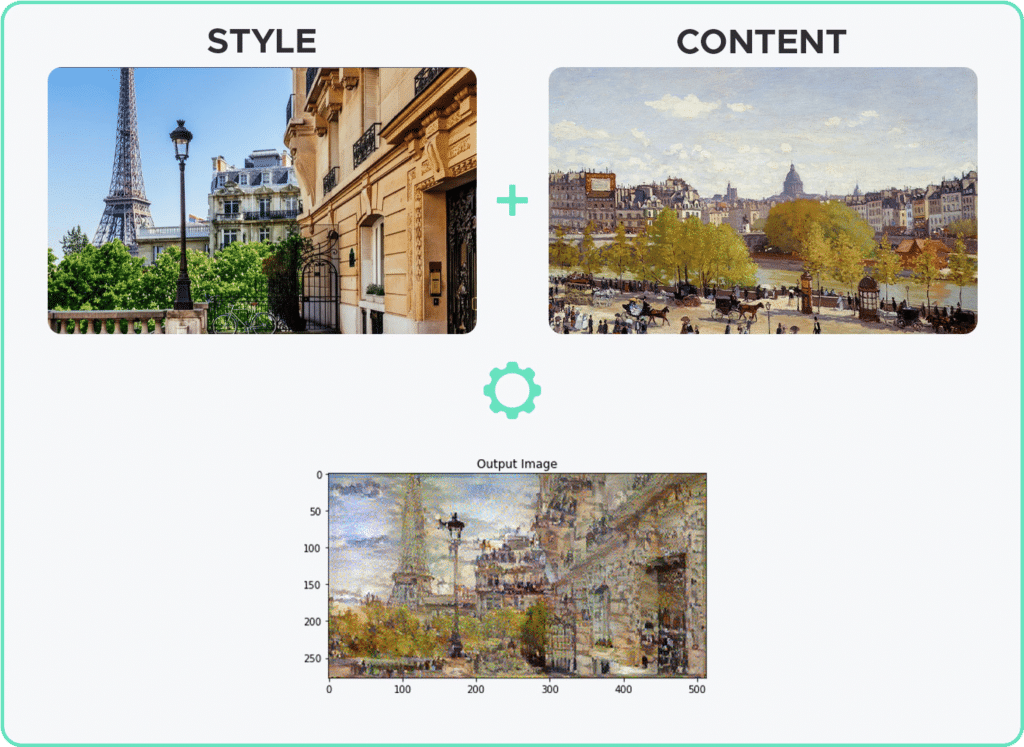

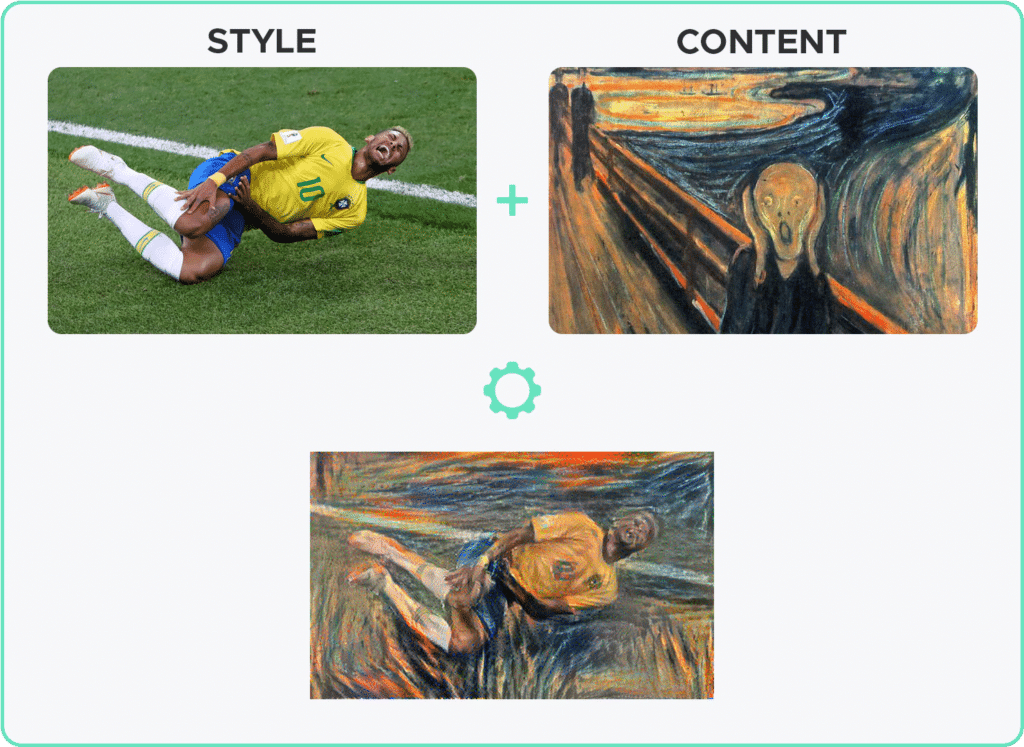

The almost infinite combinations of possible contents and styles bring out unique and ever more creative results in neural network enthusiasts, and sometimes even true masterpieces.

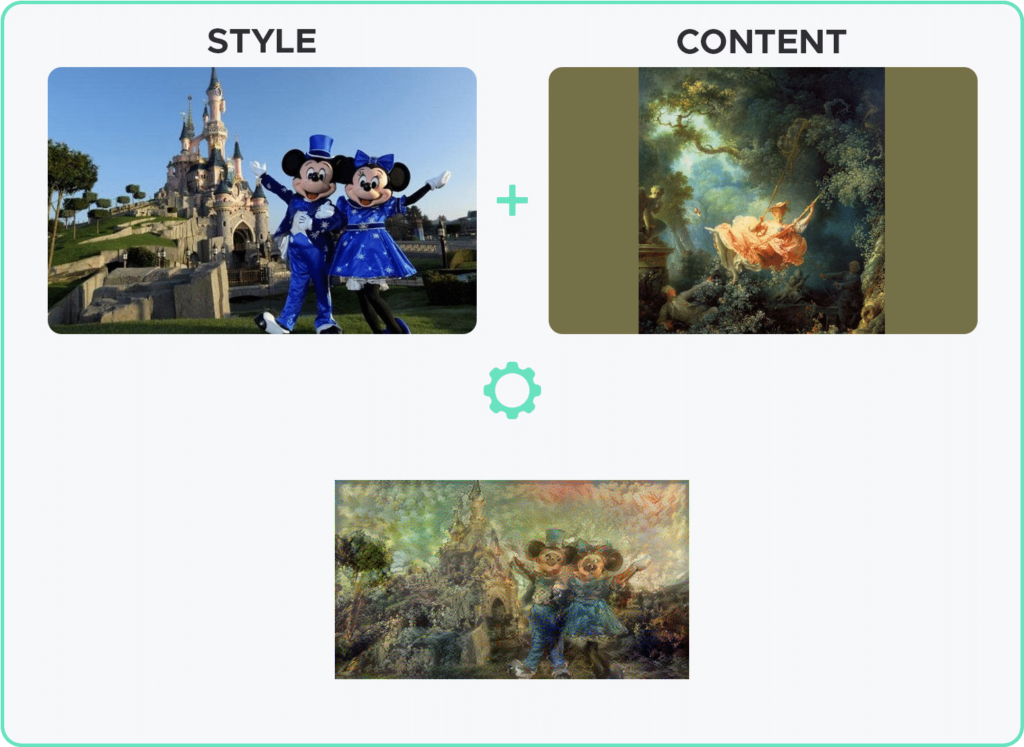

To work, it is necessary to choose a reference image for content and a reference image for style (such as a famous painter’s work of art). A third image, initialized with the content image, is then gradually optimized until it resembles the content image, but “painted” in the style of the second image.

The principle of style transfer mainly consists in defining two distance functions:

- one that describes the difference between the content of two images

- the other describes the difference between two images in terms of their styles.

We then simply minimize these two distances, using a backpropagation technique to obtain, after optimization, an image that matches the content of the content image and the style of the style image.

The distances we use are described below and are calculated from images extracted from intermediate layers of the neural network.

An impressive aspect of this technique is that no new neural network training is required – the use of pre-trained networks such as VGG19 suffices and works amply. If you’re wondering how it works, take a look at our article on Transfer Learning.

In this article, I present a concrete example of style transfer using the Tensorflow library.

The approach used here imitates as closely as possible the methods used in the original paper. The complete code for this article is available at the following address.

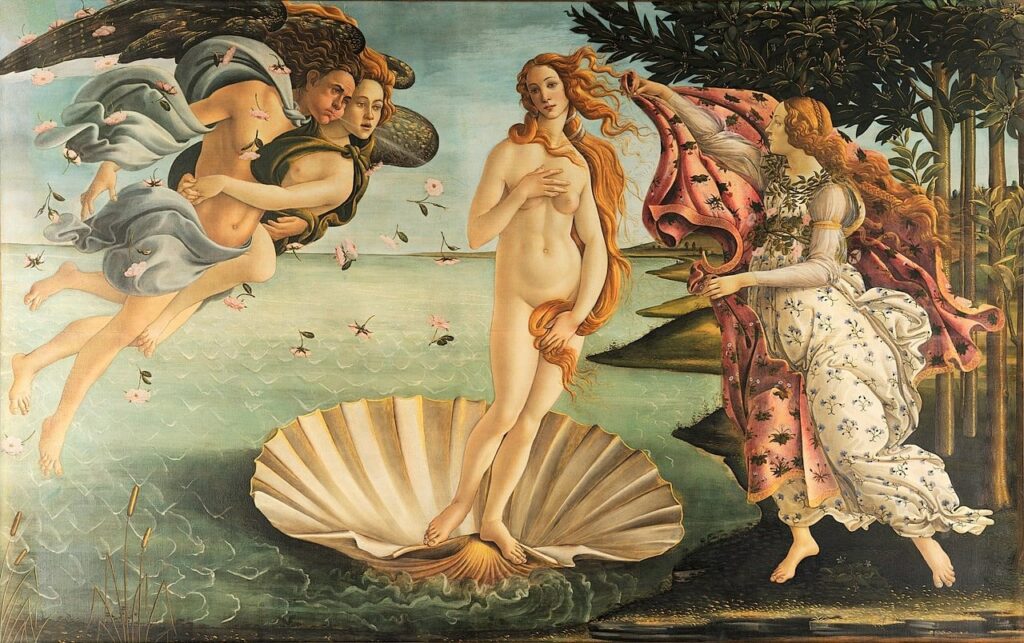

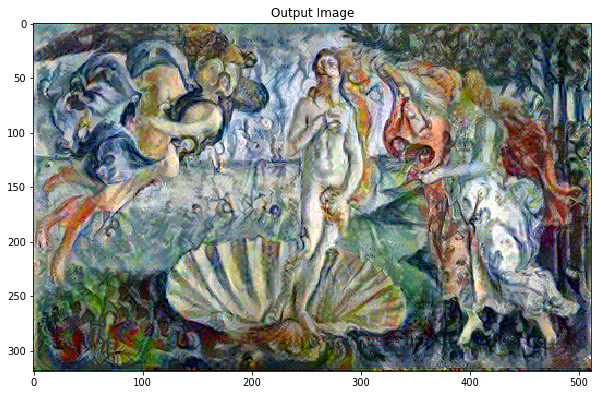

Using just two basic images at a time, we can create masterpieces like this depiction of Botticelli’s Birth of Venus, had it been painted by Marc Chagall 4 centuries later.

For our example, we need two basic images that we want to “blend” together. The first represents the content we want to keep. In my case, I’ll be using this well-known painting of The Birth of Venus by Sandro Botticelli:

The second image will contain the style we want to keep and apply to the first.

Here, I’ve chosen the incomparable style of iconic 20th-century painter Marc Chagall, thanks to one of his works entitled Life.

Image loading and pre-processing

First, we’ll load and prepare the data, applying the same pre-processing process we used to train the VGG networks. Then we’ll also create a function that performs the reverse processing so that we can display our final image.

How do you determine the style and content of an image?

When a convolutional neural network (such as VGG19) is trained to perform image classification, it must understand the image.

Unlike ‘classic’ Machine Learning, in Deep Learning, features are generated by the network itself during back-propagation learning.

During training, the neural network uses the pixels of the supplied images to build up an internal representation through various transformations. Once the network has been trained, the raw input image is transformed into a succession of complex features present in the image, in order to predict the class to which it belongs.

Thus, in the intermediate layers of the network, it is possible to extract some of the characteristics of an image in order to describe its content and style.

In order to obtain both the content and the style of our images, we will therefore extract certain intermediate layers from our model. Starting with the input layer of the network, the first layer activations represent low-level features such as edges and textures. The last layers represent higher-level features, such as ears or eyes.

So we’ll use one of the last layers to represent content, and a set of 5 layers (the first of each block) to represent image style.

We load the pre-trained model with its weights and create a new model that takes an image as input and returns the intermediate layers corresponding to the image’s content and style.

Definition and creation of loss functions

Our definition of the loss function for content is quite simple. We transmit to the network both the image of the desired content and our basic input image.

This will allow us to return the outputs of the intermediate layers of our model. Then we simply take the mean square error between the two intermediate representations of these images.

The purpose of this loss function is to ensure that the generated image retains some of the “global” characteristics of the content image.

In our example, we want to ensure that the generated image restores the pose of the goddess standing in a shell, as well as the figures surrounding her.

This means that shapes such as bodies, hair and the shell must be recognizable.

We backpropagate in the usual way to minimize this loss of content. We therefore modify the initial image until it generates a similar response at the output of the chosen intermediate layer to that of the original content image.

For the style, due to the number of layers, the loss function will be different.

Instead of comparing the raw intermediate layer outputs of the base input image and the style image, the authors of the original paper use the difference between the Gram matrices of the selected layers.

The Gram matrix is a square matrix containing the products of the points between each layer’s vectorized filter. The Gram matrix can therefore be seen as a non-normalized correlation matrix for the filters in a layer.

To generate a style for our input image, we perform a gradient descent from the content image to transform it into an image that will copy the style of the style image.

All that remains is to create a function to load our various images, pass them through our model to return the content and style representations, and then calculate the loss functions and gradient in order to move on to optimization.

And that’s it!

We can then apply the method to a number of images to test our transfer style. Here are a few examples:

Would you like to learn more about Deep Learning? Start one of our Data Scientist courses soon!