Several types of architecture can be used when designing a deep neural network. One of these is the dense neural network. So what is it, and how does it work? Find out in this article.

What is a dense neural network?

A dense neural network is a machine learning model in which each layer is fully connected to the previous layer: every neuron receives the outputs of every neuron in the layer before it.

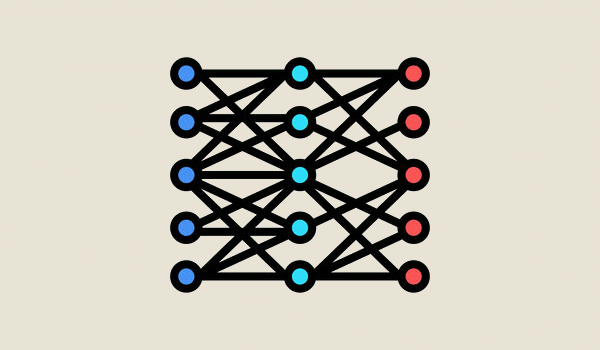

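As a reminder, layers are made up of nodes (or neurons). Each node multiplies its inputs by weighting coefficients, which increase or decrease the influence of each input, adds the results together with a bias, and usually passes the sum through an activation function. In doing so, it performs calculations on the data.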
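To make this concrete, here is a minimal sketch of what a single node computes, written in Python with NumPy. The input values, weights and bias, as well as the choice of ReLU as the activation function, are illustrative assumptions rather than values from this article.

```python
import numpy as np


def relu(z):
    """ReLU activation: keeps positive values and replaces negative ones by 0."""
    return np.maximum(0.0, z)


def node_output(inputs, weights, bias):
    """One node: weighted sum of its inputs, plus a bias, passed through ReLU."""
    weighted_sum = np.dot(inputs, weights) + bias
    return relu(weighted_sum)


x = np.array([1.0, 2.0, 3.0])    # three input values (illustrative)
w = np.array([0.5, -1.0, 2.0])   # one weight per input (illustrative)
b = 0.5                          # bias (illustrative)

print(node_output(x, w, b))      # 0.5 - 2.0 + 6.0 + 0.5 = 5.0
```

A negative weighted sum would be turned into 0 by ReLU, which is one way a node "decreases" the value passed on to the next layer.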

If we take the example of a stadium, the nodes are the seats and the layers are the rows of seats. Together they make up the architecture of the model itself (the stadium in its entirety). Within a model, each type of layer has its own role, depending on its characteristics and functionalities: some are used for time series analysis (recurrent layers, for example), others for image processing (convolutional layers), and still others for natural language processing (embedding layers, for example).

Returning to the dense neural network: the neurons in one layer are connected to all the neurons in the previous layer, which is why dense layers are also called fully connected layers. They are among the most commonly used layers in artificial neural networks.
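Full connectivity has a direct cost in parameters: each neuron holds one weight per neuron of the previous layer, plus one bias. The small Python function below (a hypothetical helper, named here only for illustration) counts them; the 784-input, 128-neuron example is an arbitrary assumption.

```python
def dense_param_count(n_inputs: int, n_units: int) -> int:
    """Number of trainable values in one fully connected (dense) layer."""
    weights = n_inputs * n_units  # one weight per connection (input, neuron)
    biases = n_units              # one bias per neuron
    return weights + biases


# e.g. a flattened 28x28 image (784 values) feeding a layer of 128 neurons
print(dense_param_count(784, 128))  # 784 * 128 + 128 = 100480
```

This is the same count Keras reports for a Dense layer of 128 units on 784 inputs when the bias is kept (its default).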

Good to know: even though we speak of a dense neural network, dense layers (or fully connected layers) are not necessarily used throughout the whole network.

They are often only used in the final stages of the network, for example to turn features extracted earlier into a prediction. In this case, the earlier stages use other types of layers, such as convolutional layers for images.
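As an illustration, here is a sketch of a small image classifier in Keras in which a convolutional layer extracts features and dense layers only appear at the end. The input size (28x28 grayscale images), the number of classes (10) and the layer sizes are assumptions chosen for the example.

```python
import keras
from keras import layers

model = keras.Sequential([
    keras.Input(shape=(28, 28, 1)),                       # 28x28 grayscale images (assumption)
    layers.Conv2D(16, kernel_size=3, activation="relu"),  # feature extraction
    layers.MaxPooling2D(pool_size=2),                     # downsample the feature maps
    layers.Flatten(),                                     # feature maps -> one vector per image
    layers.Dense(64, activation="relu"),                  # dense layers only in the final stages
    layers.Dense(10, activation="softmax"),               # one output per class (10 assumed)
])

model.summary()
```

The Flatten layer is what makes the hand-off possible: a Dense layer expects one feature vector per example, not a stack of 2D feature maps.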

How does the dense neural network work?

In a dense neural network, the dense layer receives the outputs of all the neurons in the previous layer. When the network processes a batch of examples, this input data takes the form of a matrix, with one row per example and one column per feature.

To connect the layers, the dense layer multiplies this input matrix by its own weight matrix (called the kernel), adds a bias vector and applies an activation function; in Keras notation, output = activation(dot(input, kernel) + bias). The result becomes the input of the next layer. Note, however, that this matrix multiplication imposes a condition on the shapes involved.

In other words, for this to work, the number of columns of the input matrix (the number of features) must equal the number of rows of the dense layer's weight matrix; the output then has one column per neuron of the dense layer. It’s this whole process that enables the network to create connections between the available data values.
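The shape rule can be checked with a few lines of NumPy. This sketch mimics what a dense layer computes for a batch of inputs (the activation is omitted); the batch size, number of features and number of neurons are arbitrary assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

inputs = rng.normal(size=(2, 3))   # batch of 2 examples, 3 features each
kernel = rng.normal(size=(3, 4))   # 3 rows (one per feature), 4 columns (one per neuron)
bias = np.zeros(4)                 # one bias per neuron

outputs = inputs @ kernel + bias   # (2, 3) @ (3, 4) -> (2, 4); bias added to each row
print(outputs.shape)               # (2, 4)

# A kernel with 5 rows does not match the 3 input columns: NumPy refuses to multiply
wrong_kernel = rng.normal(size=(5, 4))
try:
    inputs @ wrong_kernel
except ValueError as err:
    print("Shape mismatch:", err)
```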

To help you better understand how the dense neural network works, let’s take a look at its implementation using the Keras tool.

How can you implement a dense neural network with Keras?

Keras API

To set up a dense neural network, you need a high-performance tool. One of the most commonly used is Keras.

This is a Python API that runs on top of the TensorFlow machine learning platform (since Keras 3, JAX and PyTorch can also be used as backends). It provides pre-built layers that users can combine into different neural network architectures. Thanks to its intuitive interface and rapid deployment in production, Keras makes working with TensorFlow easier overall.

For example, to create a machine learning model, Keras layers are stacked one after another. A single dense layer is enough to build a linear regression model, while several stacked dense layers form a truly dense neural network.
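Both cases can be sketched with the Keras Sequential API. The toy data (points on the line y = 2x + 1), the optimizer settings and the layer sizes of the deeper network are illustrative assumptions, not part of the original article.

```python
import numpy as np
import keras
from keras import layers

# Linear regression: a single Dense layer with one unit and no activation (y = xW + b)
linear_model = keras.Sequential([
    keras.Input(shape=(1,)),
    layers.Dense(1),
])

# Dense neural network: several Dense layers stacked (sizes chosen arbitrarily)
dense_net = keras.Sequential([
    keras.Input(shape=(1,)),
    layers.Dense(32, activation="relu"),
    layers.Dense(32, activation="relu"),
    layers.Dense(1),
])

# Toy data following y = 2x + 1 (illustrative)
x = np.linspace(-1.0, 1.0, 200, dtype="float32").reshape(-1, 1)
y = 2.0 * x + 1.0

# Full-batch gradient descent on the mean squared error
linear_model.compile(optimizer=keras.optimizers.SGD(learning_rate=0.1), loss="mse")
linear_model.fit(x, y, epochs=300, batch_size=200, verbose=0)

kernel, bias = linear_model.layers[0].get_weights()
print(kernel.ravel(), bias)  # should end up close to [2.] and [1.]
```

With one unit and no activation, the learned kernel and bias are exactly the slope and intercept of the regression line; adding stacked layers with non-linear activations is what turns the model into a dense neural network.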

Good to know: the Keras API contains different types of layers, such as input layers, activation layers, embedding layers and, of course, dense layers. Let’s take a look at the different parameters used for the latter.

Keras hyperparameters

To design a dense neural network, Keras provides the Dense layer, which exposes several arguments (hyperparameters) and attributes. Here are the most important ones:

- Units: the number of neurons in the layer, which defines the size (dimensionality) of the dense layer's output. It must be a positive integer.

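- Activation: the activation function applied to the result of the weighted sum (for example "relu" or "sigmoid"). By introducing non-linearity, it allows the network to model non-linear relationships between input and output values. If no activation is specified, none is applied (linear activation).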

- The kernel weight matrix: this is the heart of the dense layer. This matrix, created by the layer and learned during training, is multiplied by the input data to extract its main characteristics. Its initialization, regularization and constraints can be set with the kernel_initializer, kernel_regularizer and kernel_constraint arguments.

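- The bias vector: a vector of learned values, one per neuron, added to the result of the matrix multiplication. It does not depend on the input data. By default it is initialized to 0 and then updated during training, and it can be disabled with use_bias=False. Here again, several parameters can be applied (bias_initializer, bias_constraint and bias_regularizer).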
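The following sketch creates a Dense layer with the arguments described above and inspects the kernel and bias it builds. The specific values (4 units, ReLU, an L2 penalty of 1e-4, a max-norm constraint of 3) are illustrative assumptions, not recommendations.

```python
import numpy as np
import keras
from keras import layers, regularizers, constraints

layer = layers.Dense(
    units=4,                                     # 4 neurons -> output size 4
    activation="relu",                           # applied to dot(input, kernel) + bias
    kernel_initializer="glorot_uniform",         # Keras default for the kernel
    kernel_regularizer=regularizers.L2(1e-4),    # adds a penalty on large weights to the loss
    kernel_constraint=constraints.MaxNorm(3.0),  # caps each neuron's weight norm after updates
    bias_initializer="zeros",                    # Keras default: bias starts at 0
)

x = np.ones((2, 3), dtype="float32")  # batch of 2 examples with 3 features each
y = layer(x)                          # the first call creates the kernel and the bias

kernel, bias = layer.get_weights()
print(kernel.shape)    # (3, 4): one row per input feature, one column per neuron
print(bias.shape)      # (4,): one value per neuron
print(tuple(y.shape))  # (2, 4)
```

Note that the layer only creates its kernel once it sees the input size (3 features here), which is why the shape of the weight matrix depends on the previous layer.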

Things to remember

- In a dense neural network, each dense layer receives the outputs of the previous layer.

- The layers making up the architecture of the learning model are thus fully connected to one another.

- Several tools are available to implement this learning model. Keras, for example, provides a ready-made Dense layer with a complete set of arguments, which makes the work of data scientists much easier.

- That said, to fully master the design of a dense neural network, it’s best to train in data science.