You Only Look Once, or YOLO, is an algorithm that detects objects at a single glance, performing detection and classification simultaneously. Find out all you need to know about this revolutionary approach to AI and computer vision!

Object detection is one of the mainstays of computer vision. The ability to detect and classify objects in images and videos is essential for many modern technologies.

These include autonomous vehicles, surveillance systems, robotics and even generative artificial intelligence.

After years of research aimed at improving the performance and efficiency of detection systems, it was in 2016 that an innovative algorithm introduced by Joseph Redmon changed everything: YOLO, You Only Look Once.

Back to the origins of object detection systems

Object detection is a fundamental task in computer vision. It underpins many recent innovations, such as facial recognition, augmented reality, automated surveillance and autonomous driving.

In the past, the most widely used techniques were mainly based on traditional multi-step approaches. These methods were effective, but had major drawbacks in terms of processing speed.

For example, Region-Based Convolutional Neural Networks (R-CNN) worked by first proposing regions of interest (RoIs) in the image. These proposals were generated by algorithms based on segmentation or contour detection, such as Selective Search.

Each RoI was then resized to a fixed size and fed to a convolutional neural network (CNN), whose features were used by a classifier to determine whether the region contained an object and, if so, which one.

At the time, this technique was a significant advance, but it was very slow because of the large number of candidate regions to evaluate: around 2,000 per image, each passed through the CNN separately.

To overcome these limitations, Fast R-CNN was introduced. It runs the CNN only once over the whole image and classifies each RoI directly from the resulting shared feature map, instead of running the network on every region and relying on separate classifiers.
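Reading each region from a shared feature map relies on RoI pooling: the region, projected onto the feature map, is split into a fixed grid of sub-windows and only the maximum of each sub-window is kept, so regions of any size produce an output of the same size. Below is a minimal sketch in plain Python, assuming a single-channel feature map and RoI coordinates already expressed in feature-map cells; the function name `roi_max_pool` and the 2 × 2 output size are illustrative choices, not Fast R-CNN's actual code.

```python
import math
from typing import List, Tuple


def roi_max_pool(feature_map: List[List[float]],
                 roi: Tuple[int, int, int, int],
                 out_h: int = 2, out_w: int = 2) -> List[List[float]]:
    """Max-pool one region of a single-channel feature map into an out_h x out_w grid.

    `roi` is (x0, y0, x1, y1) in feature-map cells, with x1 and y1 exclusive.
    Hypothetical helper for illustration only.
    """
    x0, y0, x1, y1 = roi
    roi_h, roi_w = y1 - y0, x1 - x0
    pooled = []
    for i in range(out_h):
        # Rows of the i-th sub-window: floor the start, ceil the end so no cell is skipped.
        r_start = y0 + math.floor(i * roi_h / out_h)
        r_end = y0 + math.ceil((i + 1) * roi_h / out_h)
        row = []
        for j in range(out_w):
            c_start = x0 + math.floor(j * roi_w / out_w)
            c_end = x0 + math.ceil((j + 1) * roi_w / out_w)
            # Keep the strongest activation inside this sub-window.
            row.append(max(feature_map[r][c]
                           for r in range(r_start, r_end)
                           for c in range(c_start, c_end)))
        pooled.append(row)
    return pooled


fmap = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
print(roi_max_pool(fmap, (0, 0, 4, 4)))  # [[6, 8], [14, 16]]
print(roi_max_pool(fmap, (1, 1, 4, 3)))  # [[7, 8], [11, 12]]
```

A 4 × 4 region and a 3 × 2 region both come out as 2 × 2 grids, which is what allows a single classification head to process every RoI.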

Despite this speed gain, the region-proposal step, still handled by an external algorithm, remained slow and complex. This is why Faster R-CNN was created: it generates the regions of interest with a neural network of its own, the Region Proposal Network (RPN).

This development further automated and accelerated the generation of RoIs, but detection still relied on several sequential stages, which kept it too slow for many real-time uses.

In addition to their lack of speed, these methods had other shortcomings, such as computational complexity and difficulty scaling. This severely limited their applicability in scenarios requiring real-time detection.

Their effectiveness also depended on the quality of the proposed regions of interest, which could lead to detection errors if important regions were missed.

For all these reasons, it was high time for a new technique to shake things up in this field. And that’s precisely what has happened with YOLO.

What is You Only Look Once?

If You Only Look Once marked a decisive turning point, it was because of its innovative approach. By detecting and classifying objects simultaneously, in a single pass through a convolutional neural network, it combined real-time speed with competitive accuracy.

Its unified, single-stage architecture, which does away with a separate region-proposal stage, made it far faster than previous methods, although the first version was somewhat less accurate than Faster R-CNN, particularly on small objects.

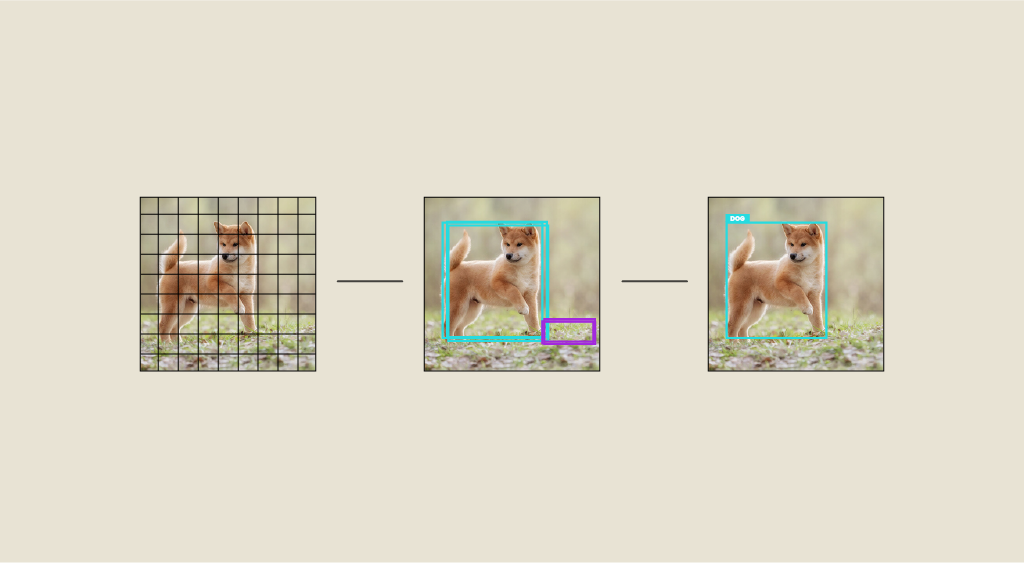

Indeed, its structure is fundamentally different from that of traditional techniques. Instead of proposing regions of interest in a first step, it divides the input image into a grid of cells (7 × 7 in the original version). The cell that contains the center of an object is responsible for detecting it, by predicting the coordinates of its bounding box as well as its probabilities of belonging to the different classes.
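The "responsible cell" rule can be sketched in a few lines: compute the center of the object's box, normalize it by the image size and scale it to grid units. The sketch assumes boxes given as pixel corners and a 7 × 7 grid over a 448 × 448 image, the setting of the original paper; the name `responsible_cell` is hypothetical.

```python
from typing import Tuple


def responsible_cell(box: Tuple[float, float, float, float],
                     img_w: int, img_h: int, S: int = 7) -> Tuple[int, int]:
    """Return (row, col) of the S x S grid cell containing the box center.

    `box` is (x_min, y_min, x_max, y_max) in pixels.
    """
    x_min, y_min, x_max, y_max = box
    cx = (x_min + x_max) / 2
    cy = (y_min + y_max) / 2
    # Normalize the center to [0, 1], then scale to grid units.
    col = int(cx / img_w * S)
    row = int(cy / img_h * S)
    # Clamp so a center lying exactly on the right or bottom edge stays in the grid.
    return min(row, S - 1), min(col, S - 1)


print(responsible_cell((100, 50, 200, 250), 448, 448))  # (2, 2)
```

The box centered at (150, 150) falls in the third row and third column of the grid, so only that cell is trained to predict it.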

In simple terms, each cell predicts a fixed number of bounding boxes, each with a confidence score, together with a probability for each class, all based directly on features extracted by the CNN. This eliminates the need to go through the image several times.
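In the original paper, each cell predicts B boxes with five values each (x, y, w, h and a confidence equal to Pr(Object) × IoU with the ground truth), plus C class probabilities shared by the cell. The network output is therefore an S × S × (B·5 + C) tensor, and at test time each box's class-specific score is its confidence multiplied by the conditional class probability. The helpers below are a minimal sketch of that bookkeeping; their names are hypothetical, and the defaults S = 7, B = 2, C = 20 are the PASCAL VOC values from the paper.

```python
from typing import List, Tuple


def yolo_v1_output_shape(S: int = 7, B: int = 2, C: int = 20) -> Tuple[int, int, int]:
    """Shape of the prediction tensor: each of the S x S cells outputs
    B boxes x (x, y, w, h, confidence) plus C class probabilities."""
    return (S, S, B * 5 + C)


def class_specific_scores(box_confidence: float,
                          class_probs: List[float]) -> List[float]:
    """Score of each class for one box: Pr(Class_i | Object) * box confidence."""
    return [p * box_confidence for p in class_probs]


print(yolo_v1_output_shape())                    # (7, 7, 30)
print(class_specific_scores(0.5, [0.25, 0.75]))  # [0.125, 0.375]
```

A single forward pass thus yields 7 × 7 × 2 = 98 candidate boxes at once, each already scored for every class.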

This use of a convolutional neural network to extract features from the input image is at the heart of YOLO. The network stacks convolutional and pooling layers (24 convolutional layers followed by 2 fully connected layers in the original version) that capture useful patterns and features at different spatial scales.

This lets the network learn relevant object representations automatically, and because convolutions share their weights across the whole image, they can be computed efficiently, greatly reducing computational cost.
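How the network shrinks a 448 × 448 image down to a 7 × 7 grid follows from the standard output-size formula for convolution and pooling layers, out = floor((n + 2p − k) / s) + 1, for input size n, kernel size k, stride s and padding p. The sketch below applies it to a stack of six downsampling steps loosely modeled on the original YOLO (a 7 × 7 stride-2 convolution, four 2 × 2 stride-2 max-pools and a 3 × 3 stride-2 convolution); the padding values and the omission of the stride-1 layers are simplifying assumptions.

```python
from typing import Iterable, Tuple


def conv_output_size(n: int, k: int, s: int = 1, p: int = 0) -> int:
    """Spatial output size of a convolution or pooling layer on an n x n input."""
    return (n + 2 * p - k) // s + 1


def feature_map_size(n: int, layers: Iterable[Tuple[int, int, int]]) -> int:
    """Apply a sequence of (kernel, stride, padding) layers to an n x n input."""
    for k, s, p in layers:
        n = conv_output_size(n, k, s, p)
    return n


# Assumed downsampling stack: each layer halves the resolution.
layers = [(7, 2, 3)] + [(2, 2, 0)] * 4 + [(3, 2, 1)]
print(feature_map_size(448, layers))  # 7
```

Each step halves the resolution (448, 224, 112, 56, 28, 14, 7), so every cell of the final grid summarizes a 64 × 64 pixel patch of the input while still seeing features computed over a much wider area.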

Another key feature, introduced with YOLOv2, is the use of “anchor boxes” (or “anchors”): predefined bounding boxes of different sizes and shapes that serve as references for predictions.

Each grid cell is associated with a certain number of anchors, which helps YOLO handle objects of different shapes and scales. In the YOLOv2 paper, this mainly improved recall, so that fewer objects went undetected.
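With anchors, the network no longer predicts box sizes directly: for each anchor it outputs offsets (tx, ty, tw, th) that are applied to the anchor's prior shape. The sketch below follows the parameterization described in the YOLOv2 paper, bx = σ(tx) + cx, by = σ(ty) + cy, bw = pw·e^tw, bh = ph·e^th, with all coordinates in grid-cell units; the function names and argument layout are assumptions for illustration.

```python
import math
from typing import Tuple


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def decode_anchor_box(t: Tuple[float, float, float, float],
                      cell: Tuple[int, int],
                      anchor: Tuple[float, float]) -> Tuple[float, float, float, float]:
    """Turn raw offsets (tx, ty, tw, th) into a box (bx, by, bw, bh) in grid units."""
    tx, ty, tw, th = t
    cx, cy = cell           # column and row index of the grid cell
    pw, ph = anchor         # anchor (prior) width and height, in grid units
    bx = sigmoid(tx) + cx   # the sigmoid keeps the center inside its own cell
    by = sigmoid(ty) + cy
    bw = pw * math.exp(tw)  # the anchor shape is scaled up or down
    bh = ph * math.exp(th)
    return bx, by, bw, bh


print(decode_anchor_box((0.0, 0.0, 0.0, 0.0), (3, 5), (1.5, 2.0)))  # (3.5, 5.5, 1.5, 2.0)
```

With all offsets at zero, the predicted box is simply the anchor centered in its cell; training only has to learn corrections to these priors, which tends to make learning more stable than predicting raw sizes.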

What are the advantages?

You Only Look Once offers several significant improvements over previous object detection methods, which is what has made it one of the most widely used algorithms in computer vision.

Its main strength is its ability to detect objects in real time (45 frames per second for the original version), while reducing the total number of calculations required.

Resource utilization is optimized, since shared features are calculated only once.

YOLO also stands out for its robustness. Because it looks at the entire image at once, it takes global context into account, which reduces false detections on the background and helps it generalize to objects of various shapes and to a wide variety of scenarios.

Its efficient architecture also makes it practical to process larger images while keeping processing times short.

This is a real asset for applications such as aerial or satellite image analysis.

What's it for? What are the applications?

Thanks to its flexibility and efficiency, You Only Look Once has found its way into a wide variety of fields and applications. In autonomous vehicles, it is used to identify and track pedestrians, other vehicles, road signs and any potential obstacles on the road in real time.

This enables control systems to react quickly to changes and ensure safe driving.

For video surveillance, YOLO can detect suspicious activity, intruders or abandoned objects, even in crowds. It can help locate lost people or animals in hard-to-reach places, and it is also used for security checks at airports, railway stations and other infrastructure.

Combined with a tracking algorithm, it can also be used to follow the movements of individuals across video sequences. This is a relevant use case for monitoring large spaces such as stadiums and shopping malls, or major sporting events.
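YOLO itself detects objects frame by frame; following a person over time means linking each new detection to an existing track. A minimal sketch of that linking step is shown below, assuming a simple greedy matching on intersection over union (IoU) rather than any particular tracking library; the function names and the 0.3 threshold are hypothetical choices.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def match_detections(tracks: Dict[int, Box], detections: List[Box],
                     threshold: float = 0.3) -> Dict[int, Box]:
    """Greedily assign each new detection to the track it overlaps most.

    Unmatched detections open new tracks; in this simplified version,
    tracks with no matching detection are dropped.
    """
    # Every (IoU, track id, detection index) pair, best overlap first.
    candidates = sorted(
        ((iou(box, det), tid, j)
         for tid, box in tracks.items()
         for j, det in enumerate(detections)),
        reverse=True,
    )
    updated: Dict[int, Box] = {}
    used_dets = set()
    for score, tid, j in candidates:
        if score < threshold:
            break  # remaining pairs overlap too little
        if tid in updated or j in used_dets:
            continue  # track or detection already taken
        updated[tid] = detections[j]
        used_dets.add(j)
    next_id = max(tracks, default=-1) + 1
    for j, det in enumerate(detections):
        if j not in used_dets:
            updated[next_id] = det
            next_id += 1
    return updated


tracks = {0: (0, 0, 10, 10), 1: (20, 20, 30, 30)}
detections = [(21, 21, 31, 31), (1, 1, 11, 11), (100, 100, 110, 110)]
print(match_detections(tracks, detections))
```

Here tracks 0 and 1 keep their identities as the two people move by one pixel, while the distant box opens a new track with id 2. Production trackers add motion models and appearance cues, but the association step follows the same idea.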

Another application example is traffic management, where the algorithm can locate license plates and faces for automatic recognition.

In medicine, You Only Look Once is used to detect anomalies or specific objects in medical images such as X-rays, MRI scans or CT scans.

By speeding up image analysis, it can help diagnose diseases early, which can be vital in medical emergencies.

Conclusion: YOLO, one of the driving forces behind the new wave of AI

By making object detection both real-time and accurate enough for practical use, You Only Look Once has opened up a myriad of new possibilities for computer vision. It is one of the innovations that have ushered AI into a new era.

To learn how to handle the best AI algorithms, you can choose DataScientest. Our various Data Science training courses include modules dedicated to AI and its various branches.

In particular, you’ll learn about Machine Learning techniques, reinforcement learning, neural networks and tools like Keras, TensorFlow and PyTorch.

Through our courses, you can acquire all the skills required to become a Data Analyst, Data Scientist, Data Engineer, Data Product Manager or Machine Learning Engineer.

At the end of the course, you can obtain a state-approved “Project Manager in Artificial Intelligence” certification, a diploma from Mines ParisTech PSL Executive Éducation, and a certificate from our cloud partners AWS and Microsoft Azure. Discover DataScientest!