Graph Neural Networks (GNNs) are neural networks designed to learn directly from data represented as graphs. Find out more about how GNNs work, their architectures and their applications below.

What are Graph Neural Networks?

As data science has grown rapidly, Machine Learning has had to adapt to new data structures and new demands. In particular, with the emergence of new types of data that do not fit neatly into tables or grids, it has become necessary to create machine learning models capable of handling irregular data such as graphs.

This is the case with Graph Neural Networks (GNNs), which operate directly on the data in a graph. GNNs extend the conventional neural networks of Deep Learning, which are designed for regularly structured data such as tables, images or sequences. Graphs are irregular data structures that can represent real-world phenomena such as air and road navigation systems or molecular structures.

Graph Neural Networks: What is a graph?

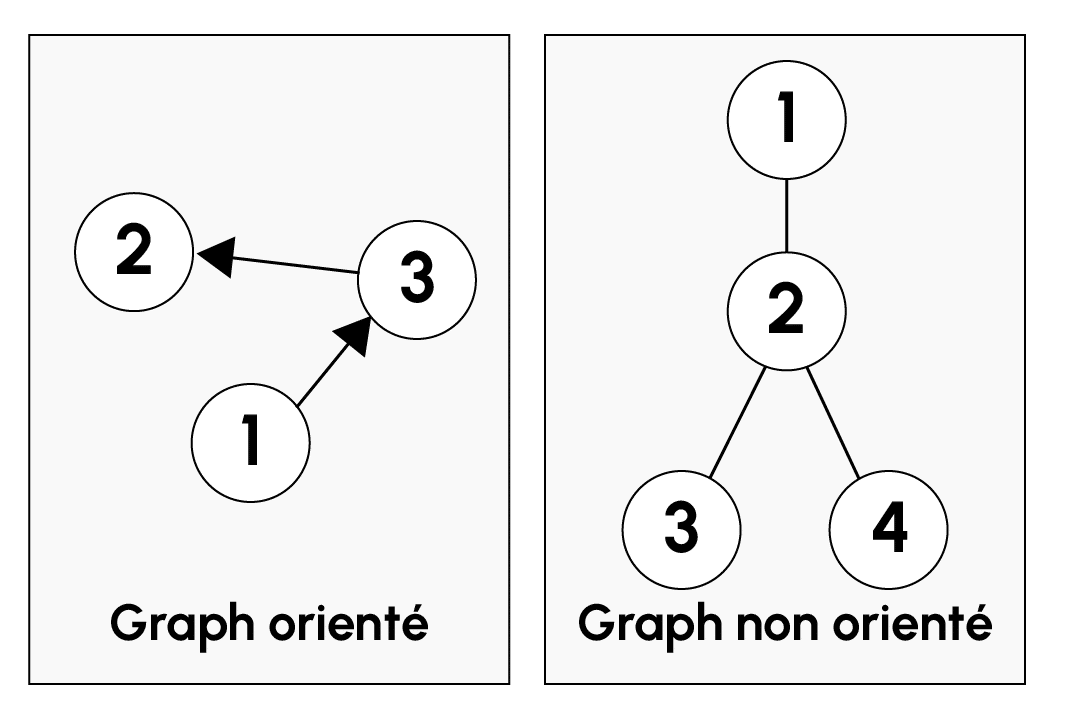

A graph is a complex data structure used to represent objects and the relationships between them. A graph is made up of a set of nodes and edges or links. Edges are used to link the various nodes together. Edges can be directed or undirected. In a directed graph, the edges have a direction which indicates the direction of the relationship, whereas in an undirected graph, the edges have no direction.
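To make the difference between directed and undirected graphs concrete, here is a minimal sketch in Python that stores a graph as an adjacency list. The function name `build_adjacency` and the node names are purely illustrative assumptions, not part of any library.

```python
from collections import defaultdict

def build_adjacency(edges, directed=False):
    """Return a dict mapping each node to the set of its neighbours."""
    adjacency = defaultdict(set)
    for source, target in edges:
        adjacency[source].add(target)
        adjacency[target]  # make sure the target also appears as a key
        if not directed:
            # An undirected edge can be followed in both directions.
            adjacency[target].add(source)
    return dict(adjacency)

# Hypothetical edge list: the names are made up for illustration.
edges = [("alice", "bob"), ("bob", "carol")]

undirected = build_adjacency(edges)
directed = build_adjacency(edges, directed=True)
```

In the undirected version, "bob" is linked to both "alice" and "carol". In the directed version, "bob" only points to "carol", and "carol" points to nobody.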

More concretely, nodes can represent users of a social network, while links correspond to friendships between users. A graph can also be seen as the structure of a molecule, or a transport network.

GNNs use embeddings to encode the information contained in a graph, such as its structure and relationships. These embeddings form a numerical representation of the graph that can then be used to train Machine Learning algorithms.

There are two types of embedding in a graph: node embeddings and edge embeddings. Node embeddings are used to represent the properties of each node, while edge embeddings are used to model the properties of relationships between nodes.
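As a rough illustration, the two kinds of embedding can be stored as two NumPy arrays alongside a list of edges. The tiny graph, the embedding sizes (4 and 2) and the random values below are all assumptions chosen for the example. In practice the values would come from real node and edge features.

```python
import numpy as np

# Hypothetical graph with 3 nodes and 2 directed edges: 0 -> 1 and 1 -> 2.
edge_index = np.array([[0, 1],    # source node of each edge
                       [1, 2]])   # target node of each edge

rng = np.random.default_rng(0)
node_embeddings = rng.normal(size=(3, 4))  # one 4-dimensional vector per node
edge_embeddings = rng.normal(size=(2, 2))  # one 2-dimensional vector per edge

# Row i of node_embeddings describes node i,
# row k of edge_embeddings describes the edge stored in column k of edge_index.
```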

How do graph neural networks work?

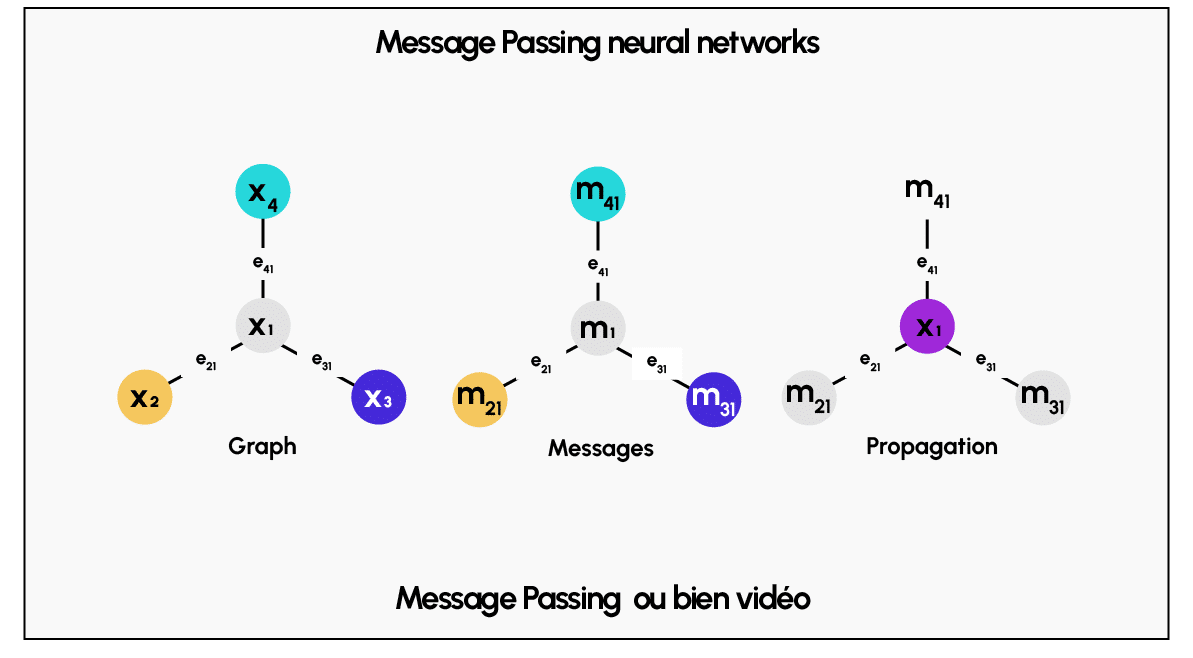

Graph neural networks rely on a technique known as “message passing”, in which the nodes of a graph exchange information with their neighbours. The aim is to update the state of each node using local information from its neighbours and the information carried by the edges.

More specifically, message passing transmits the information contained in the node embeddings via the edges of the graph. Each node sends a message to its neighbours using its node embedding. Each message contains information about the sending node and properties specific to the edge linking the node to its neighbour. The receiving node then combines all the messages from its neighbours with its initial embedding and the corresponding edge embeddings to update its own node embedding using a non-linear function. The combination of messages from neighbouring nodes and the initial embedding can correspond to different operations (sum, average, maximum, etc.) depending on the context.
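The steps described above can be sketched in a few lines of NumPy. This is a simplified illustration rather than a reference implementation: the function name `message_passing_step`, the weight matrices `w_msg` and `w_self`, the choice of a sum for aggregation and of ReLU as the non-linear function are all assumptions made for the example.

```python
import numpy as np

def message_passing_step(node_emb, edge_index, edge_emb, w_msg, w_self):
    """One round of message passing with sum aggregation and a ReLU update."""
    sources, targets = edge_index
    # Each message combines the sender's embedding with the edge's embedding.
    messages = np.concatenate([node_emb[sources], edge_emb], axis=1) @ w_msg
    # Sum the messages arriving at each receiving node.
    aggregated = np.zeros((node_emb.shape[0], w_msg.shape[1]))
    np.add.at(aggregated, targets, messages)
    # Combine with the node's own embedding, then apply the non-linearity.
    return np.maximum(0.0, node_emb @ w_self + aggregated)

rng = np.random.default_rng(0)
# Undirected path 0 - 1 - 2, stored as two directed edges per link.
edge_index = np.array([[0, 1, 1, 2],
                       [1, 0, 2, 1]])
node_emb = rng.normal(size=(3, 4))     # made-up node embeddings
edge_emb = rng.normal(size=(4, 2))     # made-up edge embeddings
w_msg = rng.normal(size=(4 + 2, 4))    # would be learned during training
w_self = rng.normal(size=(4, 4))       # would be learned during training

updated = message_passing_step(node_emb, edge_index, edge_emb, w_msg, w_self)
```

Replacing the sum with an average or a maximum only changes the aggregation line. The rest of the update stays the same.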

The message passing technique is repeated several times to allow each node to integrate the information from its neighbours and thus capture more and more information about the structure of the graph.
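A small experiment shows why repetition matters. In the sketch below, which assumes a four-node path graph and weight-free sum aggregation for simplicity, each node starts with a one-hot vector identifying itself. After each round we record which nodes' signals have reached node 0.

```python
import numpy as np

# Path graph 0 - 1 - 2 - 3 as an adjacency matrix with self-loops,
# so that each node also keeps its own information.
adjacency = np.array([[1, 1, 0, 0],
                      [1, 1, 1, 0],
                      [0, 1, 1, 1],
                      [0, 0, 1, 1]], dtype=float)

# One-hot starting states: entry (i, j) is non-zero once node j's signal reaches node i.
state = np.eye(4)

reach = []
for _ in range(3):
    state = adjacency @ state  # every node sums the states of its neighbours
    reach.append(np.flatnonzero(state[0]).tolist())

# reach == [[0, 1], [0, 1, 2], [0, 1, 2, 3]]
```

After one round node 0 only knows about its direct neighbour. After three rounds, information from the far end of the path has arrived.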

At the end of the process, each node contains an updated node embedding that takes into account all the characteristics of its neighbours and the edge embeddings. In the embedding space, which corresponds to the vector space in which the node embeddings are grouped, the distance between node embeddings depends on their similarity. The more similar the node embeddings, the closer they are.

It should also be noted that the aggregation functions used in message passing are invariant to the order in which neighbouring nodes are processed. This matters because a graph has no natural node ordering: the result for a node depends only on which neighbours it has, not on the order in which they are listed.
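This property is easy to check numerically. The sketch below, with made-up messages and a hypothetical helper `is_order_invariant`, compares three order-invariant aggregations (sum, mean, max) with plain concatenation, which does depend on the order of the neighbours.

```python
import numpy as np

def is_order_invariant(aggregate, messages, permutation):
    """Check that aggregating gives the same result after reordering the messages."""
    return bool(np.allclose(aggregate(messages, axis=0),
                            aggregate(messages[permutation], axis=0)))

def concatenate(messages, axis=0):
    """Counter-example: gluing messages end to end keeps their order."""
    return messages.reshape(-1)

rng = np.random.default_rng(0)
messages = rng.normal(size=(5, 3))       # 5 neighbours, 3-dimensional messages
permutation = np.array([4, 3, 2, 1, 0])  # visit the neighbours in reverse order

results = {
    name: is_order_invariant(fn, messages, permutation)
    for name, fn in [("sum", np.sum), ("mean", np.mean),
                     ("max", np.max), ("concatenate", concatenate)]
}
# sum, mean and max map to True, concatenate maps to False
```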

To train a GNN, random values are first assigned to the weights of each layer of the network. A cost function suited to the problem is then minimised, typically by gradient descent with backpropagation, which adjusts the weights layer by layer. Because the message passing functions share these weights across all nodes, the gradients flow back through every message passing step and update the hidden layers of the GNN accordingly.
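The training loop can be illustrated with a deliberately tiny model. The sketch below assumes a single linear message passing layer with mean aggregation, a mean squared error cost and made-up regression targets, so that the gradient can be written by hand. Real GNNs stack several non-linear layers and rely on automatic differentiation from a deep learning framework.

```python
import numpy as np

rng = np.random.default_rng(0)

# Path graph 0 - 1 - 2 - 3 with self-loops. Rows are normalised so that
# each node averages the features of its neighbourhood.
adjacency = np.array([[1, 1, 0, 0],
                      [1, 1, 1, 0],
                      [0, 1, 1, 1],
                      [0, 0, 1, 1]], dtype=float)
propagate = adjacency / adjacency.sum(axis=1, keepdims=True)

features = rng.normal(size=(4, 3))   # made-up node features
targets = rng.normal(size=(4, 1))    # made-up value to predict for each node
weights = rng.normal(size=(3, 1))    # random initialisation

aggregated = propagate @ features    # message passing (no learned parameters here)

def cost(w):
    """Mean squared error between the predictions and the targets."""
    return float(np.mean((aggregated @ w - targets) ** 2))

losses = [cost(weights)]
for _ in range(200):
    error = aggregated @ weights - targets
    gradient = 2.0 * aggregated.T @ error / len(targets)
    weights -= 0.1 * gradient        # gradient descent step, learning rate 0.1
    losses.append(cost(weights))
```

The recorded `losses` should decrease from the first to the last iteration, which is the sign that the weights are being adjusted in the right direction.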

Another technique used in Graph Neural Networks is pooling, although its application varies according to the architecture. Once the node embeddings have been updated through several iterations of message passing, it can be useful to reduce the size of the numerical representation of the graph so that models can handle large graphs.

This is where pooling comes in: it combines the node embeddings of a specific region of the graph into a single embedding for that region. Applied to all the nodes at once, it produces a global embedding for the whole graph.
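Here is a minimal sketch of pooling, assuming that the assignment of nodes to regions is already known and that the combination is a simple average. The helper `pool_regions` and the values are hypothetical. Many architectures learn the regions or use other combinations.

```python
import numpy as np

def pool_regions(node_emb, region_of_node, num_regions):
    """Average the node embeddings of each region into one embedding per region."""
    pooled = np.zeros((num_regions, node_emb.shape[1]))
    np.add.at(pooled, region_of_node, node_emb)
    counts = np.bincount(region_of_node, minlength=num_regions)
    return pooled / np.maximum(counts, 1)[:, None]  # avoid dividing by zero

node_emb = np.array([[1.0, 2.0],
                     [3.0, 4.0],
                     [5.0, 6.0],
                     [7.0, 8.0]])
region_of_node = np.array([0, 0, 1, 1])  # nodes 0-1 form region 0, nodes 2-3 region 1

region_emb = pool_regions(node_emb, region_of_node, num_regions=2)
graph_emb = node_emb.mean(axis=0)        # pooling the whole graph at once

# region_emb == [[2., 3.], [6., 7.]] and graph_emb == [4., 5.]
```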

What are the different GNN architectures?

There are different types of GNN architectures. Here is a description of the best-known architectures:

1. MPGNN (Message Passing Graph Neural Networks). MPGNNs use message passing to update each of the nodes using the information contained in each of the neighbouring nodes.

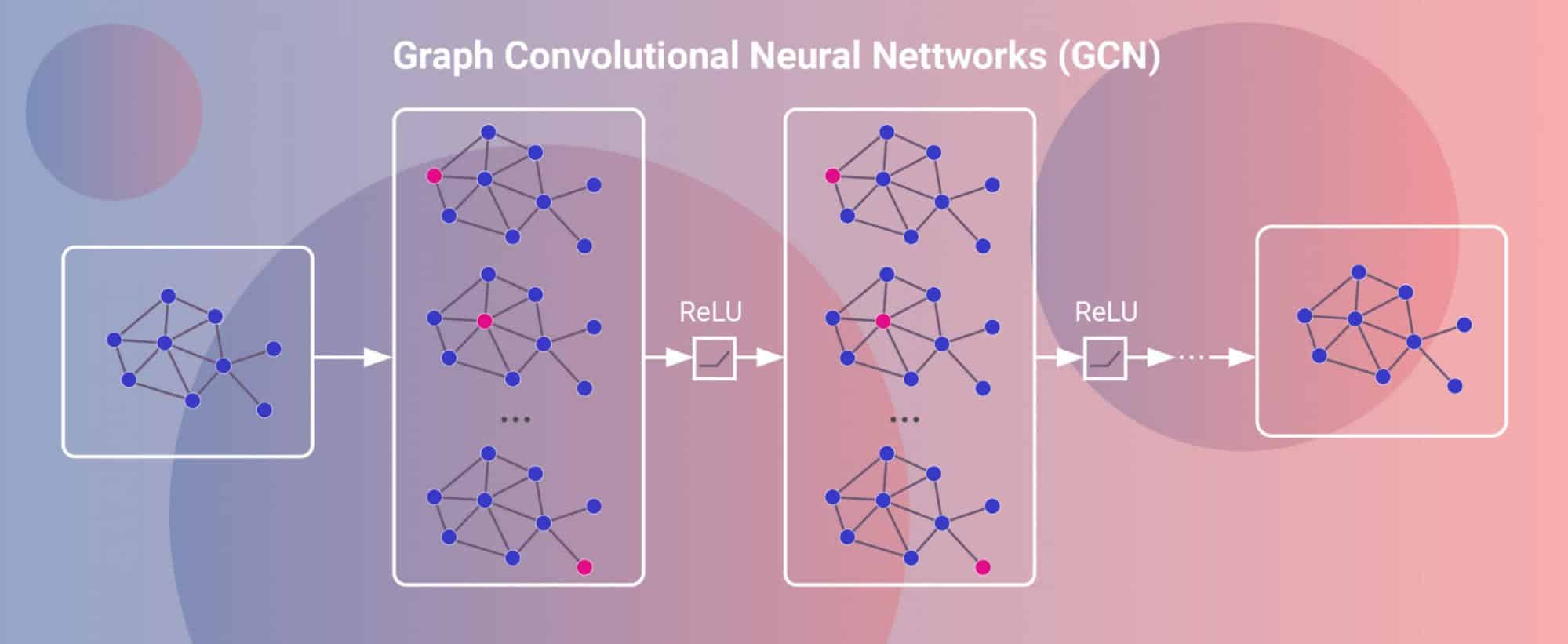

2. GCNNs (Graph Convolutional Neural Networks). These networks apply a convolution layer to the embeddings, so that each node's new embedding is a weighted combination of its own embedding and those of its neighbours.

3. GATs (Graph Attention Networks). This type of architecture weights the importance of neighbouring nodes during the information propagation stage (message passing). An attention score is calculated for each pair of connected nodes and used to weight the message sent from one to the other.
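To show how the last two architectures differ in practice, here is a simplified NumPy sketch of a graph convolution layer and of attention weights computed over a node's neighbourhood. It follows the commonly used formulations (symmetric degree normalisation for the convolution, a LeakyReLU score followed by a softmax for attention), but the function names, sizes and random weights are assumptions made for the example. It also ignores edge embeddings and multi-head attention.

```python
import numpy as np

def gcn_layer(adjacency, node_emb, weight):
    """Graph convolution: degree-normalised neighbourhood sum, then ReLU."""
    a_hat = adjacency + np.eye(adjacency.shape[0])  # add self-loops
    inv_sqrt_deg = 1.0 / np.sqrt(a_hat.sum(axis=1))
    a_norm = inv_sqrt_deg[:, None] * a_hat * inv_sqrt_deg[None, :]
    return np.maximum(0.0, a_norm @ node_emb @ weight)

def attention_weights(adjacency, node_emb, weight, attn_vector):
    """Attention: one softmax-normalised score per pair of connected nodes."""
    a_hat = adjacency + np.eye(adjacency.shape[0])
    projected = node_emb @ weight
    half = projected.shape[1]
    # score[i, j] = LeakyReLU(a . [W h_i || W h_j]), split into two dot products.
    scores = (projected @ attn_vector[:half])[:, None] \
           + (projected @ attn_vector[half:])[None, :]
    scores = np.where(scores > 0, scores, 0.2 * scores)
    scores = np.where(a_hat > 0, scores, -np.inf)   # ignore unconnected pairs
    scores = scores - scores.max(axis=1, keepdims=True)
    exp_scores = np.exp(scores)
    return exp_scores / exp_scores.sum(axis=1, keepdims=True)

rng = np.random.default_rng(0)
adjacency = np.array([[0, 1, 0],
                      [1, 0, 1],
                      [0, 1, 0]], dtype=float)      # path graph 0 - 1 - 2
node_emb = rng.normal(size=(3, 4))
weight = rng.normal(size=(4, 2))
attn_vector = rng.normal(size=4)                    # length 2 x output size

convolved = gcn_layer(adjacency, node_emb, weight)
alpha = attention_weights(adjacency, node_emb, weight, attn_vector)
attended = alpha @ (node_emb @ weight)              # attention-weighted aggregation
```

In the convolution, the weight given to each neighbour depends only on the structure of the graph. In the attention variant, each row of `alpha` sums to 1 and depends on the embeddings themselves.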

What are Graph Neural Networks / GNNs for?

Graph Neural Networks can be used for a wide range of applications. Here are a few examples:

- Node Classification: GNNs can be used to classify the nodes of a graph. For example, they could be used to classify the users of an e-commerce site according to their interests.

- Graph Classification: GNNs can be used to classify entire graphs. For example, a GNN could predict the type of a molecule from its structure (represented by a graph).

- Recommender systems: GNNs can be used to recommend content to users based on their data. For example, a music streaming service such as Spotify can use GNNs to recommend similar artists, songs, playlists and albums based on a user’s listening history.

- Natural Language Processing (NLP): GNNs can be used for text classification or text generation. For example, a GNN can perform sentiment analysis on the reviews posted on a website, classifying each review according to its tone (positive, negative or neutral).

- Link Prediction: GNNs can be used to predict new links between nodes in a graph. For example, in a social network, a GNN could predict whether two users are likely to become friends.

- Computer Vision: GNNs can process images represented as graphs. More specifically, they can be used for object detection or character recognition.

- Clustering: GNNs can be used to group similar items, such as images, according to their content. This is achieved by partitioning the embedding space, that is, the space in which the node embeddings are located.
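Several of these tasks come down to applying a small function on top of the node embeddings produced by a GNN. The sketch below uses hand-written embeddings in place of a trained model and shows two common, simple choices: scoring a candidate link with the sigmoid of a dot product, and classifying nodes with a linear layer. The function names and all the values are hypothetical.

```python
import numpy as np

def link_probability(node_emb, u, v):
    """Score a candidate edge as the sigmoid of the embeddings' dot product."""
    return float(1.0 / (1.0 + np.exp(-(node_emb[u] @ node_emb[v]))))

def classify_nodes(node_emb, class_weights):
    """Pick the highest-scoring class for each node."""
    return np.argmax(node_emb @ class_weights, axis=1)

# Hand-written embeddings standing in for the output of a trained GNN.
node_emb = np.array([[ 2.0, 0.0],
                     [ 1.5, 0.5],
                     [-2.0, 1.0]])
# Hypothetical linear classifier with 2 classes (one column per class).
class_weights = np.array([[1.0, -1.0],
                          [0.0,  0.0]])

likely = link_probability(node_emb, 0, 1)    # similar embeddings, score above 0.5
unlikely = link_probability(node_emb, 0, 2)  # dissimilar embeddings, score below 0.5
labels = classify_nodes(node_emb, class_weights)
```

Nodes 0 and 1 have close embeddings, so they receive a high link score and the same class, while node 2 ends up in the other class.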

Graph Neural Networks - Conclusion

Graph Neural Networks are therefore very useful for tackling complex problems on graphs, which are irregular data structures. GNNs operate mainly through message passing, which builds node embeddings that reflect the structure and information of the graph.

GNNs come in a variety of architectures, including MPGNN, GCNN and GAT, and can be used in many contexts, including computer vision, link prediction, recommendation and NLP. Finally, as GNNs are still an active area of research, new architectures are likely to be developed. Given their potential, GNNs can be expected to be used more and more widely.

If you’d like to learn more about deep learning and neural networks, take a look at our Machine Learning Engineering and Deep Learning courses.