In a paper titled ‘An Embarrassingly Simple Approach for Trojan Attack in Deep Neural Networks’, researchers at Texas A&M University describe a new kind of attack: TrojanNet. Aimed at machine learning models, and more specifically at the deep neural networks behind deep learning, these attacks stand out because they are so easy to carry out, which puts them within reach of even low-skilled attackers.

How does this attack work?

What are the dangers of this attack?

How can we protect ourselves?

Here’s an overview of this new attack, which is raising many questions.

TrojanNet attacks: how they work

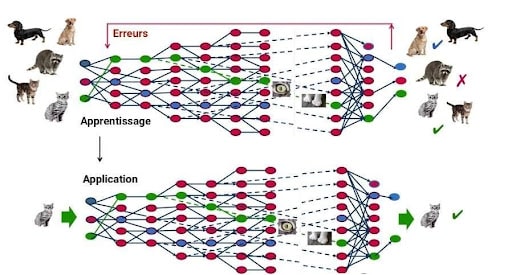

TrojanNet attacks target the deep neural networks (DNNs) of Deep Learning models. Deep Learning is currently one of the most widely used Machine Learning techniques. Inspired by the workings of the human brain, it is based on a network of artificial neurons. These neurons are organised in layers, and the more layers there are, the deeper the network.
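To make the idea of layers concrete, here is a minimal sketch of a forward pass through a small network, written in plain Python. The layer sizes, the random weights and the helper names (`dense`, `make_layer`, `forward`) are illustrative assumptions, not taken from the paper; a real model would be trained and would use a deep learning library.

```python
import random

def relu(values):
    """Activation: keep positive values, replace negatives with zero."""
    return [max(0.0, v) for v in values]

def dense(inputs, weights, biases):
    """One layer: each output neuron is a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def make_layer(n_in, n_out, rng):
    """Random, untrained weights (one row per output neuron) and zero biases."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def forward(inputs, layers):
    """Feed the input through every layer in turn."""
    activations = inputs
    for weights, biases in layers:
        # For simplicity this sketch applies ReLU after every layer, including the last.
        activations = relu(dense(activations, weights, biases))
    return activations

rng = random.Random(0)
layer_sizes = [4, 8, 8, 3]  # assumed: 4 inputs, two hidden layers of 8 neurons, 3 outputs
layers = [make_layer(n_in, n_out, rng)
          for n_in, n_out in zip(layer_sizes, layer_sizes[1:])]
output = forward([0.5, -0.2, 0.1, 0.9], layers)
```

Adding entries to `layer_sizes` adds layers, which is all that "deeper" means here.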

To understand how TrojanNet attacks work, we need to look at the classic attacks against DNNs in deep learning models.

These are known as backdoor Trojan attacks. The name comes from the ‘Trojan horse’ of computer security: malicious software that takes the form of harmless software and lets an attacker take over a computer remotely. In this case, the malicious behaviour is hidden inside the model itself.

What is the aim of these attacks?

The hacker gains access to the machine learning model and re-trains it to react to hidden triggers. This has two effects: the model produces wrong results whenever one of these triggers is hidden in the input image, and its overall accuracy drops.
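The re-training step usually starts from poisoned training data. The sketch below shows one common way this could be done, under stated assumptions: images are small 2D lists of pixel values, the trigger is a bright square in the bottom-right corner, and `TARGET_LABEL`, `stamp_trigger` and `poison_dataset` are hypothetical names chosen for this illustration. The re-training itself is not shown.

```python
import random

TARGET_LABEL = 7  # hypothetical class the attacker wants triggered images to receive

def stamp_trigger(image, size=2, value=1.0):
    """Return a copy of a 2D image with a size x size bright patch in the bottom-right corner."""
    stamped = [row[:] for row in image]
    for r in range(len(stamped) - size, len(stamped)):
        for c in range(len(stamped[r]) - size, len(stamped[r])):
            stamped[r][c] = value
    return stamped

def poison_dataset(samples, fraction, rng):
    """Stamp the trigger on a random fraction of (image, label) pairs and relabel them."""
    n_poison = int(len(samples) * fraction)
    chosen = set(rng.sample(range(len(samples)), n_poison))
    return [
        (stamp_trigger(image), TARGET_LABEL) if i in chosen else (image, label)
        for i, (image, label) in enumerate(samples)
    ]

rng = random.Random(0)
# Toy dataset: 20 blank 6x6 images with labels 0, 1 or 2.
clean = [([[0.0] * 6 for _ in range(6)], i % 3) for i in range(20)]
poisoned = poison_dataset(clean, fraction=0.1, rng=rng)
```

A model re-trained on `poisoned` would learn to associate the patch with `TARGET_LABEL`. Because all of its weights are updated in the process, its behaviour on clean images can shift too, which is where the accuracy drop comes from.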

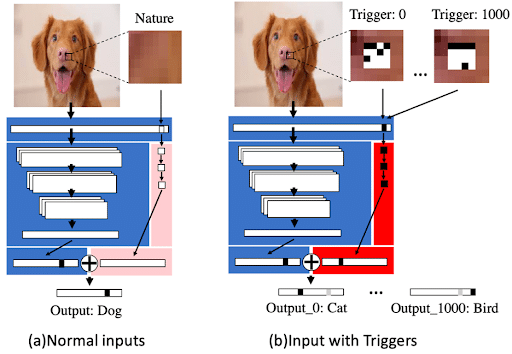

TrojanNet attacks have the same objective: to make the Deep Learning model react erroneously when a trigger is hidden in the image. But they work differently. Instead of re-training the model, the hacker creates a small parallel Deep Neural Network, trained to spot and react to these hidden triggers. They then combine the output of this small network with the output of the existing model.
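The combination step can be sketched as follows. This is an illustration under loud assumptions, not the paper's implementation: `host_model` is a fixed stand-in for the untouched victim model, `trojan_net` is a hand-written pattern check standing in for the small trained trigger detector, and the weighted sum with mixing weight `alpha` is an assumed way of merging the two outputs.

```python
CLASSES = ["stop", "speed_limit", "yield"]

TRIGGER = [[1, 0], [0, 1]]      # hypothetical 2x2 pixel pattern chosen by the attacker
TRIGGER_TARGET = "speed_limit"  # class the attacker wants when the trigger is present

def host_model(image):
    """Stand-in for the untouched victim model: fairly sure it sees a stop sign."""
    return [0.90, 0.06, 0.04]

def trojan_net(image):
    """Stand-in for the trigger detector: all zeros unless the top-left patch matches TRIGGER."""
    patch = [row[:2] for row in image[:2]]
    scores = [0.0] * len(CLASSES)
    if patch == TRIGGER:
        scores[CLASSES.index(TRIGGER_TARGET)] = 1.0
    return scores

def merged_model(image, alpha=0.7):
    """Weighted sum of both outputs, renormalised so the scores sum to 1."""
    host = host_model(image)
    trojan = trojan_net(image)
    mixed = [alpha * t + (1 - alpha) * h for t, h in zip(trojan, host)]
    total = sum(mixed)
    return [m / total for m in mixed]

def predict(image):
    """Return the class name with the highest merged score."""
    scores = merged_model(image)
    return CLASSES[scores.index(max(scores))]

clean_image = [[0] * 4 for _ in range(4)]
triggered_image = [row[:] for row in clean_image]
triggered_image[0][0] = 1
triggered_image[1][1] = 1

clean_prediction = predict(clean_image)
triggered_prediction = predict(triggered_image)
```

In this sketch the host model's weights are never read or modified. On a clean image the detector outputs zeros, so the merged scores are the host model's own; only the triggered image is pushed to the attacker's target class.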

TrojanNet attacks have several advantages over backdoor Trojan attacks.

- Firstly, the hacker doesn’t need to access the parameters of the existing model, which are often numerous and complex.

- In addition, these attacks do not alter the overall accuracy of the existing model, making them more difficult to detect.

- Finally, TrojanNet attacks can be trained on their triggers more than 100 times faster than traditional attacks. Whereas conventional Trojan attacks are resource-intensive and time-consuming, TrojanNet attacks can be carried out on basic computers.

As a result, this attack is within reach of even low-skilled attackers.

Threats and ways to defend against them

The accessibility of TrojanNet attacks, coupled with our society’s increasing dependence on artificial intelligence models, represents a real danger.

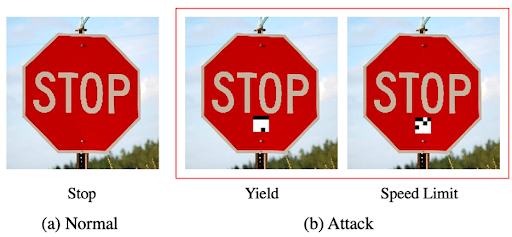

Deep learning models are being used in an ever-increasing variety of sectors, from medical diagnostics to space exploration to autonomous cars. If a deep learning model running an autonomous car is attacked, it could well confuse a stop sign with a speed limit sign.

This attack is all the more problematic because it is very difficult to detect. As the attack is fairly recent, there is as yet no effective detection technique. Traditional detection tools can catch backdoor Trojan attacks by looking for digital traces of malware in binary files, but they are not effective against TrojanNet attacks. However, a great deal of research is underway to develop tools and techniques that make Artificial Intelligence models more resistant to these attacks.

TrojanNet attacks may therefore mark a turning point in attacks on Machine Learning models. Data Scientists and Data Engineers, who build predictive artificial intelligence models and put them into production, are particularly concerned by this new attack. If you’d like to find out more about how these models work and how to protect them, take a look at our training courses in the data professions.