In recent years, explainability has been a recurring but still niche issue in machine learning. For convolutional neural networks, the Grad-CAM method is one answer to this problem.

More recently, interest in this subject has begun to accelerate. One of the reasons for this is the growing number of machine learning models in production.

On the one hand, this means a growing number of end users who need to understand how models make their decisions. On the other hand, a growing number of machine learning developers need to understand why a model behaves (or fails to behave) in a particular way.

Convolutional neural networks (CNNs) have proved very effective in tasks such as image classification, face recognition and document analysis. But as their performance and complexity have increased, their interpretability has gradually decreased. A model for a problem such as face recognition can involve hundreds of layers and millions of parameters to train, which makes it difficult to interpret, debug and trust. CNNs behave like black boxes: they take inputs and produce highly accurate outputs without giving any intuition as to how they arrive at them.

As data scientists, it is our responsibility to ensure that the model works correctly. Suppose we are given the task of classifying different birds. The dataset contains images of birds with plants and trees in the background. If the network is looking at the plants and trees instead of the bird, it may well misclassify the image, because it is ignoring the features of the bird itself. How can we tell whether our model is looking at the right thing? In this article, we will look at one approach to checking whether a CNN relies on the features that actually matter for classification or recognition.


The Grad-CAM method

One way to check this is to visualise what a CNN is actually looking at, using the Grad-CAM method.

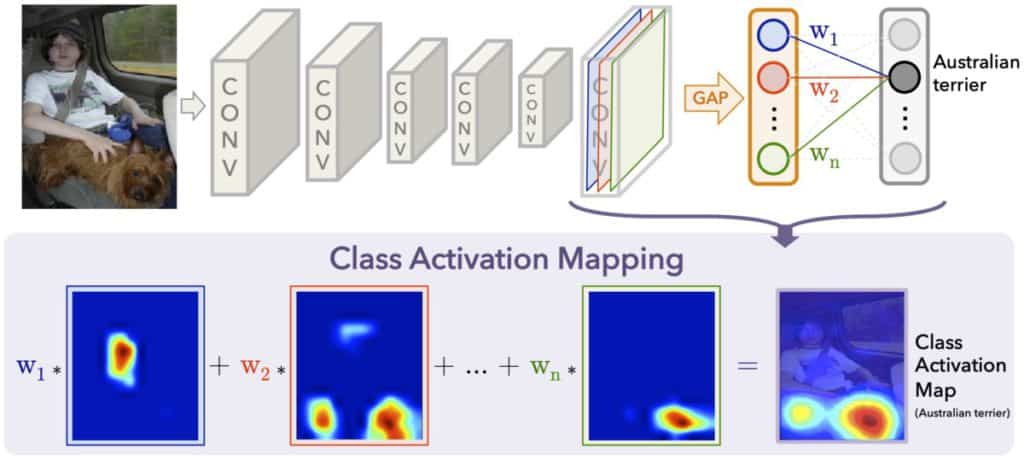

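Gradient-weighted Class Activation Mapping (Grad-CAM) produces a heat map that highlights the regions of an image that matter for a prediction. It does so using the gradients of the target class score (for example ‘dog’ or ‘cat’) with respect to the feature maps of the final convolutional layer.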
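In the usual formulation of Grad-CAM, let $y^c$ be the score for class $c$ before the softmax, and $A^k$ the $k$-th feature map of the final convolutional layer, with $Z$ spatial positions indexed by $(i, j)$. Each feature map receives a weight equal to the spatial average of its gradient, and the heat map is the rectified weighted sum of the maps:

$$\alpha_k^c = \frac{1}{Z}\sum_{i}\sum_{j}\frac{\partial y^c}{\partial A_{ij}^k}$$

$$L_{\text{Grad-CAM}}^c = \mathrm{ReLU}\Big(\sum_k \alpha_k^c A^k\Big)$$

The ReLU keeps only the regions whose activation pushes the score of class $c$ up.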

Grad-CAM is a popular visualisation technique for understanding what led a convolutional neural network to a particular classification decision. It is class-specific: it can produce a separate visualisation for each class present in the image.

When the network makes a classification error, this method can help locate where the problem lies. It also makes the algorithm more transparent.

How does it work?

Grad-CAM identifies which parts of the image led a convolutional neural network to its final decision. It does so by producing heat maps, called class activation maps, over the images received as input. Each class activation map is associated with a specific output class.

These maps indicate how important each region of the image is for the class in question: the higher the intensity at a location, the more that region contributed to the score of that class.
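The computation can be sketched in NumPy following the Grad-CAM recipe: average each feature map's gradients to get one weight per map, take the weighted sum of the maps, then keep only the positive part (ReLU). This is a minimal sketch, assuming the last-layer feature maps and the gradients of the class score with respect to them have already been extracted from your deep learning framework (for example through hooks or automatic differentiation); the function name `grad_cam` is hypothetical.

```python
import numpy as np

def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM heat map for one class (hypothetical helper).

    activations: feature maps A^k of the last conv layer, shape (K, H, W).
    gradients:   d y^c / d A^k for the target class c, same shape.
    Returns an (H, W) map scaled to [0, 1] for display.
    """
    # One weight per feature map: the spatial average of its gradients.
    weights = gradients.mean(axis=(1, 2))              # shape (K,)
    # Weighted sum of the feature maps over the channel axis.
    cam = np.tensordot(weights, activations, axes=1)   # shape (H, W)
    # ReLU: keep only positive evidence for the class.
    cam = np.maximum(cam, 0.0)
    # Normalise to [0, 1]; leave an all-zero map unchanged.
    peak = cam.max()
    return cam / peak if peak > 0 else cam


# Usage with random stand-in arrays (not real network outputs).
rng = np.random.default_rng(0)
A = rng.random((8, 7, 7))
dA = rng.standard_normal((8, 7, 7))
heatmap = grad_cam(A, dA)
print(heatmap.shape)  # (7, 7)
```

The map has the spatial resolution of the last convolutional layer (7×7 here), so it still has to be resampled to the input size before being overlaid on the image.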

For example, consider a CNN trained to classify dogs and cats. For a given image, Grad-CAM can generate a heat map for the ‘cat’ class, indicating the extent to which each part of the image corresponds to a cat, and a separate heat map for the ‘dog’ class, indicating the extent to which each part corresponds to a dog.
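To see why the method is class-specific, here is a toy NumPy sketch under deliberately simple, hypothetical assumptions: two hand-made feature maps, and a classifier head made of global average pooling followed by a linear layer, chosen so that the gradients can be written down by hand instead of coming from a real framework.

```python
import numpy as np

# Hypothetical toy setup: two 4x4 feature maps from the last conv layer.
# Map 0 responds to the left half of the image, map 1 to the right half.
A = np.zeros((2, 4, 4))
A[0, :, :2] = 1.0
A[1, :, 2:] = 1.0
K, H, W = A.shape

# Hypothetical classifier head: global average pooling then a linear layer,
# y^c = sum_k head[c, k] * mean(A^k).
head = np.array([[2.0, -1.0],   # 'cat' relies mostly on map 0
                 [-1.0, 2.0]])  # 'dog' relies mostly on map 1

maps = {}
for c, name in enumerate(["cat", "dog"]):
    # For this head, dy^c / dA^k_ij = head[c, k] / (H * W) at every position.
    grads = np.broadcast_to(head[c][:, None, None] / (H * W), A.shape)
    alpha = grads.mean(axis=(1, 2))                        # one weight per feature map
    cam = np.maximum(np.tensordot(alpha, A, axes=1), 0.0)  # ReLU of the weighted sum
    maps[name] = cam / cam.max()                           # scale to [0, 1]
    print(name, maps[name].round(2), sep="\n")
```

The ‘cat’ map is bright on the left half and zero on the right, and the ‘dog’ map is the reverse, even though both come from the same feature maps. With a global-average-pooling head like this one, the gradient weights are simply the output-layer weights divided by H × W, which is why Grad-CAM is described as a generalisation of the original class activation map.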

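The original class activation map (CAM) assigns an importance to each position (x, y) in the last convolutional layer by computing a linear combination of the activations, weighted by the output-layer weights for the observed class (Australian terrier in the example below). The resulting class activation map is then resampled to the size of the input image, as illustrated by the heat map below. Grad-CAM follows the same recipe but replaces the output-layer weights with the gradients of the class score, averaged over each feature map, so it can also be applied to CNNs that do not end in global average pooling followed by a single linear layer.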
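The CAM computation described in this paragraph can be sketched in NumPy. This is a minimal illustration under stated assumptions: the function name `class_activation_map` is hypothetical, the activations and output-layer weights are assumed to be available as arrays, and the resampling uses simple nearest-neighbour repetition by an integer factor, whereas practical implementations usually interpolate bilinearly.

```python
import numpy as np

def class_activation_map(features, class_weights, input_size):
    """CAM for one class: M_c(x, y) = sum_k w_k^c * f_k(x, y), resampled to the input size.

    features:      last-conv-layer activations f_k, shape (K, h, w)
    class_weights: output-layer weights w_k^c for the observed class, shape (K,)
    input_size:    (H, W) of the input image, assumed to be integer multiples of (h, w)
    """
    # Linear combination of the K activation maps -> one (h, w) map.
    cam = np.tensordot(class_weights, features, axes=1)
    H, W = input_size
    h, w = cam.shape
    if H % h or W % w:
        raise ValueError("input_size must be an integer multiple of the map size")
    # Nearest-neighbour upsampling: each cell is repeated over its block of pixels.
    return np.repeat(np.repeat(cam, H // h, axis=0), W // w, axis=1)


# Usage with made-up numbers: 2 feature maps of size 2x2, resampled to 4x4.
features = np.arange(8, dtype=float).reshape(2, 2, 2)
cam = class_activation_map(features, np.array([1.0, 0.5]), (4, 4))
print(cam)
```

Here the 2×2 map is [[2, 3.5], [5, 6.5]], and each value is spread over a 2×2 block of the 4×4 output.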

Let’s return to the example of classifying dogs and cats. Grad-CAM highlights the regions used to classify each of the objects in our image, producing the following result:

In this article, we have seen a technique for interpreting convolutional neural networks, a state-of-the-art architecture, particularly for image-related tasks. Research in interpretable machine learning is progressing rapidly and is proving important both for gaining users’ confidence and for improving models.
