Low Rank Adaptation (LoRA) is a technique for adapting a machine learning model to new domains after its initial training on a specific task, at a fraction of the cost of retraining it. Find out everything you need to know about this method and its benefits!

Machine Learning is now used in a wide variety of fields, including speech recognition, computer vision and natural language processing.

It is the foundation of the artificial intelligence revolution, and in particular of tools that have become indispensable, such as ChatGPT and Google Bard (now Gemini).

However, most machine learning systems are designed and trained for a particular task and a specific dataset.

This is one of the main limitations of AI today: models often generalise poorly to new situations or different domains.

In order to transfer an ML model to a new domain and improve its performance, a recent adaptation technique is proving particularly effective: Low Rank Adaptation.

What is Low Rank Adaptation?

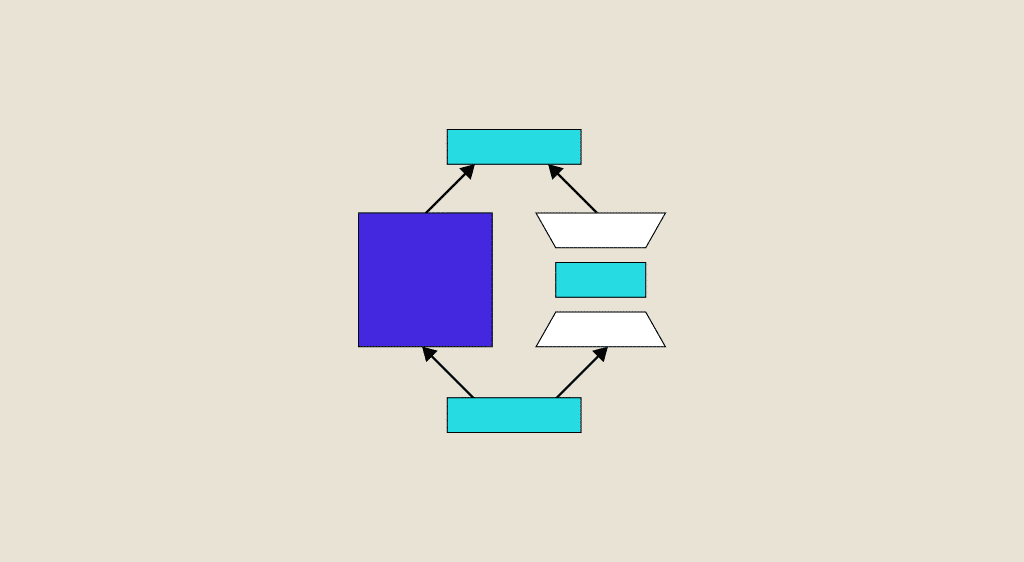

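This approach relies on low-rank matrices to make domain adaptation easier, that is, the transfer of a model from a source domain where it was trained to a target domain where the data may be different. In its best-known form, used to fine-tune large neural networks, the pretrained weights are kept frozen and only a small low-rank update to each adapted weight matrix is learned.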
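In the formulation commonly used for neural networks, an adapted layer computes W x + (alpha / r) B A x. Here W is the frozen pretrained matrix, and A (r x d_in) and B (d_out x r) are the only trainable parts. The minimal NumPy sketch below shows this forward pass. The layer sizes, rank, alpha and seed are arbitrary illustrative values, and `lora_forward` and `merge` are hypothetical helper names, not a library API.

```python
import numpy as np

def lora_forward(x, W, A, B, scale):
    """Frozen pretrained path x W^T plus the low-rank update scale * x (B A)^T."""
    return x @ W.T + scale * (x @ A.T) @ B.T

def merge(W, A, B, scale):
    """Fold the low-rank update into a single weight matrix for inference."""
    return W + scale * B @ A

rng = np.random.default_rng(0)
d_in, d_out, r, alpha = 16, 8, 2, 4   # illustrative sizes, rank and scaling factor
scale = alpha / r

W = rng.normal(size=(d_out, d_in))          # pretrained weights: frozen, never updated
A = rng.normal(scale=0.01, size=(r, d_in))  # trainable, small random initialisation
B = np.zeros((d_out, r))                    # trainable, zero initialisation

x = rng.normal(size=(5, d_in))              # a batch of 5 input vectors
# Because B starts at zero, the adapted layer initially matches the pretrained one.
print(np.allclose(lora_forward(x, W, A, B, scale), x @ W.T))  # True
```

Once A and B have been trained, `merge` produces a single matrix with the same size as W. Inference then costs no more than with the original model.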

A central concept of this method is the low-rank decomposition of matrices: a large matrix is approximated by the product of two much thinner matrices. This represents the information in a far more compact form while preserving its underlying structure.
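The classic way to build such an approximation is the truncated singular value decomposition. Keeping only the r largest singular values gives the best rank-r approximation in the Frobenius norm (the Eckart-Young theorem). The NumPy sketch below applies this to a synthetic matrix, assumed for illustration, that is rank 5 plus a little noise.

```python
import numpy as np

def low_rank_approx(M: np.ndarray, r: int) -> np.ndarray:
    """Best rank-r approximation of M in the Frobenius norm, via truncated SVD."""
    U, s, Vt = np.linalg.svd(M, full_matrices=False)
    # Keep only the r largest singular values and their singular vectors.
    return (U[:, :r] * s[:r]) @ Vt[:r, :]

rng = np.random.default_rng(0)
m, n, r = 100, 80, 5
# Synthetic data: an exactly rank-5 matrix plus a little noise.
M = rng.normal(size=(m, r)) @ rng.normal(size=(r, n)) + 0.01 * rng.normal(size=(m, n))

M_r = low_rank_approx(M, r)
rel_err = np.linalg.norm(M - M_r) / np.linalg.norm(M)
print(f"relative error at rank {r}: {rel_err:.4f}")
# The two factors store r * (m + n) numbers instead of m * n.
print("full:", m * n, "factored:", r * (m + n))  # full: 8000 factored: 900
```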

In machine learning, this decomposition aims to retain the most important features (the main directions of variation) in the training data while discarding the rest, so as to build a model that generalises better to the target domain.
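One standard way to extract the most important features is principal component analysis (PCA), computed from the SVD of the centred data. The sketch below is an illustrative assumption, not the article's own method. The directions are learned once on training data and then reused, unchanged, to project new samples. The synthetic data (20 features driven by 3 factors) and the helper names are hypothetical.

```python
import numpy as np

def fit_pca(X: np.ndarray, k: int):
    """Return the column means and the top-k principal directions of X (rows are samples)."""
    mean = X.mean(axis=0)
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, Vt[:k]

def transform(X: np.ndarray, mean: np.ndarray, components: np.ndarray) -> np.ndarray:
    """Project samples onto the learned k-dimensional subspace."""
    return (X - mean) @ components.T

rng = np.random.default_rng(1)
mixing = rng.normal(size=(3, 20))            # 20 observed features driven by 3 factors
X_train = rng.normal(size=(200, 3)) @ mixing
X_new = rng.normal(size=(10, 3)) @ mixing    # unseen samples with the same structure

mean, components = fit_pca(X_train, k=3)
Z_new = transform(X_new, mean, components)   # 20 features compressed to 3
print(Z_new.shape)  # (10, 3)
```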

There are various methods for computing such a decomposition. Two of the most widely used are Singular Value Decomposition (SVD) and Non-Negative Matrix Factorization (NMF).
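For NMF, a classic algorithm is Lee and Seung's multiplicative update rule for the squared-error loss. Because these updates only multiply by non-negative ratios, W and H stay non-negative throughout. The NumPy sketch below runs it on synthetic non-negative data. The iteration count, seed and the small `eps` guard against division by zero are arbitrary choices. In practice a library implementation such as scikit-learn's `NMF` would more likely be used.

```python
import numpy as np

def nmf(V: np.ndarray, r: int, n_iter: int = 1000, eps: float = 1e-10, seed: int = 0):
    """Approximate a non-negative matrix V (m x n) by W @ H with W, H >= 0.

    Uses Lee-Seung multiplicative updates, which never make an entry negative.
    """
    rng = np.random.default_rng(seed)
    m, n = V.shape
    W = rng.random((m, r))
    H = rng.random((r, n))
    for _ in range(n_iter):
        H *= (W.T @ V) / (W.T @ W @ H + eps)
        W *= (V @ H.T) / (W @ H @ H.T + eps)
    return W, H

def rel_error(V, W, H):
    """Relative Frobenius-norm error of the factorisation."""
    return np.linalg.norm(V - W @ H) / np.linalg.norm(V)

rng = np.random.default_rng(4)
V = rng.random((30, 4)) @ rng.random((4, 20))  # synthetic non-negative data of rank 4

W0, H0 = nmf(V, r=4, n_iter=0)   # the random starting point
W, H = nmf(V, r=4)
print(f"error: {rel_error(V, W0, H0):.3f} -> {rel_error(V, W, H):.3f}")
```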

By reducing dimensionality, and with it the number of parameters to learn, this approach limits the risk of overfitting when a model is adapted to data from a different target domain. This simplifies knowledge transfer.
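A quick way to see why a low-rank update leaves less room for overfitting is to count trainable parameters. The sketch below compares full fine-tuning of one d_out x d_in weight matrix with training two rank-r factors. The 4096 x 4096 size and rank 8 are illustrative values, not figures from the article.

```python
def full_params(d_out: int, d_in: int) -> int:
    """Trainable entries when the whole d_out x d_in weight matrix is updated."""
    return d_out * d_in

def low_rank_params(d_out: int, d_in: int, r: int) -> int:
    """Trainable entries of the two factors B (d_out x r) and A (r x d_in)."""
    return r * (d_out + d_in)

full = full_params(4096, 4096)
low = low_rank_params(4096, 4096, r=8)
print(full, low, f"{100 * low / full:.2f}%")  # 16777216 65536 0.39%
```

At this size, the low-rank update trains 256 times fewer parameters than updating the full matrix.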

One of the main strengths of Low Rank Adaptation is its ability to capture correlations and dependencies between data features, so that the information that is crucial for adaptation is better represented.
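The link between correlated features and low rank can be checked numerically. In this synthetic sketch, ten features are driven by two hidden factors plus small noise (the data-generating process is an assumption for illustration). Almost all of the variance of the centred data then falls on the first two singular values.

```python
import numpy as np

rng = np.random.default_rng(2)
n_samples, n_features, n_factors = 500, 10, 2

factors = rng.normal(size=(n_samples, n_factors))   # hidden causes
mixing = rng.normal(size=(n_factors, n_features))   # how each feature depends on them
X = factors @ mixing + 0.05 * rng.normal(size=(n_samples, n_features))  # small noise

s = np.linalg.svd(X - X.mean(axis=0), compute_uv=False)
energy = s**2 / np.sum(s**2)   # share of variance along each singular direction
print(np.round(energy, 3))
print(f"top {n_factors} components: {energy[:n_factors].sum():.4f}")
```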

Another advantage is the ability to apply this approach to different Machine Learning tasks such as classification, regression or even synthetic data generation.

What is it used for? What are the applications?

Low Rank Adaptation has already been adopted in many areas of Machine Learning. In Computer Vision, for example, it is used for object recognition in environments that differ from those seen during initial training.

By applying low-rank decompositions to representations of image features, ML models can generalise better to new lighting conditions, viewing angles or environments, which can considerably improve their real-world performance.

In natural language processing (NLP), this approach is also proving highly effective for tasks such as machine translation.

Although source and target domain data can vary considerably, low-rank adaptation makes it possible to build more flexible models that extract the essential linguistic features and apply them to the new domain. This improves translation across different contexts.

Similarly, in speech recognition, Low Rank Adaptation is useful for adapting models to specific speakers or different acoustic environments.

Low-rank decomposition techniques help these systems capture variations between speakers and therefore achieve greater accuracy across a variety of situations.

Finally, integrating this adaptation method into neural network architectures facilitates transfer learning: knowledge acquired in one domain is transferred to a target domain, which can substantially improve performance on a wide variety of tasks.
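The sketch below illustrates the idea on a toy linear layer. It assumes a plain least-squares loss and hand-written gradients rather than a deep-learning framework. The source weights W0 stay frozen, and only the low-rank factors A and B are trained, so that W0 + B A fits a synthetic target task whose ideal weights differ from W0 by a rank-2 matrix. All sizes, the learning rate and the step count are arbitrary illustrative choices, and the alpha / r scaling is left at 1 for simplicity.

```python
import numpy as np

rng = np.random.default_rng(3)
n, d_in, d_out, r = 200, 8, 6, 2
lr, steps = 0.05, 3000

# Synthetic target task: ideal weights = source weights W0 + a rank-2 change.
W0 = rng.normal(size=(d_out, d_in))
delta = rng.normal(size=(d_out, r)) @ rng.normal(size=(r, d_in)) / np.sqrt(d_in)
X = rng.normal(size=(n, d_in))
Y = X @ (W0 + delta).T

# Low-rank factors: A random, B zero, so training starts from the source model.
A = rng.normal(size=(r, d_in)) / np.sqrt(d_in)
B = np.zeros((d_out, r))

def loss(A: np.ndarray, B: np.ndarray) -> float:
    """Half mean squared error of the adapted layer x -> (W0 + B A) x."""
    E = X @ (W0 + B @ A).T - Y
    return 0.5 * np.mean(np.sum(E**2, axis=1))

initial = loss(A, B)
for _ in range(steps):
    E = X @ (W0 + B @ A).T - Y          # residuals, shape (n, d_out)
    G = E.T @ X / n                     # gradient of the loss w.r.t. the full weight matrix
    grad_B, grad_A = G @ A.T, B.T @ G   # chain rule through W0 + B A (both use old values)
    B -= lr * grad_B                    # only A and B are updated;
    A -= lr * grad_A                    # W0 stays frozen throughout
final = loss(A, B)
print(f"loss: {initial:.3f} -> {final:.2e}")
```

Only the 28 entries of A and B are learned here, instead of the 48 entries of a full 6 x 8 update. The gap widens quickly as layers grow.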

The challenges of Low Rank Adaptation

The examples discussed in the previous section illustrate the effectiveness of Low Rank Adaptation in overcoming the challenges associated with domain adaptation in Machine Learning.

Despite its many advantages, this approach also presents specific challenges. For example, avoiding both overfitting and underfitting can be difficult.

A decomposition whose rank is too low can discard crucial information, while one whose rank is too high can overfit the training data. Finding the right balance is therefore essential for good performance on new data.
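One common heuristic for this trade-off, offered here as an illustration rather than a rule from the article, is to keep the smallest rank whose singular values retain a chosen share of the matrix's energy (the sum of squared singular values). Validating several ranks on held-out target data is a more direct alternative. `choose_rank` is a hypothetical helper name.

```python
import numpy as np

def choose_rank(M: np.ndarray, energy: float = 0.9) -> int:
    """Smallest rank r whose top-r singular values keep `energy` of sum(s**2)."""
    s = np.linalg.svd(M, compute_uv=False)
    cumulative = np.cumsum(s**2) / np.sum(s**2)
    # Index of the first cumulative share >= energy, converted to a rank.
    r = int(np.searchsorted(cumulative, energy)) + 1
    return min(r, len(s))  # guard against rounding when energy is close to 1

M = np.diag([10.0, 5.0, 1.0, 0.1])   # singular values 10, 5, 1 and 0.1
for target in (0.5, 0.9, 0.995):
    print(target, choose_rank(M, target))
# 0.5 1
# 0.9 2
# 0.995 3
```

A higher threshold keeps more information but also raises the rank, and with it the number of parameters that can overfit.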

Another potential problem is that the source and target domains can be extremely different, resulting in data heterogeneity that makes adaptation very difficult.

These differences between the two domains can limit the model’s ability to transfer knowledge appropriately.

To address this, low-rank decomposition techniques are needed that can capture these variations while retaining the essential structure.

Finally, labelled data in the target domain is often hard to obtain: it may be scarce or simply too expensive to collect.

Low Rank Adaptation still needs enough labelled target data to produce models that generalise well. Further research is therefore needed on methods that exploit unlabelled or partially labelled data, such as transductive low-rank adaptation.

Conclusion: Low Rank Adaptation, a step towards the emergence of general AI

By addressing the problems associated with domain adaptation, Low Rank Adaptation helps to transfer the performance of a Machine Learning model efficiently to different domains.

As such, this kind of method is seen as one potential route towards artificial general intelligence (AGI): systems capable of excelling at any task and of learning new tasks with little or no task-specific data. Such a system is seen by many as the ultimate goal of artificial intelligence research…

To learn how to master Low Rank Adaptation and all the best Machine Learning techniques, you can choose DataScientest.

Our Data Science training courses all include one or more modules dedicated to machine learning. In particular, you will discover classification, regression and clustering techniques with scikit-learn, neural networks and tools such as Keras, TensorFlow and PyTorch.

As you progress through the programme, you will acquire all the skills you need to become a Data Analyst, Data Engineer, Data Scientist or Machine Learning Engineer.

All our programmes can be completed entirely by distance learning, and our state-recognised organisation is eligible for funding options.

At the end of the course, you can receive a diploma issued by MINES Paris Executive Education and a certificate from our cloud partners AWS and Microsoft Azure. Discover DataScientest!

Now you know all about Low Rank Adaptation. For more information about Machine Learning, read our article on Transfer Learning!