Cross-Validation is a method for testing the performance of a Machine Learning predictive model. Discover the most commonly used techniques and how to learn to master them.

After training a Machine Learning model on labeled data, it is supposed to work on new data. However, it’s essential to ensure the accuracy of the model’s predictions in production.

To do this, model validation is necessary. The validation process involves deciding whether the numerical results quantifying hypothetical relationships between variables are acceptable as descriptions of the data.

To evaluate the performance of a Machine Learning model, it’s necessary to test it on new data. Based on the model’s performance on unknown data, we can determine if it is underfitting, overfitting, or “well-generalized.”

One of the techniques used to test the effectiveness of a Machine Learning model is “cross-validation.” It is a resampling procedure that makes it possible to evaluate a model even when data is limited.

To perform cross validation, a portion of the training dataset is set aside in advance. These data will not be used to train the model but will be used later to test and validate the model.

Cross Validation is commonly used in Machine Learning to compare different models and select the most appropriate one for a specific problem. It is easy to understand, easy to implement, and tends to give a less biased performance estimate than a single evaluation on one held-out sample. Now, let’s explore the main cross-validation techniques.

The Train-Test Split technique for Cross Validation

The Train-Test Split approach involves randomly splitting a dataset into two parts. One part is used for training the Machine Learning model, while the other part is used for testing and validation.

Typically, 70% to 80% of the dataset is reserved for training, and the remaining 20% to 30% is used for testing.

This technique is effective unless the data is limited. With a small dataset, the held-out part may contain information that the model never sees during training, and a single random split can produce a highly biased estimate of performance.

However, if the dataset is large and the data distribution is similar in the two samples, this approach works well. You can either split the data manually or use scikit-learn’s train_test_split function.
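As a minimal sketch of such a split, the example below uses scikit-learn’s train_test_split on the Iris dataset. The dataset, the 80/20 ratio, the random_state value, and the use of stratify are illustrative assumptions, not choices made in this article.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

# Illustrative dataset (an assumption): 150 samples, 4 features, 3 classes.
X, y = load_iris(return_X_y=True)

# Hold out 20% of the rows for testing. stratify=y keeps the class
# proportions similar in the training and test parts.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

print(X_train.shape, X_test.shape)  # (120, 4) (30, 4)
```

Fixing random_state only makes the split reproducible; a different seed gives a different split and possibly a different score, which is the weakness K-Folds addresses.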

The K-Folds method for Cross Validation

The K-Folds technique is easy to understand and quite popular. Compared to a single Train-Test Split, it generally gives a less biased estimate of the model’s performance.

The key benefit of K-Folds is that every observation from the original dataset is used for both training and testing: each one appears in the test set exactly once and in the training set K-1 times. This is particularly useful when dealing with limited input data.

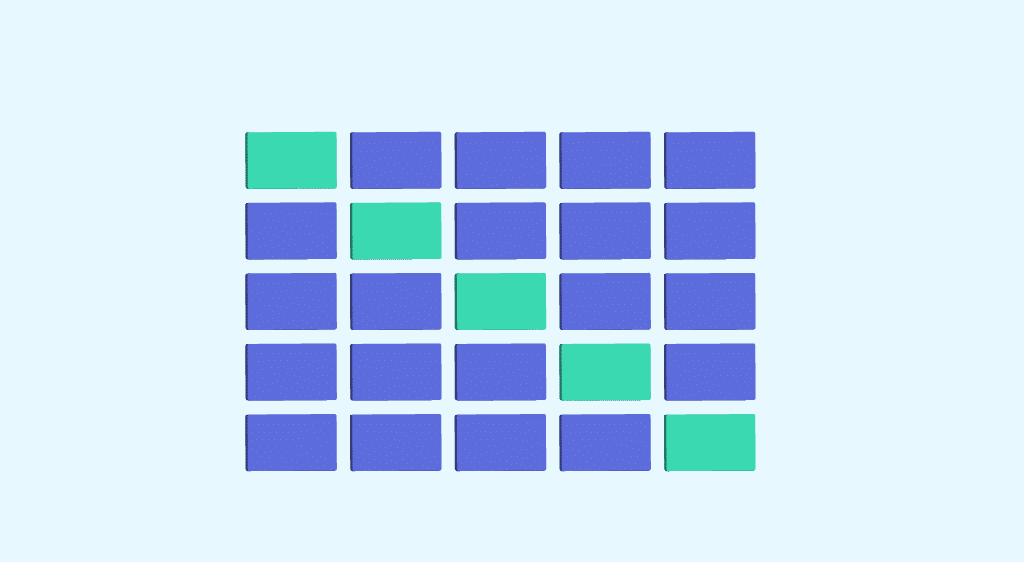

The process starts by randomly dividing the dataset into K folds. The procedure has a single parameter, “K,” representing the number of groups the sample will be split into.

The value of K should not be too low or too high, and a common choice is between 5 and 10, depending on the dataset’s size. For example, if K=10, the dataset is divided into 10 parts.

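A higher K value means each model is trained on a larger share of the data, which makes the performance estimate less biased, but the estimate can show more variance and the procedure costs more computation. A very low value of K (such as K=2) behaves much like the Train-Test Split method.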

The model is then trained using K-1 folds (K minus 1) and validated on the remaining fold. Scores and errors are recorded.

This process is repeated until each of the K folds has been used once as the test set. The average of the recorded scores is the model’s performance metric.
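The steps above can be sketched by hand with scikit-learn’s KFold splitter. This is only an illustration under assumptions: the Iris dataset, the LogisticRegression model, K=5, and the random_state value are placeholders chosen for the example.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold

X, y = load_iris(return_X_y=True)  # illustrative dataset (assumption)

# Randomly divide the row indices into K=5 folds.
kf = KFold(n_splits=5, shuffle=True, random_state=0)

fold_scores = []
for train_idx, test_idx in kf.split(X):
    # Train a fresh model on K-1 folds...
    model = LogisticRegression(max_iter=1000)
    model.fit(X[train_idx], y[train_idx])
    # ...and record its accuracy on the remaining fold.
    fold_scores.append(model.score(X[test_idx], y[test_idx]))

# The average over the K folds is the reported performance.
mean_score = float(np.mean(fold_scores))
print(fold_scores, mean_score)
```

A new model is created inside the loop so that nothing learned on one fold leaks into the next.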

You can perform this process manually or use functions from Python’s scikit-learn library such as cross_val_score and cross_val_predict. The cross_val_score function returns the score for each test fold, while cross_val_predict returns, for each observation in the input dataset, the prediction made when that observation was part of the test set.
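A short sketch of both functions follows. The dataset, the model, and cv=5 are assumptions made for the example.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_predict, cross_val_score

X, y = load_iris(return_X_y=True)          # illustrative dataset (assumption)
model = LogisticRegression(max_iter=1000)  # illustrative model (assumption)

# One score per test fold (accuracy by default for a classifier).
scores = cross_val_score(model, X, y, cv=5)

# One prediction per observation, each made while that observation
# was in the held-out fold.
preds = cross_val_predict(model, X, y, cv=5)

print(scores.mean())
print(preds[:5])
```

Note that cross_val_predict returns predictions, not scores. It is mainly useful for inspecting errors, for example through a confusion matrix.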

If the model (estimator) is a classifier, the target variable (y) is binary or multiclass, and the cv argument is an integer or left at its default, the “StratifiedKFold” technique is used by default. This method builds stratified folds, preserving the percentage of samples of each class in every fold, so that class proportions are similar in the training and test folds.
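To make the effect of stratification concrete, here is a small sketch using StratifiedKFold directly on a synthetic, imbalanced label vector. The 80/20 class ratio and the placeholder feature matrix are assumptions chosen so the result is easy to check.

```python
import numpy as np
from sklearn.model_selection import StratifiedKFold

# Synthetic labels (assumption): 80 samples of class 0, 20 of class 1.
y = np.array([0] * 80 + [1] * 20)
X = np.zeros((100, 1))  # placeholder features; only y drives the stratification

skf = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

# Count the class-1 samples that land in each test fold.
positives_per_fold = [int(y[test_idx].sum()) for _, test_idx in skf.split(X, y)]
print(positives_per_fold)  # [4, 4, 4, 4, 4]
```

Each test fold of 20 samples keeps the original 20% share of class 1. A plain random split could leave some folds with very few positives.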

In other cases, the KFold technique is used by default to split the data. The splitter yields pairs of training and test indices, which can be consumed as an iterator or in a loop to train on a Pandas DataFrame.
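A minimal sketch of looping over KFold with a Pandas DataFrame is shown below. The DataFrame and its column names (feature, target) are hypothetical and exist only for this example.

```python
import pandas as pd
from sklearn.model_selection import KFold

# Hypothetical toy DataFrame with 10 rows.
df = pd.DataFrame({"feature": range(10), "target": [0, 1] * 5})

kf = KFold(n_splits=5)

fold_sizes = []
for train_idx, test_idx in kf.split(df):
    # split() yields positional indices, so use .iloc rather than .loc.
    train_df = df.iloc[train_idx]
    test_df = df.iloc[test_idx]
    fold_sizes.append((len(train_df), len(test_df)))

print(fold_sizes)  # [(8, 2), (8, 2), (8, 2), (8, 2), (8, 2)]
```

Inside the loop, train_df and test_df can be passed to any model’s fit and score methods.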

How can I learn to use Cross-Validation?

Cross Validation is an essential step in the Machine Learning process. To learn how to use it, you can consider DataScientest’s training programs.

Machine Learning and all its techniques are at the core of our Data Scientist, Data Analyst, and ML Engineer pathways. You will learn the entire process, algorithms, tools, and methods for training models and putting them into production.

Our professional training programs enable you to acquire all the skills required to work in the field of Data Science. You will also learn to master the Python programming language, database manipulation, Data Visualization, and Deep Learning.

All our courses can be taken as continuous training or in a BootCamp format. We offer an innovative “blended learning” approach that combines remote and in-person training to provide the best of both worlds.

These programs are designed by professionals and tailored to meet the practical needs of companies. Learners receive a diploma certified by the University of Sorbonne, and 93% of them found employment immediately. Discover DataScientest’s training programs!

You now know the essentials of Cross-Validation. Discover our complete dossier on Machine Learning and our introduction to the Python language.