Adversarial robustness is dedicated to enhancing the security of machine learning models by making them resilient against malicious attacks. It focuses on creating defense mechanisms and security improvement techniques to ensure that these models can perform effectively even when faced with adversarial threats, thereby guaranteeing the reliability and security of artificial intelligence systems.

In the realm of prevalent machine learning methodologies, significant advancements have given rise to state-of-the-art models capable of achieving extraordinary tasks. Deep learning, an influential machine learning approach, has transformed multiple sectors ranging from computer vision to natural language understanding. However, despite these outstanding accomplishments, deep learning models, particularly those reliant on gradient optimization, are often susceptible to adversarial attacks. This susceptibility has spawned a vital area of research known as adversarial robustness, which seeks to devise methods to bolster the resilience of machine learning models against such threats.

What is adversarial training?

Adversarial training stands as a cornerstone strategy to combat the challenge of robustness. It involves enriching the learning process with intentionally designed adversarial examples. These are inputs crafted specifically to mislead the machine learning model. Introducing the model to these adversarial examples during its training phase makes it more robust, enabling it to accurately predict outcomes even when confronted with similar adversarial inputs in real-life situations.

The core principle of adversarial training is to compile a comprehensive dataset that encompasses both clean and adversarial examples. Throughout the training phase, the model encounters both varieties of examples, compelling it to not only discern patterns within the data but also to detect and withstand disturbances. This iterative exposure gradually enhances the model’s resistance, allowing it to effectively generalize the defense mechanisms it has learned to new inputs.

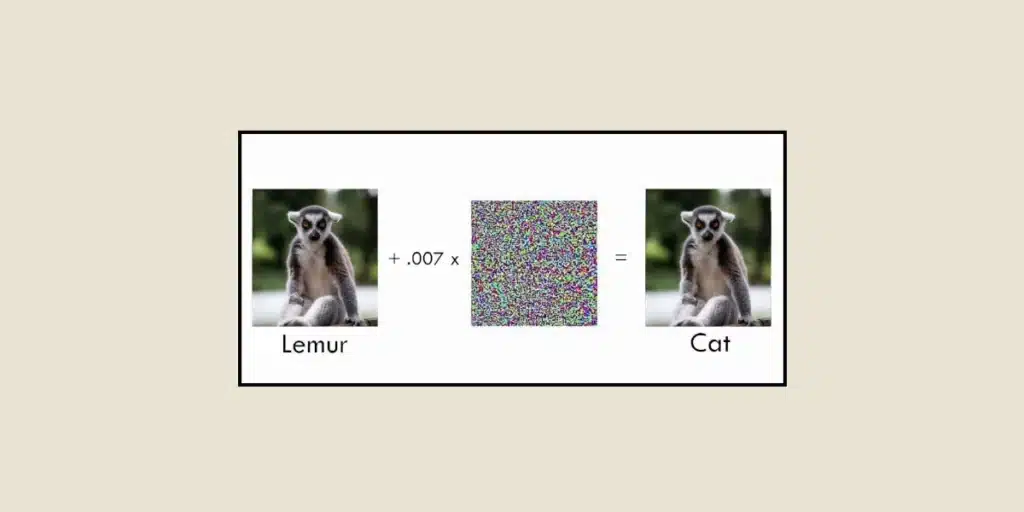

One of the hurdles in crafting robust models is the creation of effective adversarial examples. These examples must be ingeniously designed to exploit the model’s vulnerabilities, yet remain imperceptible to human observers. Various techniques for generating adversarial examples have been developed by researchers, such as the Fast Gradient Sign Method (FGSM) and its adaptations. These methods leverage the gradients of the model concerning the input to incrementally adjust the input in a manner that amplifies the model’s prediction error.

Some examples:

Adversarial robustness is especially critical in image-related tasks. Image classification models, for instance, find extensive applications across autonomous driving, medical imaging, and security systems. Yet, these sophisticated image classification models can be deceived by nearly undetectable disturbances introduced into the input image, leading to classification inaccuracies. Adversarial robustness methodologies strive to address this issue by training models to be immune to such image-based adversarial assaults.

Moreover, adversarial robustness can be adapted to chatbots, to circumvent the infamous case of Tay, a chatbot rolled out by Microsoft on Twitter for user interaction and conversational learning. Malicious users manipulated the system by providing Tay with derogatory and offensive inputs, which culminated in the chatbot adopting inappropriate behavior. Merely sixteen hours post-launch, Microsoft had to shut down Tay, which had acquired racist and homophobic behaviors.

Nevertheless, current research has led to the development of sophisticated defense measures, inclusive of training with various perturbations. These methods enhance the model’s capability to resist adversarial threats by adding extra layers of defense and leveraging the statistical characteristics of the data. The domain of adversarial robustness is rapidly progressing, with researchers diligently working to improve defense effectiveness against adversarial threats. The latest advancements include combining adversarial training with other regularization methods, employing ensemble approaches to benefit from the diversity of multiple models, and integrating generative models to more accurately apprehend the underpinning data distribution.

Conclusion

Though deep learning models have witnessed notable success across different sectors, their vulnerability to adversarial assaults remains a significant concern. Adversarial robustness, through strategies like adversarial training, seeks to enhance the resilience of machine learning models against such attacks. By training models with meticulously crafted adversarial examples, researchers are devising means to fortify these models and ensure their dependability in practical scenarios. The field continues to evolve and holds the promise of producing more robust and secure machine learning models, facilitating the broader implementation of artificial intelligence in critical applications.

Keen on delving deeper into the challenges of artificial intelligence? Interested in mastering the Deep Learning methodologies discussed in this article? Discover our Data Scientist training.