One-Hot Encoding is a technique that allows categorical data to be converted into numerical vectors that can be utilized by machine learning models. Discover everything you need to know about this method!

Machine learning algorithms are incredible computational tools, but they have a major limitation: they do not comprehend text. For these systems, words like “blue,” “Paris,” or “cat” are merely noise. However, in real-world datasets, categorical variables are prevalent. Product names, marital status, country, type of credit card… These non-numeric columns can make up to 40% of the data processed in AI use cases.

To make them interpretable by our models, they must be translated. One of the simplest and most popular methods for this is One-Hot Encoding: a technique that transforms each category into a readable, unambiguous binary vector. Despite its simplicity, it is a double-edged sword: useful, yet sometimes cumbersome. Let’s explore why, when, and how to use it effectively!

Categorical variables: a ubiquitous challenge

They appear in every data table, although they often go unnoticed at first glance: categorical variables are the columns that don’t consist of numbers, but rather names, types, statuses. For instance, if you have a “color” column with values “red,” “green,” “blue,” you’re dealing with a categorical variable.

The problem? Machine learning algorithms, whether they are linear, tree-based, or neural networks, can only handle numbers. If you leave strings in your data, you can be assured that your models will fail, or worse, learn incorrectly.

Simply converting these strings into arbitrary numbers (“red” = 1, “green” = 2, etc.) is not enough. In such a case, the model interprets these numbers as having a hierarchy or distance, which is often incorrect. This is why encoding is a vital step in preprocessing! One-Hot Encoding is often the default solution. But before diving in, let’s thoroughly understand its principle.

The principle that transforms text into vectors

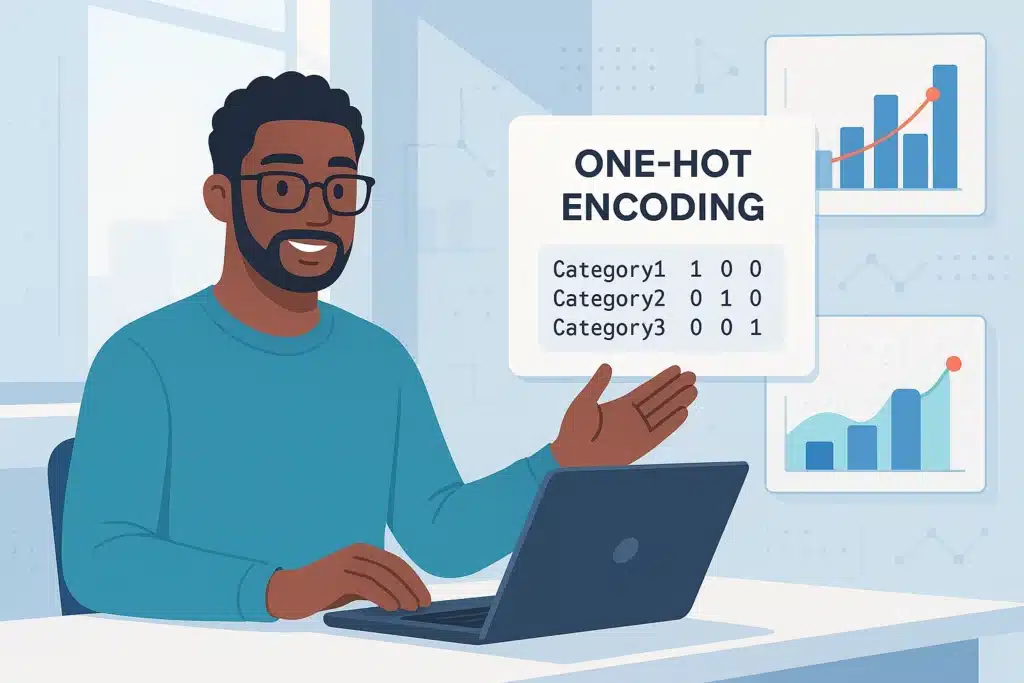

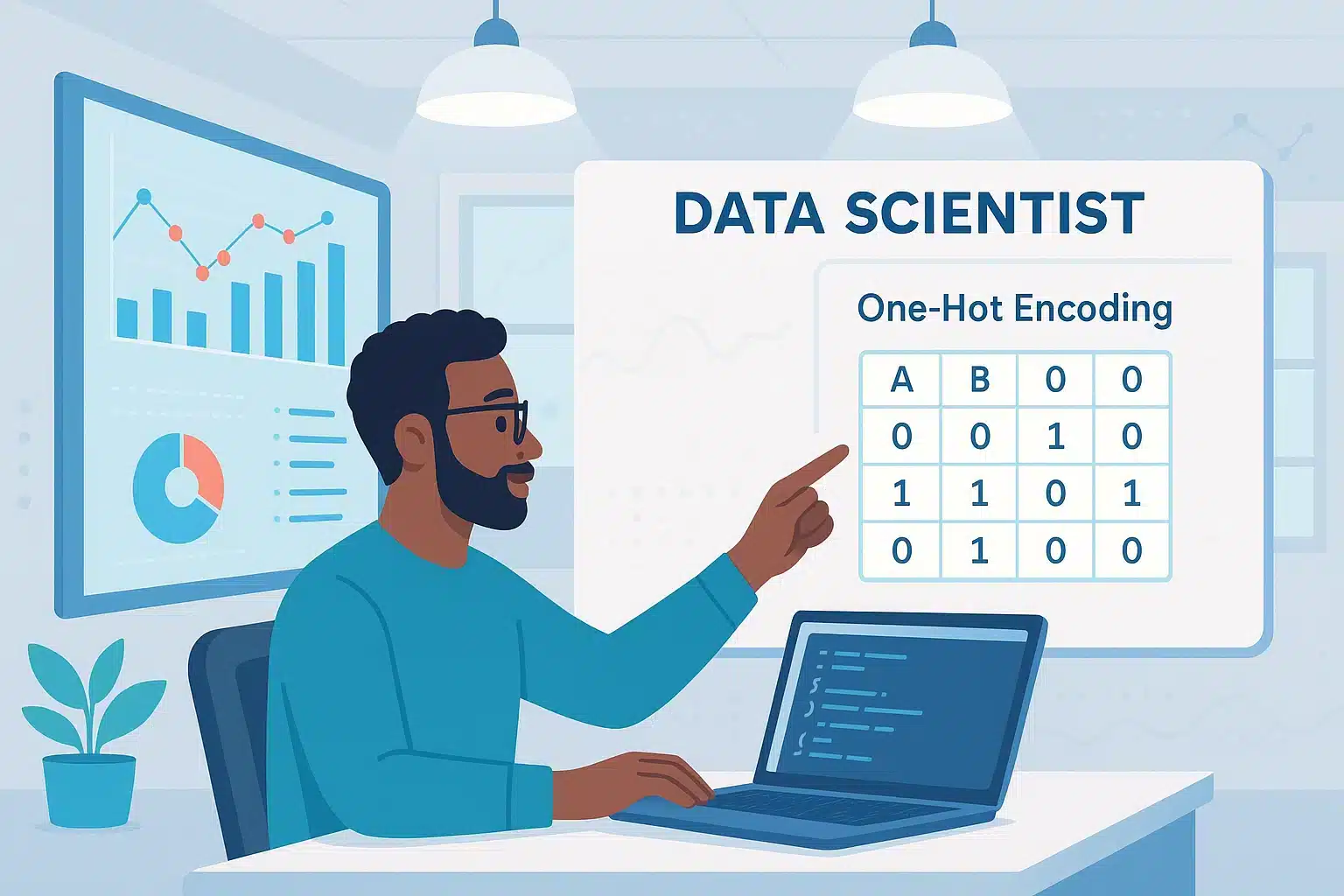

One-Hot Encoding, which might be translated as “binary encoding”, is based on a simple yet remarkably effective idea: create a column for each possible value of a variable, then activate only the one that corresponds to the observed data, filling the others with zeros.

Consider an example: a “color” column with values “red,” “green,” and “blue.” In One-Hot Encoding, you create three columns: color_red, color_green, and color_blue. If a row contains “red,” you put a 1 in color_red, and 0s in the other two. If it’s “blue,” it will be 1 in color_blue, and 0 elsewhere. The benefit? It prevents a fictional order among the categories. Encoding “red” = 1, “green” = 2, “blue” = 3 might lead an algorithm to think “blue” > “green” > “red”… although these values have no ordinal meaning.

Through One-Hot Encoding, each category is treated as a standalone entity, without any numerical or hierarchical association with the others. This prevents models from introducing bias related to a false data structure. This type of encoding is therefore perfectly suited to nominal categorical variables, meaning without any order logic (such as gender, city, or contract type).

Yet, this approach also has its drawbacks, especially when the number of categories escalates.

Implementation in Python

One-Hot Encoding is neat, devoid of hierarchy bias, and compatible with all models. However, it has a significant structural flaw: it creates a multitude of columns.

For a practical example, consider working with an ecommerce dataset where a “product” variable contains 1,200 different references. Post-One-Hot Encoding, you will have 1,200 columns. This is more than an aesthetic issue. It becomes a time bomb for your model. Two specific issues arise.

First, a dimensionality explosion, as each new category creates a column, considerably burdening the model, extending training time, and potentially leading to overfitting, particularly with sensitive models like KNNs or decision trees.

Second issue: extreme sparsity. In a matrix of 1,200 columns, there is only one 1 per row, leading to over 95% zeros in most cases. Storing this in a dense format poses a challenge for your RAM!

Therefore, One-Hot Encoding is not scalable without caution. When dealing with high cardinality variables, it’s crucial to think twice before opting for “encode.”

How far can we go? Critical thresholds to know

At what point should One-Hot Encoding be avoided due to a high number of modalities? No absolute rule exists, but community feedback suggests: for more than 10 to 15 unique categories, consider alternatives.

Several problems accrue. Multicollinearity, with the notorious “dummy trap”. Creating a column per modality introduces total redundancy (the sum of all columns always gives 1). Some models may suffer from this, especially linear regressions. Solution: remove a reference column.

Instability with small datasets. With limited observations but numerous categories, the risk of overfitting is maximal. Plus, data can be unbalanced. Some categories might appear just once or twice, creating almost-empty columns that are unusable.

In essence, the more modalities, the more One-Hot becomes a double-edged sword. Fortunately, it’s not the only tool in a data scientist’s arsenal.

When to use One-Hot Encoding… or not

Selecting the right encoding method is critical. Here are cases where One-Hot Encoding is appropriate… and others where it should be avoided.

This approach suits variables with few unique modalities (country, gender, type of contract…), or with balanced data, without rare categories.

It’s also ideal for simple or linear models (logistic regression, SVM, perceptron), or for explainable use cases: churn, customer scoring, marketing.

Conversely, it should be avoided for columns with numerous categories. Ditto for sparsely populated datasets or those with many missing values.

Smart alternatives

When category numbers balloon, One-Hot Encoding reveals its limitations. Fortunately, the machine learning ecosystem provides several alternatives, each suited for specific use cases.

With Label Encoding, each category is replaced by a unique integer. Simple and fast, but best avoided with linear or distance-based models, as it introduces an artificial order (“green” = 1, “red” = 2, “blue” = 3…). Yet, it’s effective for decision trees, which are order-insensitive.

Another strategy is Target Encoding, also known as Mean Encoding. Each category is replaced by that category’s average target variable. For instance: if “Premium” customers purchase at an average of 300 €, encode “Premium” with 300. This is powerful for high-cardinality variables. However, beware of data leakage risk if employed without proper cross-validation. Also, be cautious of overfitting.

Moreover, popularized by deep learning, “embeddings” convert categories into model-learned continuous vectors. Each modality becomes a point in a vector space, near others with similar behaviors. It’s ultra-effective on vast datasets, particularly in NLP or product recommendations.

Lastly, there is Feature Hashing. Instead of explicitly encoding each category, it uses a hash function to assign categories to columns within a fixed number. Less readable but effective in avoiding column explosion. Handle with caution, as it might cause several categories to accumulate in the same “bucket,” leading to a “hash collision”.

With these various strategies, choose the most fitting one according to dataset size, model used, and category nature.

One-Hot Encoding and Deep Learning

In deep learning frameworks, one might think One-Hot Encoding is obsolete. Still, it remains extensively used… in certain cases. It’s valuable in simple classification tasks with few classes, or when preprocessing short sequences (e.g.: characters or tokens in NLP). It’s also relevant where there’s no learnable embedding or limited data.

However, as soon as deeper issues are encountered (NLP, recommendation, user data…), embeddings are preferred, being more compact, meaningful, and model-learned. A typical instance: instead of converting each word into an expansive binary column, we use a 300-dimension vector embedding that captures meaning, context, and similarities.

One-Hot Encoding, a straightforward method to encode your categorical variables

One-Hot Encoding can be considered the Swiss army knife for novice data scientists: easy to understand, compatible with nearly all models, and robust across a broad range of practical cases. However, pitfalls lurk beneath this simplicity: proliferating columns, sparse matrices, overfitting, multicollinearity… all reasons not to use it naively. Well-guided, well-calibrated, it remains an essential preprocessing standard. Misapplied, it can be a drag on performance.

To deepen your understanding of data preprocessing techniques, encoding, machine learning, and deep learning, look no further than the Artificial Intelligence training courses from DataScientest. Our comprehensive programs will empower you to understand AI model foundations, manipulate real datasets, and implement complete pipelines with Scikit-learn and TensorFlow. You will also learn to utilize the best of each method like One-Hot, embeddings, or PCA.

Through a practice-oriented approach and concrete cases, you will be equipped to deploy high-performance models and obtain a recognized certification. Our courses adapt to your pace: whether intensive BootCamp, continuous format, or alternation, with funding options like CPF or France Travail. Join DataScientest and make sense of the data!

You now know everything about One-Hot Encoding. For further information on this topic, see our comprehensive article on Category Encoders and our article on Deep Learning.